Tuesday, 22 September 2009

Infinispan@Devoxx

I will be presenting on Infinispan at Devoxx in Antwerp this November. For details, see:

Remember that you can track where the core Infinispan team will be making public appearances on the Infinispan Talks calendar!

So, see you in Antwerp!

Cheers

Manik

Tags: presentations devoxx

Tuesday, 22 September 2009

Comparing JBoss Cache, Infinispan and Gigaspaces

Chris Wilk has posted a detailed blog post comparing features in JBoss Cache, Infinispan and Gigaspaces.

This well-written article is available here:

and is a good starting point for more in-depth analysis and comparison.

Cheers

Manik

Tags: jboss cache comparison gigaspaces

Tuesday, 15 September 2009

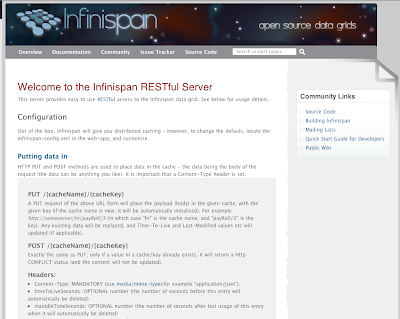

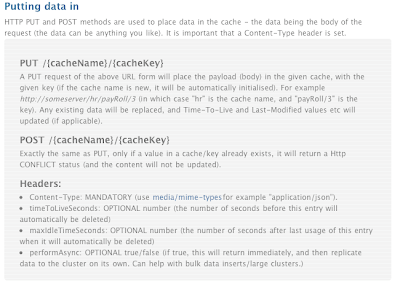

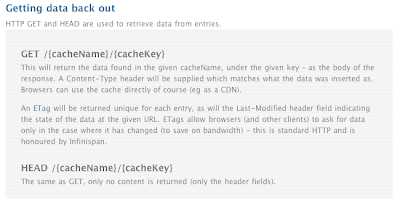

Introducing the Infinispan (REST) server

Introducing the Infinispan RESTful server!

The Infinispan RESTful server combines the whole-grain goodness of RESTEasy (JAX-RS, or JSR-311) with Infinispan to provide a web-ready RESTful data grid.

Recently I (Michael) spoke to Manik about an interesting use case, and he indicated great interest in such a server. It wasn’t a huge amount of work to do the initial version - given that JAX-RS is designed to make things easy.

For those who don’t know: RESTful design uses the well-proven, established HTTP/web standards to provide services (as a simple alternative to WS-*) - if that still isn’t enough, you can read more here. So for Infinispan that means that any type of client can place data in the Infinispan grid.

So what would you use it for? For non-Java clients, or clients where you need to use HTTP as the transport mechanism for caching/data grid needs. A content delivery network (?) - push data into the grid, let Infinispan spread it around and serve it out via the nearest server. See here for details on using HTTP and URLs with it.

In terms of clients - you only need HTTP - no binary dependencies or libraries needed (the wiki page has some samples in Ruby/Python, also in the project source).
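
As a rough illustration of what "you only need HTTP" means, here is a minimal Python sketch that uses nothing but the standard library. The base URL, the `<cache>/<key>` URL layout and the helper names (`entry_url`, `put_request`, `get_request`, `send`) are assumptions made for this example, not the server's documented interface; check the wiki page for the real URLs.

```python
import urllib.request
from urllib.parse import quote

# Assumption: the WAR is deployed at this base URL and exposes each entry
# as <base>/<cache name>/<key>. The real layout is described on the wiki.
BASE_URL = "http://localhost:8080/infinispan/rest"


def entry_url(cache, key, base_url=BASE_URL):
    """Build the URL for one cache entry (hypothetical URL layout)."""
    return "{}/{}/{}".format(
        base_url.rstrip("/"), quote(cache, safe=""), quote(key, safe="")
    )


def put_request(cache, key, data, content_type="text/plain"):
    """Prepare an HTTP PUT that would store `data` (bytes) under cache/key."""
    return urllib.request.Request(
        entry_url(cache, key),
        data=data,
        method="PUT",
        headers={"Content-Type": content_type},
    )


def get_request(cache, key):
    """Prepare an HTTP GET that would read the entry back."""
    return urllib.request.Request(entry_url(cache, key), method="GET")


def send(request, timeout=5):
    """Send a prepared request and return (status code, body bytes)."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.status, response.read()
```

With a server running at the assumed address, `send(put_request("default", "greeting", b"hello"))` followed by `send(get_request("default", "greeting"))` would store a value and read it back. Any language with an HTTP client can do the same.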

Where does it live? The server is a module in Infinispan under /server/rest (for the moment, we may re-arrange the sources at a later date).

Getting it. Currently you can download the WAR from the wiki page, or build it yourself (it is still new, early days). At present this is a WAR file (tested on JBoss AS and Jetty) which should work in most containers - we plan to deliver a standalone server (with an embedded JBoss AS) Real Soon Now.

Questions? Find me on the dev list, or poke around the wiki.

Implemented in Scala: After chatting with Manik and co, we decided this would serve as a good test bed to "test the waters" on Scala - so this module is written in Scala - it worked just fine with RESTEasy and Infinispan (which one would reasonably expect, but it is nice when things do work as advertised!).

Tags: rest server

Friday, 28 August 2009

Podcast on Infinispan

So a lot of folks have asked me for a downloadable slide deck from my recent JUG presentations on Infinispan. I’ve gone a step further and have recorded a short 5-minute intro to data grids and Infinispan as a podcast.

Enjoy! Manik

Tags: podcast

Tuesday, 25 August 2009

First beta now available!

So today I’ve finally cut the much-awaited Infinispan 4.0.0.BETA1. Codenamed Starobrno - after the Czech beer that was omnipresent during early planning sessions of Infinispan - Beta1 is finally feature-complete. This is also the first release where distribution is complete, with rehashing on joins and leaves implemented as well. In addition, a number of bugs reported on previous alpha releases have been tended to.

This is a hugely important release for Infinispan. No more features will be added to 4.0.0, and all efforts will now focus on stability, performance and squashing bugs. And for this we need your help! Download it, try it out, and give us feedback. And you will be rewarded with a rock-solid, lightning-fast final release that you can depend on.

Some things that have changed since the alphas include a better mechanism of naming caches and overriding configurations, and a new configuration XML reference guide. Don’t forget the 5-minute guide for the impatient, and the interactive tutorial to get you started as well.

There are a lot of folk to thank - way too many to list here, but you all know who you are. For a full set of release notes, visit this JIRA page.

Enjoy! Manik

Tags: beta