1. Introduction

Welcome to the official Infinispan User Guide. This comprehensive document will guide you through every last detail of Infinispan.

1.1. What is Infinispan ?

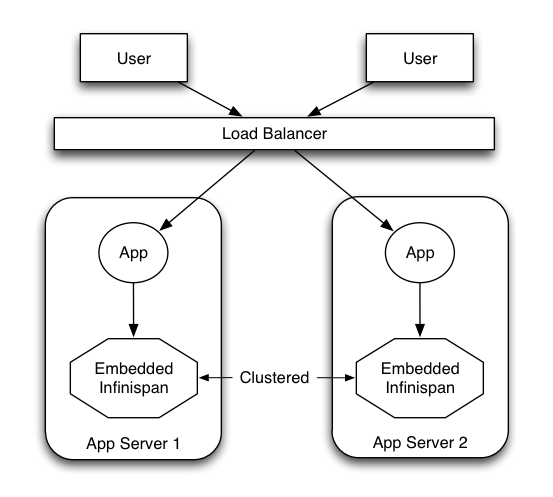

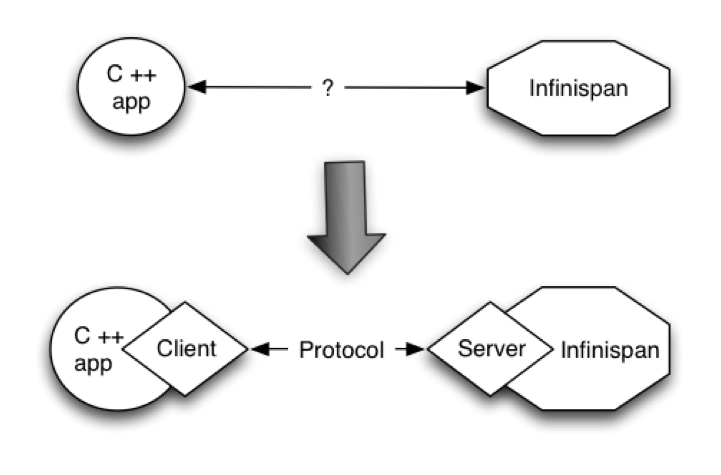

Infinispan is a distributed in-memory key/value data store with optional schema. It can be used both as an embedded Java library and as a language-independent service accessed remotely over a variety of protocols (Hot Rod, REST, and Memcached). It offers advanced functionality such as transactions, events, querying and distributed processing as well as numerous integrations with frameworks such as the JCache API standard, CDI, Hibernate, WildFly, Spring Cache, Spring Session, Lucene, Spark and Hadoop.

1.2. Why use Infinispan ?

1.2.1. As a local cache

The primary use for Infinispan is to provide a fast in-memory cache of frequently accessed data. Suppose you have a slow data source (database, web service, text file, etc): you could load some or all of that data in memory so that it’s just a memory access away from your code. Using Infinispan is better than using a simple ConcurrentHashMap, since it has additional useful features such as expiration and eviction.

1.2.2. As a clustered cache

If your data doesn’t fit in a single node, or you want to invalidate entries across multiple instances of your application, Infinispan can scale horizontally to several hundred nodes.

1.2.3. As a clustering building block for your applications

If you need to make your application cluster-aware, integrate Infinispan and get access to features like topology change notifications, cluster communication and clustered execution.

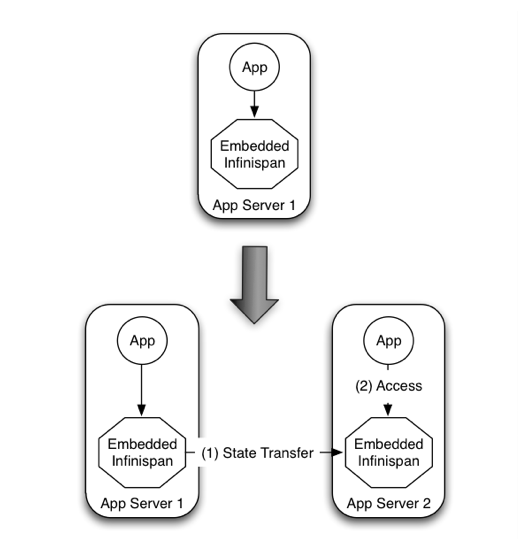

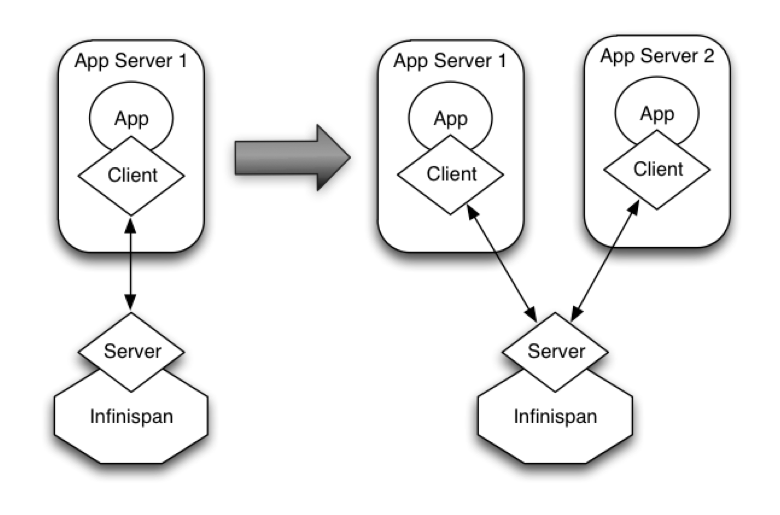

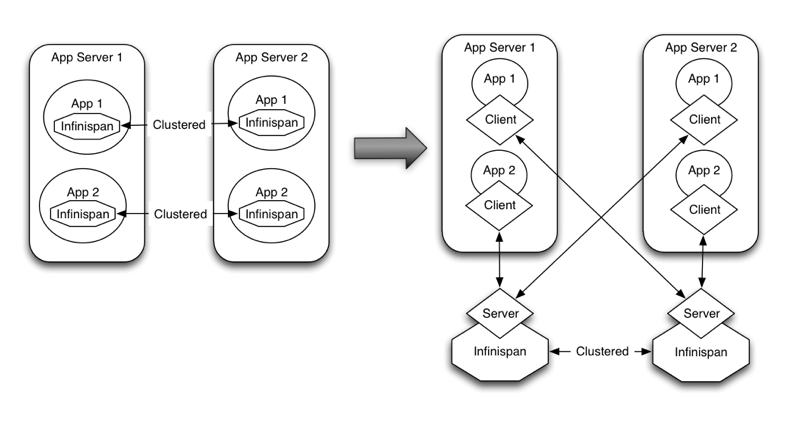

1.2.4. As a remote cache

If you want to be able to scale your caching layer independently from your application, or you need to make your data available to different applications, possibly even using different languages / platforms, use Infinispan Server and its various clients.

2. The Embedded CacheManager

The CacheManager is Infinispan’s main entry point. You use a CacheManager to

-

configure and obtain caches

-

manage and monitor your nodes

-

execute code across a cluster

-

more…

Depending on whether you are embedding Infinispan in your application or you are using it remotely, you will be

dealing with either an EmbeddedCacheManager or a RemoteCacheManager. While they share some methods and properties,

be aware that there are semantic differences between them. The following chapters focus mostly on the embedded

implementation. For details on the remote implementation refer to Hot Rod Java Client.

CacheManagers are heavyweight objects, and we foresee no more than one CacheManager being used per JVM (unless specific setups require more than one; but either way, this would be a minimal and finite number of instances).

The simplest way to create a CacheManager is:

EmbeddedCacheManager manager = new DefaultCacheManager();which starts the most basic, local mode, non-clustered cache manager with no caches. CacheManagers have a lifecycle and the default constructors also call start(). Overloaded versions of the constructors are available, that do not start the CacheManager, although keep in mind that CacheManagers need to be started before they can be used to create Cache instances.

Once constructed, CacheManagers should be made available to any component that require to interact with it via some form of application-wide scope such as JNDI, a ServletContext or via some other mechanism such as an IoC container.

When you are done with a CacheManager, you must stop it so that it can release its resources:

manager.stop();This will ensure all caches within its scope are properly stopped, thread pools are shutdown. If the CacheManager was clustered it will also leave the cluster gracefully.

2.1. Configuration

Infinispan offers both declarative and programmatic configuration.

2.1.1. Configuring caches declaratively

Declarative configuration comes in a form of XML document that adheres to a provided Infinispan configuration XML schema.

Every aspect of Infinispan that can be configured declaratively can also be configured programmatically. In fact, declarative configuration, behind the scenes, invokes the programmatic configuration API as the XML configuration file is being processed. One can even use a combination of these approaches. For example, you can read static XML configuration files and at runtime programmatically tune that same configuration. Or you can use a certain static configuration defined in XML as a starting point or template for defining additional configurations in runtime.

There are two main configuration abstractions in Infinispan: global and cache.

Global configuration defines global settings shared among all cache instances created by a single EmbeddedCacheManager. Shared resources like thread pools, serialization/marshalling settings, transport and network settings, JMX domains are all part of global configuration.

Cache configuration is specific to the actual caching domain itself: it specifies eviction, locking, transaction, clustering, persistence etc.

You can specify as many named cache configurations as you need. One of these caches can be indicated as the default cache,

which is the cache returned by the CacheManager.getCache() API, whereas other named caches are retrieved via the CacheManager.getCache(String name) API.

Whenever they are specified, named caches inherit settings from the default cache while additional behavior can be specified or overridden. Infinispan also provides a very flexible inheritance mechanism, where you can define a hierarchy of configuration templates, allowing multiple caches to share the same settings, or overriding specific parameters as necessary.

| Embedded and Server configuration use different schemas, but we strive to maintain them as compatible as possible so that you can easily migrate between the two. |

One of the major goals of Infinispan is to aim for zero configuration. A simple XML configuration file containing nothing more than a single infinispan element is enough to get you started. The configuration file listed below provides sensible defaults and is perfectly valid.

<infinispan />However, that would only give you the most basic, local mode, non-clustered cache manager with no caches. Non-basic configurations are very likely to use customized global and default cache elements.

Declarative configuration is the most common approach to configuring Infinispan cache instances. In order to read XML configuration files one would typically construct an instance of DefaultCacheManager by pointing to an XML file containing Infinispan configuration. Once the configuration file is read you can obtain reference to the default cache instance.

EmbeddedCacheManager manager = new DefaultCacheManager("my-config-file.xml");

Cache defaultCache = manager.getCache();or any other named instance specified in my-config-file.xml.

Cache someNamedCache = manager.getCache("someNamedCache");The name of the default cache is defined in the <cache-container> element of the XML configuration file, and additional

caches can be configured using the <local-cache>,<distributed-cache>,<invalidation-cache> or <replicated-cache> elements.

The following example shows the simplest possible configuration for each of the cache types supported by Infinispan:

<infinispan>

<cache-container default-cache="local">

<transport cluster="mycluster"/>

<local-cache name="local"/>

<invalidation-cache name="invalidation" mode="SYNC"/>

<replicated-cache name="repl-sync" mode="SYNC"/>

<distributed-cache name="dist-sync" mode="SYNC"/>

</cache-container>

</infinispan>Cache configuration templates

As mentioned above, Infinispan supports the notion of configuration templates. These are full or partial configuration declarations which can be shared among multiple caches or as the basis for more complex configurations.

The following example shows how a configuration named local-template is used to define a cache named local.

<infinispan>

<cache-container default-cache="local">

<!-- template configurations -->

<local-cache-configuration name="local-template">

<expiration interval="10000" lifespan="10" max-idle="10"/>

</local-cache-configuration>

<!-- cache definitions -->

<local-cache name="local" configuration="local-template" />

</cache-container>

</infinispan>Templates can inherit from previously defined templates, augmenting and/or overriding some or all of the configuration elements:

<infinispan>

<cache-container default-cache="local">

<!-- template configurations -->

<local-cache-configuration name="base-template">

<expiration interval="10000" lifespan="10" max-idle="10"/>

</local-cache-configuration>

<local-cache-configuration name="extended-template" configuration="base-template">

<expiration lifespan="20"/>

<memory>

<object size="2000"/>

</memory>

</local-cache-configuration>

<!-- cache definitions -->

<local-cache name="local" configuration="base-template" />

<local-cache name="local-bounded" configuration="extended-template" />

</cache-container>

</infinispan>In the above example, base-template defines a local cache with a specific expiration configuration. The extended-template

configuration inherits from base-template, overriding just a single parameter of the expiration element (all other

attributes are inherited) and adds a memory element. Finally, two caches are defined: local which uses the base-template

configuration and local-bounded which uses the extended-template configuration.

Be aware that for multi-valued elements (such as properties) the inheritance is additive, i.e. the child configuration will be the result of merging the properties from the parent and its own.

|

Cache configuration wildcards

An alternative way to apply templates to caches is to use wildcards in the template name, e.g. basecache*. Any cache whose name matches the template wildcard will inherit that configuration.

<infinispan>

<cache-container>

<local-cache-configuration name="basecache*">

<expiration interval="10500" lifespan="11" max-idle="11"/>

</local-cache-configuration>

<local-cache name="basecache-1"/>

<local-cache name="basecache-2"/>

</cache-container>

</infinispan>Above, caches basecache-1 and basecache-2 will use the basecache* configuration. The configuration will also

be applied when retrieving undefined caches programmatically.

| If a cache name matches multiple wildcards, i.e. it is ambiguous, an exception will be thrown. |

Declarative configuration reference

For more details on the declarative configuration schema, refer to the configuration reference. If you are using XML editing tools for configuration writing you can use the provided Infinispan schema to assist you.

2.1.2. Configuring caches programmatically

Programmatic Infinispan configuration is centered around the CacheManager and ConfigurationBuilder API. Although every single aspect of Infinispan configuration could be set programmatically, the most usual approach is to create a starting point in a form of XML configuration file and then in runtime, if needed, programmatically tune a specific configuration to suit the use case best.

EmbeddedCacheManager manager = new DefaultCacheManager("my-config-file.xml");

Cache defaultCache = manager.getCache();Let’s assume that a new synchronously replicated cache is to be configured programmatically. First, a fresh instance of Configuration object is created using ConfigurationBuilder helper object, and the cache mode is set to synchronous replication. Finally, the configuration is defined/registered with a manager.

Configuration c = new ConfigurationBuilder().clustering().cacheMode(CacheMode.REPL_SYNC).build();

String newCacheName = "repl";

manager.defineConfiguration(newCacheName, c);

Cache<String, String> cache = manager.getCache(newCacheName);The default cache configuration (or any other cache configuration) can be used as a starting point for creation of a new cache.

For example, lets say that infinispan-config-file.xml specifies a replicated cache as a default and that a distributed cache is desired with a specific L1 lifespan while at the same time retaining all other aspects of a default cache.

Therefore, the starting point would be to read an instance of a default Configuration object and use ConfigurationBuilder to construct and modify cache mode and L1 lifespan on a new Configuration object. As a final step the configuration is defined/registered with a manager.

EmbeddedCacheManager manager = new DefaultCacheManager("infinispan-config-file.xml");

Configuration dcc = manager.getDefaultCacheConfiguration();

Configuration c = new ConfigurationBuilder().read(dcc).clustering().cacheMode(CacheMode.DIST_SYNC).l1().lifespan(60000L).build();

String newCacheName = "distributedWithL1";

manager.defineConfiguration(newCacheName, c);

Cache<String, String> cache = manager.getCache(newCacheName);As long as the base configuration is the default named cache, the previous code works perfectly fine. However, other times the base configuration might be another named cache. So, how can new configurations be defined based on other defined caches? Take the previous example and imagine that instead of taking the default cache as base, a named cache called "replicatedCache" is used as base. The code would look something like this:

EmbeddedCacheManager manager = new DefaultCacheManager("infinispan-config-file.xml");

Configuration rc = manager.getCacheConfiguration("replicatedCache");

Configuration c = new ConfigurationBuilder().read(rc).clustering().cacheMode(CacheMode.DIST_SYNC).l1().lifespan(60000L).build();

String newCacheName = "distributedWithL1";

manager.defineConfiguration(newCacheName, c);

Cache<String, String> cache = manager.getCache(newCacheName);Refer to CacheManager , ConfigurationBuilder , Configuration , and GlobalConfiguration javadocs for more details.

ConfigurationBuilder Programmatic Configuration API

While the above paragraph shows how to combine declarative and programmatic configuration, starting from an XML configuration is completely optional. The ConfigurationBuilder fluent interface style allows for easier to write and more readable programmatic configuration. This approach can be used for both the global and the cache level configuration. GlobalConfiguration objects are constructed using GlobalConfigurationBuilder while Configuration objects are built using ConfigurationBuilder. Let’s look at some examples on configuring both global and cache level options with this API:

Configuring the transport

One of the most commonly configured global option is the transport layer, where you indicate how an Infinispan node will discover the others:

GlobalConfiguration globalConfig = new GlobalConfigurationBuilder().transport()

.defaultTransport()

.clusterName("qa-cluster")

.addProperty("configurationFile", "jgroups-tcp.xml")

.machineId("qa-machine").rackId("qa-rack")

.build();Using a custom JChannel

If you want to construct the JGroups JChannel by yourself, you can do so.

| The JChannel must not be already connected. |

GlobalConfigurationBuilder global = new GlobalConfigurationBuilder();

JChannel jchannel = new JChannel();

// Configure the jchannel to your needs.

JGroupsTransport transport = new JGroupsTransport(jchannel);

global.transport().transport(transport);

new DefaultCacheManager(global.build());Enabling JMX MBeans and statistics

Sometimes you might also want to enable collection of global JMX statistics at cache manager level or get information about the transport. To enable global JMX statistics simply do:

GlobalConfiguration globalConfig = new GlobalConfigurationBuilder()

.globalJmxStatistics()

.enable()

.build();Please note that by not enabling (or by explicitly disabling) global JMX statistics your are just turning off statistics collection. The corresponding MBean is still registered and can be used to manage the cache manager in general, but the statistics attributes do not return meaningful values.

Further options at the global JMX statistics level allows you to configure the cache manager name which comes handy when you have multiple cache managers running on the same system, or how to locate the JMX MBean Server:

GlobalConfiguration globalConfig = new GlobalConfigurationBuilder()

.globalJmxStatistics()

.cacheManagerName("SalesCacheManager")

.mBeanServerLookup(new JBossMBeanServerLookup())

.build();Configuring the thread pools

Some of the Infinispan features are powered by a group of the thread pool executors which can also be tweaked at this global level. For example:

GlobalConfiguration globalConfig = new GlobalConfigurationBuilder()

.replicationQueueThreadPool()

.threadPoolFactory(ScheduledThreadPoolExecutorFactory.create())

.build();You can not only configure global, cache manager level, options, but you can also configure cache level options such as the cluster mode:

Configuration config = new ConfigurationBuilder()

.clustering()

.cacheMode(CacheMode.DIST_SYNC)

.sync()

.l1().lifespan(25000L)

.hash().numOwners(3)

.build();Or you can configure eviction and expiration settings:

Configuration config = new ConfigurationBuilder()

.memory()

.size(20000)

.expiration()

.wakeUpInterval(5000L)

.maxIdle(120000L)

.build();Configuring transactions and locking

An application might also want to interact with an Infinispan cache within the boundaries of JTA and to do that you need to configure the transaction layer and optionally tweak the locking settings. When interacting with transactional caches, you might want to enable recovery to deal with transactions that finished with an heuristic outcome and if you do that, you will often want to enable JMX management and statistics gathering too:

Configuration config = new ConfigurationBuilder()

.locking()

.concurrencyLevel(10000).isolationLevel(IsolationLevel.REPEATABLE_READ)

.lockAcquisitionTimeout(12000L).useLockStriping(false).writeSkewCheck(true)

.versioning().enable().scheme(VersioningScheme.SIMPLE)

.transaction()

.transactionManagerLookup(new GenericTransactionManagerLookup())

.recovery()

.jmxStatistics()

.build();Configuring cache stores

Configuring Infinispan with chained cache stores is simple too:

Configuration config = new ConfigurationBuilder()

.persistence().passivation(false)

.addSingleFileStore().location("/tmp").async().enable()

.preload(false).shared(false).threadPoolSize(20).build();Advanced programmatic configuration

The fluent configuration can also be used to configure more advanced or exotic options, such as advanced externalizers:

GlobalConfiguration globalConfig = new GlobalConfigurationBuilder()

.serialization()

.addAdvancedExternalizer(998, new PersonExternalizer())

.addAdvancedExternalizer(999, new AddressExternalizer())

.build();Or, add custom interceptors:

Configuration config = new ConfigurationBuilder()

.customInterceptors().addInterceptor()

.interceptor(new FirstInterceptor()).position(InterceptorConfiguration.Position.FIRST)

.interceptor(new LastInterceptor()).position(InterceptorConfiguration.Position.LAST)

.interceptor(new FixPositionInterceptor()).index(8)

.interceptor(new AfterInterceptor()).after(NonTransactionalLockingInterceptor.class)

.interceptor(new BeforeInterceptor()).before(CallInterceptor.class)

.build();For information on the individual configuration options, please check the configuration guide.

2.1.3. Configuration Migration Tools

The configuration format of Infinispan has changed since schema version 6.0 in order to align the embedded schema with the one used by the server. For this reason, when upgrading to schema 7.x or later, you should use the configuration converter included in the all distribution. Simply invoke it from the command-line passing the old configuration file as the first parameter and the name of the converted file as the second parameter.

To convert on Unix/Linux/macOS:

bin/config-converter.sh oldconfig.xml newconfig.xmlon Windows:

bin\config-converter.bat oldconfig.xml newconfig.xml| If you wish to help write conversion tools from other caching systems, please contact infinispan-dev. |

2.1.4. Clustered Configuration

Infinispan uses JGroups for network communications when in clustered mode. Infinispan ships with pre-configured JGroups stacks that make it easy for you to jump-start a clustered configuration.

Using an external JGroups file

If you are configuring your cache programmatically, all you need to do is:

GlobalConfiguration gc = new GlobalConfigurationBuilder()

.transport().defaultTransport()

.addProperty("configurationFile", "jgroups.xml")

.build();and if you happen to use an XML file to configure Infinispan, just use:

<infinispan>

<jgroups>

<stack-file name="external-file" path="jgroups.xml"/>

</jgroups>

<cache-container default-cache="replicatedCache">

<transport stack="external-file" />

<replicated-cache name="replicatedCache"/>

</cache-container>

...

</infinispan>In both cases above, Infinispan looks for jgroups.xml first in your classpath, and then for an absolute path name if not found in the classpath.

Use one of the pre-configured JGroups files

Infinispan ships with a few different JGroups files (packaged in infinispan-core.jar) which means they will already be on your classpath by default.

All you need to do is specify the file name, e.g., instead of jgroups.xml above, specify /default-configs/default-jgroups-tcp.xml.

The configurations available are:

-

default-jgroups-udp.xml - Uses UDP as a transport, and UDP multicast for discovery. Usually suitable for larger (over 100 nodes) clusters or if you are using replication or invalidation. Minimises opening too many sockets.

-

default-jgroups-tcp.xml - Uses TCP as a transport and UDP multicast for discovery. Better for smaller clusters (under 100 nodes) only if you are using distribution, as TCP is more efficient as a point-to-point protocol

-

default-jgroups-ec2.xml - Uses TCP as a transport and S3_PING for discovery. Suitable on Amazon EC2 nodes where UDP multicast isn’t available.

-

default-jgroups-kubernetes.xml - Uses TCP as a transport and KUBE_PING for discovery. Suitable on Kubernetes and OpenShift nodes where UDP multicast is not always available.

Tuning JGroups settings

The settings above can be further tuned without editing the XML files themselves. Passing in certain system properties to your JVM at startup can affect the behaviour of some of these settings. The table below shows you which settings can be configured in this way. E.g.,

$ java -cp ... -Djgroups.tcp.port=1234 -Djgroups.tcp.address=10.11.12.13

System Property |

Description |

Default |

Required? |

jgroups.udp.mcast_addr |

IP address to use for multicast (both for communications and discovery). Must be a valid Class D IP address, suitable for IP multicast. |

228.6.7.8 |

No |

jgroups.udp.mcast_port |

Port to use for multicast socket |

46655 |

No |

jgroups.udp.ip_ttl |

Specifies the time-to-live (TTL) for IP multicast packets. The value here refers to the number of network hops a packet is allowed to make before it is dropped |

2 |

No |

System Property |

Description |

Default |

Required? |

jgroups.tcp.address |

IP address to use for the TCP transport. |

127.0.0.1 |

No |

jgroups.tcp.port |

Port to use for TCP socket |

7800 |

No |

jgroups.udp.mcast_addr |

IP address to use for multicast (for discovery). Must be a valid Class D IP address, suitable for IP multicast. |

228.6.7.8 |

No |

jgroups.udp.mcast_port |

Port to use for multicast socket |

46655 |

No |

jgroups.udp.ip_ttl |

Specifies the time-to-live (TTL) for IP multicast packets. The value here refers to the number of network hops a packet is allowed to make before it is dropped |

2 |

No |

System Property |

Description |

Default |

Required? |

jgroups.tcp.address |

IP address to use for the TCP transport. |

127.0.0.1 |

No |

jgroups.tcp.port |

Port to use for TCP socket |

7800 |

No |

jgroups.s3.access_key |

The Amazon S3 access key used to access an S3 bucket |

|

No |

jgroups.s3.secret_access_key |

The Amazon S3 secret key used to access an S3 bucket |

|

No |

jgroups.s3.bucket |

Name of the Amazon S3 bucket to use. Must be unique and must already exist |

|

No |

System Property |

Description |

Default |

Required? |

jgroups.tcp.address |

IP address to use for the TCP transport. |

eth0 |

No |

jgroups.tcp.port |

Port to use for TCP socket |

7800 |

No |

Further reading

JGroups also supports more system property overrides, details of which can be found on this page: SystemProps

In addition, the JGroups configuration files shipped with Infinispan are intended as a jumping off point to getting something up and running, and working. More often than not though, you will want to fine-tune your JGroups stack further to extract every ounce of performance from your network equipment. For this, your next stop should be the JGroups manual which has a detailed section on configuring each of the protocols you see in a JGroups configuration file.

2.2. Obtaining caches

After you configure the CacheManager, you can obtain and control caches.

Invoke the getCache() method to obtain caches, as follows:

Cache<String, String> myCache = manager.getCache("myCache");The preceding operation creates a cache named myCache, if it does not already exist, and returns it.

Using the getCache() method creates the cache only on the node where you invoke the method. In other words, it performs a local operation that must be invoked on each node across the cluster. Typically, applications deployed across multiple nodes obtain caches during initialization to ensure that caches are symmetric and exist on each node.

Invoke the createCache() method to create caches dynamically across the entire cluster, as follows:

Cache<String, String> myCache = manager.administration().createCache("myCache", "myTemplate");The preceding operation also automatically creates caches on any nodes that subsequently join the cluster.

Caches that you create with the createCache() method are ephemeral by default. If the entire cluster shuts down, the cache is not automatically created again when it restarts.

Use the PERMANENT flag to ensure that caches can survive restarts, as follows:

Cache<String, String> myCache = manager.administration().withFlags(AdminFlag.PERMANENT).createCache("myCache", "myTemplate");For the PERMANENT flag to take effect, you must enable global state and set a configuration storage provider.

For more information about configuration storage providers, see GlobalStateConfigurationBuilder#configurationStorage().

2.3. Clustering Information

The EmbeddedCacheManager has quite a few methods to provide information

as to how the cluster is operating. The following methods only really make

sense when being used in a clustered environment (that is when a Transport

is configured).

2.3.1. Member Information

When you are using a cluster it is very important to be able to find information about membership in the cluster including who is the owner of the cluster.

The getMembers() method returns all of the nodes in the current cluster.

The getCoordinator() method will tell you which one of the members is the coordinator of the cluster. For most intents you shouldn’t need to care who the coordinator is. You can use isCoordinator() method directly to see if the local node is the coordinator as well.

2.3.2. Other methods

This method provides you access to the underlying Transport that is used to send messages to other nodes. In most cases a user wouldn’t ever need to go to this level, but if you want to get Transport specific information (in this case JGroups) you can use this mechanism.

The stats provided here are coalesced from all of the active caches in this manager. These stats can be useful to see if there is something wrong going on with your cluster overall.

3. The Cache API

3.1. The Cache interface

Infinispan’s Caches are manipulated through the Cache interface.

A Cache exposes simple methods for adding, retrieving and removing entries, including atomic mechanisms exposed by the JDK’s ConcurrentMap interface. Based on the cache mode used, invoking these methods will trigger a number of things to happen, potentially even including replicating an entry to a remote node or looking up an entry from a remote node, or potentially a cache store.

| For simple usage, using the Cache API should be no different from using the JDK Map API, and hence migrating from simple in-memory caches based on a Map to Infinispan’s Cache should be trivial. |

3.1.1. Performance Concerns of Certain Map Methods

Certain methods exposed in Map have certain performance consequences when used with Infinispan, such as

size() ,

values() ,

keySet() and

entrySet() .

Specific methods on the keySet, values and entrySet are fine for use please see their Javadoc for further details.

Attempting to perform these operations globally would have large performance impact as well as become a scalability bottleneck. As such, these methods should only be used for informational or debugging purposes only.

It should be noted that using certain flags with the withFlags method can mitigate some of these concerns, please check each method’s documentation for more details.

For more performance tips, have a look at our Performance Guide.

3.1.2. Mortal and Immortal Data

Further to simply storing entries, Infinispan’s cache API allows you to attach mortality information to data. For example, simply using put(key, value) would create an immortal entry, i.e., an entry that lives in the cache forever, until it is removed (or evicted from memory to prevent running out of memory). If, however, you put data in the cache using put(key, value, lifespan, timeunit) , this creates a mortal entry, i.e., an entry that has a fixed lifespan and expires after that lifespan.

In addition to lifespan , Infinispan also supports maxIdle as an additional metric with which to determine expiration. Any combination of lifespans or maxIdles can be used.

3.1.3. Expiration and Mortal Data

See Expiration for more information about using mortal data with Infinispan.

3.1.4. putForExternalRead operation

Infinispan’s Cache class contains a different 'put' operation called putForExternalRead . This operation is particularly useful when Infinispan is used as a temporary cache for data that is persisted elsewhere. Under heavy read scenarios, contention in the cache should not delay the real transactions at hand, since caching should just be an optimization and not something that gets in the way.

To achieve this, putForExternalRead acts as a put call that only operates if the key is not present in the cache, and fails fast and silently if another thread is trying to store the same key at the same time. In this particular scenario, caching data is a way to optimise the system and it’s not desirable that a failure in caching affects the on-going transaction, hence why failure is handled differently. putForExternalRead is considered to be a fast operation because regardless of whether it’s successful or not, it doesn’t wait for any locks, and so returns to the caller promptly.

To understand how to use this operation, let’s look at basic example. Imagine a cache of Person instances, each keyed by a PersonId , whose data originates in a separate data store. The following code shows the most common pattern of using putForExternalRead within the context of this example:

// Id of the person to look up, provided by the application

PersonId id = ...;

// Get a reference to the cache where person instances will be stored

Cache<PersonId, Person> cache = ...;

// First, check whether the cache contains the person instance

// associated with with the given id

Person cachedPerson = cache.get(id);

if (cachedPerson == null) {

// The person is not cached yet, so query the data store with the id

Person person = dataStore.lookup(id);

// Cache the person along with the id so that future requests can

// retrieve it from memory rather than going to the data store

cache.putForExternalRead(id, person);

} else {

// The person was found in the cache, so return it to the application

return cachedPerson;

}Please note that putForExternalRead should never be used as a mechanism to update the cache with a new Person instance originating from application execution (i.e. from a transaction that modifies a Person’s address). When updating cached values, please use the standard put operation, otherwise the possibility of caching corrupt data is likely.

3.2. The AdvancedCache interface

In addition to the simple Cache interface, Infinispan offers an AdvancedCache interface, geared towards extension authors. The AdvancedCache offers the ability to inject custom interceptors, access certain internal components and to apply flags to alter the default behavior of certain cache methods. The following code snippet depicts how an AdvancedCache can be obtained:

AdvancedCache advancedCache = cache.getAdvancedCache();3.2.1. Flags

Flags are applied to regular cache methods to alter the behavior of certain methods. For a list of all available flags, and their effects, see the Flag enumeration. Flags are applied using AdvancedCache.withFlags() . This builder method can be used to apply any number of flags to a cache invocation, for example:

advancedCache.withFlags(Flag.CACHE_MODE_LOCAL, Flag.SKIP_LOCKING)

.withFlags(Flag.FORCE_SYNCHRONOUS)

.put("hello", "world");3.2.2. Custom Interceptors

The AdvancedCache interface also offers advanced developers a mechanism with which to attach custom interceptors. Custom interceptors allow developers to alter the behavior of the cache API methods, and the AdvancedCache interface allows developers to attach these interceptors programmatically, at run-time. See the AdvancedCache Javadocs for more details.

For more information on writing custom interceptors, see Custom Interceptors.

3.3. Listeners and Notifications

Infinispan offers a listener API, where clients can register for and get notified when events take place. This annotation-driven API applies to 2 different levels: cache level events and cache manager level events.

Events trigger a notification which is dispatched to listeners. Listeners are simple POJO s annotated with @Listener and registered using the methods defined in the Listenable interface.

| Both Cache and CacheManager implement Listenable, which means you can attach listeners to either a cache or a cache manager, to receive either cache-level or cache manager-level notifications. |

For example, the following class defines a listener to print out some information every time a new entry is added to the cache:

@Listener

public class PrintWhenAdded {

@CacheEntryCreated

public void print(CacheEntryCreatedEvent event) {

System.out.println("New entry " + event.getKey() + " created in the cache");

}

}For more comprehensive examples, please see the Javadocs for @Listener.

3.3.1. Cache-level notifications

Cache-level events occur on a per-cache basis, and by default are only raised on nodes where the events occur. Note in a distributed cache these events are only raised on the owners of data being affected. Examples of cache-level events are entries being added, removed, modified, etc. These events trigger notifications to listeners registered to a specific cache.

Please see the Javadocs on the org.infinispan.notifications.cachelistener.annotation package for a comprehensive list of all cache-level notifications, and their respective method-level annotations.

| Please refer to the Javadocs on the org.infinispan.notifications.cachelistener.annotation package for the list of cache-level notifications available in Infinispan. |

Cluster Listeners

The cluster listeners should be used when it is desirable to listen to the cache events on a single node.

To do so all that is required is set to annotate your listener as being clustered.

@Listener (clustered = true)

public class MyClusterListener { .... }There are some limitations to cluster listeners from a non clustered listener.

-

A cluster listener can only listen to

@CacheEntryModified,@CacheEntryCreated,@CacheEntryRemovedand@CacheEntryExpiredevents. Note this means any other type of event will not be listened to for this listener. -

Only the post event is sent to a cluster listener, the pre event is ignored.

Event filtering and conversion

All applicable events on the node where the listener is installed will be raised to the listener. It is possible to dynamically filter what events are raised by using a KeyFilter (only allows filtering on keys) or CacheEventFilter (used to filter for keys, old value, old metadata, new value, new metadata, whether command was retried, if the event is before the event (ie. isPre) and also the command type).

The example here shows a simple KeyFilter that will only allow events to be raised when an event modified the entry for the key Only Me.

public class SpecificKeyFilter implements KeyFilter<String> {

private final String keyToAccept;

public SpecificKeyFilter(String keyToAccept) {

if (keyToAccept == null) {

throw new NullPointerException();

}

this.keyToAccept = keyToAccept;

}

boolean accept(String key) {

return keyToAccept.equals(key);

}

}

...

cache.addListener(listener, new SpecificKeyFilter("Only Me"));

...This can be useful when you want to limit what events you receive in a more efficient manner.

There is also a CacheEventConverter that can be supplied that allows for converting a value to another before raising the event. This can be nice to modularize any code that does value conversions.

| The mentioned filters and converters are especially beneficial when used in conjunction with a Cluster Listener. This is because the filtering and conversion is done on the node where the event originated and not on the node where event is listened to. This can provide benefits of not having to replicate events across the cluster (filter) or even have reduced payloads (converter). |

Initial State Events

When a listener is installed it will only be notified of events after it is fully installed.

It may be desirable to get the current state of the cache contents upon first registration of listener by having an event generated of type @CacheEntryCreated for each element in the cache. Any additionally generated events during this initial phase will be queued until appropriate events have been raised.

| This only works for clustered listeners at this time. ISPN-4608 covers adding this for non clustered listeners. |

Duplicate Events

It is possible in a non transactional cache to receive duplicate events. This is possible when the primary owner of a key goes down while trying to perform a write operation such as a put.

Infinispan internally will rectify the put operation by sending it to the new primary owner for the given key automatically, however there are no guarantees in regards to if the write was first replicated to backups. Thus more than 1 of the following write events (CacheEntryCreatedEvent, CacheEntryModifiedEvent & CacheEntryRemovedEvent) may be sent on a single operation.

If more than one event is generated Infinispan will mark the event that it was generated by a retried command to help the user to know when this occurs without having to pay attention to view changes.

@Listener

public class MyRetryListener {

@CacheEntryModified

public void entryModified(CacheEntryModifiedEvent event) {

if (event.isCommandRetried()) {

// Do something

}

}

}Also when using a CacheEventFilter or CacheEventConverter the EventType contains a method isRetry to tell if the event was generated due to retry.

3.3.2. Cache manager-level notifications

Cache manager-level events occur on a cache manager. These too are global and cluster-wide, but involve events that affect all caches created by a single cache manager. Examples of cache manager-level events are nodes joining or leaving a cluster, or caches starting or stopping.

Please see the Javadocs on the org.infinispan.notifications.cachemanagerlistener.annotation package for a comprehensive list of all cache manager-level notifications, and their respective method-level annotations.

3.3.3. Synchronicity of events

By default, all notifications are dispatched in the same thread that generates the event. This means that you must write your listener such that it does not block or do anything that takes too long, as it would prevent the thread from progressing. Alternatively, you could annotate your listener as asynchronous , in which case a separate thread pool will be used to dispatch the notification and prevent blocking the event originating thread. To do this, simply annotate your listener such:

@Listener (sync = false)

public class MyAsyncListener { .... }Asynchronous thread pool

To tune the thread pool used to dispatch such asynchronous notifications, use the <listener-executor /> XML element in your configuration file.

3.4. Asynchronous API

In addition to synchronous API methods like Cache.put() , Cache.remove() , etc., Infinispan also has an asynchronous, non-blocking API where you can achieve the same results in a non-blocking fashion.

These methods are named in a similar fashion to their blocking counterparts, with "Async" appended. E.g., Cache.putAsync() , Cache.removeAsync() , etc. These asynchronous counterparts return a Future containing the actual result of the operation.

For example, in a cache parameterized as Cache<String, String>, Cache.put(String key, String value) returns a String.

Cache.putAsync(String key, String value) would return a Future<String>.

3.4.1. Why use such an API?

Non-blocking APIs are powerful in that they provide all of the guarantees of synchronous communications - with the ability to handle communication failures and exceptions - with the ease of not having to block until a call completes. This allows you to better harness parallelism in your system. For example:

Set<Future<?>> futures = new HashSet<Future<?>>();

futures.add(cache.putAsync(key1, value1)); // does not block

futures.add(cache.putAsync(key2, value2)); // does not block

futures.add(cache.putAsync(key3, value3)); // does not block

// the remote calls for the 3 puts will effectively be executed

// in parallel, particularly useful if running in distributed mode

// and the 3 keys would typically be pushed to 3 different nodes

// in the cluster

// check that the puts completed successfully

for (Future<?> f: futures) f.get();3.4.2. Which processes actually happen asynchronously?

There are 4 things in Infinispan that can be considered to be on the critical path of a typical write operation. These are, in order of cost:

-

network calls

-

marshalling

-

writing to a cache store (optional)

-

locking

As of Infinispan 4.0, using the async methods will take the network calls and marshalling off the critical path. For various technical reasons, writing to a cache store and acquiring locks, however, still happens in the caller’s thread. In future, we plan to take these offline as well. See this developer mail list thread about this topic.

3.4.3. Notifying futures

Strictly, these methods do not return JDK Futures, but rather a sub-interface known as a NotifyingFuture . The main difference is that you can attach a listener to a NotifyingFuture such that you could be notified when the future completes. Here is an example of making use of a notifying future:

FutureListener futureListener = new FutureListener() {

public void futureDone(Future future) {

try {

future.get();

} catch (Exception e) {

// Future did not complete successfully

System.out.println("Help!");

}

}

};

cache.putAsync("key", "value").attachListener(futureListener);3.4.4. Further reading

The Javadocs on the Cache interface has some examples on using the asynchronous API, as does this article by Manik Surtani introducing the API.

3.5. Invocation Flags

An important aspect of getting the most of Infinispan is the use of per-invocation flags in order to provide specific behaviour to each particular cache call. By doing this, some important optimizations can be implemented potentially saving precious time and network resources. One of the most popular usages of flags can be found right in Cache API, underneath the putForExternalRead() method which is used to load an Infinispan cache with data read from an external resource. In order to make this call efficient, Infinispan basically calls a normal put operation passing the following flags: FAIL_SILENTLY , FORCE_ASYNCHRONOUS , ZERO_LOCK_ACQUISITION_TIMEOUT

What Infinispan is doing here is effectively saying that when putting data read from external read, it will use an almost-zero lock acquisition time and that if the locks cannot be acquired, it will fail silently without throwing any exception related to lock acquisition. It also specifies that regardless of the cache mode, if the cache is clustered, it will replicate asynchronously and so won’t wait for responses from other nodes. The combination of all these flags make this kind of operation very efficient, and the efficiency comes from the fact this type of putForExternalRead calls are used with the knowledge that client can always head back to a persistent store of some sorts to retrieve the data that should be stored in memory. So, any attempt to store the data is just a best effort and if not possible, the client should try again if there’s a cache miss.

3.5.1. Examples

If you want to use these or any other flags available, which by the way are described in detail the Flag enumeration , you simply need to get hold of the advanced cache and add the flags you need via the withFlags() method call. For example:

Cache cache = ...

cache.getAdvancedCache()

.withFlags(Flag.SKIP_CACHE_STORE, Flag.CACHE_MODE_LOCAL)

.put("local", "only");It’s worth noting that these flags are only active for the duration of the cache operation. If the same flags need to be used in several invocations, even if they’re in the same transaction, withFlags() needs to be called repeatedly. Clearly, if the cache operation is to be replicated in another node, the flags are carried over to the remote nodes as well.

Suppressing return values from a put() or remove()

Another very important use case is when you want a write operation such as put() to not return the previous value. To do that, you need to use two flags to make sure that in a distributed environment, no remote lookup is done to potentially get previous value, and if the cache is configured with a cache loader, to avoid loading the previous value from the cache store. You can see these two flags in action in the following example:

Cache cache = ...

cache.getAdvancedCache()

.withFlags(Flag.SKIP_REMOTE_LOOKUP, Flag.SKIP_CACHE_LOAD)

.put("local", "only")For more information, please check the Flag enumeration javadoc.

3.6. Tree API Module

Infinispan’s tree API module offers clients the possibility of storing data using a tree-structure like API. This API is similar to the one provided by JBoss Cache, hence the tree module is perfect for those users wanting to migrate their applications from JBoss Cache to Infinispan, who want to limit changes their codebase as part of the migration. Besides, it’s important to understand that Infinispan provides this tree API much more efficiently than JBoss Cache did, so if you’re a user of the tree API in JBoss Cache, you should consider migrating to Infinispan.

3.6.1. What is Tree API about?

The aim of this API is to store information in a hierarchical way. The hierarchy is defined using paths represented as Fqn or fully qualified names , for example: /this/is/a/fqn/path or /another/path . In the hierarchy, there’s a special path called root which represents the starting point of all paths and it’s represented as: /

Each FQN path is represented as a node where users can store data using a key/value pair style API (i.e. a Map). For example, in /persons/john , you could store information belonging to John, for example: surname=Smith, birthdate=05/02/1980…etc.

Please remember that users should not use root as a place to store data. Instead, users should define their own paths and store data there. The following sections will delve into the practical aspects of this API.

3.6.2. Using the Tree API

Dependencies

For your application to use the tree API, you need to import infinispan-tree.jar which can be located in the Infinispan binary distributions, or you can simply add a dependency to this module in your pom.xml:

<dependencies>

...

<dependency>

<groupId>org.infinispan</groupId>

<artifactId>infinispan-tree</artifactId>

<version>${version.infinispan}</version>

</dependency>

...

</dependencies>Replace ${version.infinispan} with the appropriate version of Infinispan.

3.6.3. Creating a Tree Cache

The first step to use the tree API is to actually create a tree cache. To do so, you need to create an Infinispan Cache as you’d normally do, and using the TreeCacheFactory , create an instance of TreeCache . A very important note to remember here is that the Cache instance passed to the factory must be configured with invocation batching. For example:

import org.infinispan.config.Configuration;

import org.infinispan.tree.TreeCacheFactory;

import org.infinispan.tree.TreeCache;

...

Configuration config = new Configuration();

config.setInvocationBatchingEnabled(true);

Cache cache = new DefaultCacheManager(config).getCache();

TreeCache treeCache = TreeCacheFactory.createTreeCache(cache);3.6.4. Manipulating data in a Tree Cache

The Tree API effectively provides two ways to interact with the data:

Via TreeCache convenience methods: These methods are located within the TreeCache interface and enable users to store , retrieve , move , remove …etc data with a single call that takes the Fqn , in String or Fqn format, and the data involved in the call. For example:

treeCache.put("/persons/john", "surname", "Smith");Or:

import org.infinispan.tree.Fqn;

...

Fqn johnFqn = Fqn.fromString("persons/john");

Calendar calendar = Calendar.getInstance();

calendar.set(1980, 5, 2);

treeCache.put(johnFqn, "birthdate", calendar.getTime()));Via Node API: It allows finer control over the individual nodes that form the FQN, allowing manipulation of nodes relative to a particular node. For example:

import org.infinispan.tree.Node;

...

TreeCache treeCache = ...

Fqn johnFqn = Fqn.fromElements("persons", "john");

Node<String, Object> john = treeCache.getRoot().addChild(johnFqn);

john.put("surname", "Smith");Or:

Node persons = treeCache.getRoot().addChild(Fqn.fromString("persons"));

Node<String, Object> john = persons.addChild(Fqn.fromString("john"));

john.put("surname", "Smith");Or even:

Fqn personsFqn = Fqn.fromString("persons");

Fqn johnFqn = Fqn.fromRelative(personsFqn, Fqn.fromString("john"));

Node<String, Object> john = treeCache.getRoot().addChild(johnFqn);

john.put("surname", "Smith");Node<String, Object> john = ...

Node persons = john.getParent();Or:

Set<Node<String, Object>> personsChildren = persons.getChildren();3.6.5. Common Operations

In the previous section, some of the most used operations, such as addition and retrieval, have been shown. However, there are other important operations that are worth mentioning, such as remove:

You can for example remove an entire node, i.e. /persons/john , using:

treeCache.removeNode("/persons/john");Or remove a child node, i.e. persons that a child of root, via:

treeCache.getRoot().removeChild(Fqn.fromString("persons"));You can also remove a particular key/value pair in a node:

Node john = treeCache.getRoot().getChild(Fqn.fromElements("persons", "john"));

john.remove("surname");Or you can remove all data in a node with:

Node john = treeCache.getRoot().getChild(Fqn.fromElements("persons", "john"));

john.clearData();Another important operation supported by Tree API is the ability to move nodes around in the tree. Imagine we have a node called "john" which is located under root node. The following example is going to show how to we can move "john" node to be under "persons" node:

Current tree structure:

/persons /john

Moving trees from one FQN to another:

Node john = treeCache.getRoot().addChild(Fqn.fromString("john"));

Node persons = treeCache.getRoot().getChild(Fqn.fromString("persons"));

treeCache.move(john.getFqn(), persons.getFqn());Final tree structure:

/persons/john

3.6.6. Locking in the Tree API

Understanding when and how locks are acquired when manipulating the tree structure is important in order to maximise the performance of any client application interacting against the tree, while at the same time maintaining consistency.

Locking on the tree API happens on a per node basis. So, if you’re putting or updating a key/value under a particular node, a write lock is acquired for that node. In such case, no write locks are acquired for parent node of the node being modified, and no locks are acquired for children nodes.

If you’re adding or removing a node, the parent is not locked for writing. In JBoss Cache, this behaviour was configurable with the default being that parent was not locked for insertion or removal.

Finally, when a node is moved, the node that’s been moved and any of its children are locked, but also the target node and the new location of the moved node and its children. To understand this better, let’s look at an example:

Imagine you have a hierarchy like this and we want to move c/ to be underneath b/:

/ --|-- / \ a c | | b e | d

The end result would be something like this:

/ | a | b --|-- / \ d c | e

To make this move, locks would have been acquired on:

-

/a/b - because it’s the parent underneath which the data will be put

-

/c and /c/e - because they’re the nodes that are being moved

-

/a/b/c and /a/b/c/e - because that’s new target location for the nodes being moved

3.6.7. Listeners for tree cache events

The current Infinispan listeners have been designed with key/value store notifications in mind, and hence they do not map to tree cache events correctly. Tree cache specific listeners that map directly to tree cache events (i.e. adding a child…etc) are desirable but these are not yet available. If you’re interested in this type of listeners, please follow this issue to find out about any progress in this area.

3.7. Functional Map API

Infinispan includes an experimental API for interacting with your data which takes advantage of the functional programming additions and improved asynchronous programming capabilities available in Java 8.

Infinispan’s Functional Map API is a distilled map-like asynchronous API which uses functions to interact with data.

3.7.1. Asynchronous and Lazy

Being an asynchronous API, all methods that return a single result,

return a CompletableFuture which wraps the result, so you can use the

resources of your system more efficiently by having the possibility to

receive callbacks when the

CompletableFuture

has completed, or you can chain or compose them with other CompletableFuture.

For those operations that return multiple results, the API returns

instances of a

Traversable

interface which offers a lazy pull-style

API for working with multiple results.

Traversable

, being a lazy pull-style API, can still be asynchronous underneath

since the user can decide to work on the traversable at a later stage,

and the

Traversable

implementation itself can decide when to compute

those results.

3.7.2. Function transparency

Since the content of the functions is transparent to Infinispan, the API

has been split into 3 interfaces for read-only (

ReadOnlyMap

), read-write (

ReadWriteMap

) and write-only (

WriteOnlyMap

) operations respectively, in order to provide hints to the Infinispan

internals on the type of work needed to support functions.

3.7.3. Constructing Functional Maps

To construct any of the read-only, write-only or read-write map

instances, an Infinispan

AdvancedCache

is required, which is retrieved from the Cache Manager, and using the

AdvancedCache

, static method

factory methods are used to create

ReadOnlyMap

,

ReadWriteMap

or

WriteOnlyMap

import org.infinispan.commons.api.functional.FunctionalMap.*;

import org.infinispan.functional.impl.*;

AdvancedCache<String, String> cache = ...

FunctionalMapImpl<String, String> functionalMap = FunctionalMapImpl.create(cache);

ReadOnlyMap<String, String> readOnlyMap = ReadOnlyMapImpl.create(functionalMap);

WriteOnlyMap<String, String> writeOnlyMap = WriteOnlyMapImpl.create(functionalMap);

ReadWriteMap<String, String> readWriteMap = ReadWriteMapImpl.create(functionalMap);| At this stage, the Functional Map API is experimental and hence the way FunctionalMap, ReadOnlyMap, WriteOnlyMap and ReadWriteMap are constructed is temporary. |

3.7.4. Read-Only Map API

Read-only operations have the advantage that no locks are acquired for the duration of the operation. Here’s an example on how to the equivalent operation for Map.get(K):

import org.infinispan.commons.api.functional.EntryView.*;

import org.infinispan.commons.api.functional.FunctionalMap.*;

ReadOnlyMap<String, String> readOnlyMap = ...

CompletableFuture<Optional<String>> readFuture = readOnlyMap.eval("key1", ReadEntryView::find);

readFuture.thenAccept(System.out::println);Read-only map also exposes operations to retrieve multiple keys in one go:

import org.infinispan.commons.api.functional.EntryView.*;

import org.infinispan.commons.api.functional.FunctionalMap.*;

import org.infinispan.commons.api.functional.Traversable;

ReadOnlyMap<String, String> readOnlyMap = ...

Set<String> keys = new HashSet<>(Arrays.asList("key1", "key2"));

Traversable<String> values = readOnlyMap.evalMany(keys, ReadEntryView::get);

values.forEach(System.out::println);Finally, read-only map also exposes methods to read all existing keys as well as entries, which include both key and value information.

Read-Only Entry View

The function parameters for read-only maps provide the user with a read-only entry view to interact with the data in the cache, which include these operations:

-

key()method returns the key for which this function is being executed. -

find()returns a Java 8Optionalwrapping the value if present, otherwise it returns an empty optional. Unless the value is guaranteed to be associated with the key, it’s recommended to usefind()to verify whether there’s a value associated with the key. -

get()returns the value associated with the key. If the key has no value associated with it, callingget()throws aNoSuchElementException.get()can be considered as a shortcut ofReadEntryView.find().get()which should be used only when the caller has guarantees that there’s definitely a value associated with the key. -

findMetaParam(Class<T> type)allows metadata parameter information associated with the cache entry to be looked up, for example: entry lifespan, last accessed time…etc. See Metadata Parameter Handling to find out more.

3.7.5. Write-Only Map API

Write-only operations include operations that insert or update data in the cache and also removals. Crucially, a write-only operation does not attempt to read any previous value associated with the key. This is an important optimization since that means neither the cluster nor any persistence stores will be looked up to retrieve previous values. In the main Infinispan Cache, this kind of optimization was achieved using a local-only per-invocation flag, but the use case is so common that in this new functional API, this optimization is provided as a first-class citizen.

Using

write-only map API

, an operation equivalent to

javax.cache.Cache (JCache)

's void returning

put

can be achieved this way, followed by an attempt to read the stored

value using the read-only map API:

import org.infinispan.commons.api.functional.EntryView.*;

import org.infinispan.commons.api.functional.FunctionalMap.*;

WriteOnlyMap<String, String> writeOnlyMap = ...

ReadOnlyMap<String, String> readOnlyMap = ...

CompletableFuture<Void> writeFuture = writeOnlyMap.eval("key1", "value1",

(v, view) -> view.set(v));

CompletableFuture<String> readFuture = writeFuture.thenCompose(r ->

readOnlyMap.eval("key1", ReadEntryView::get));

readFuture.thenAccept(System.out::println);Multiple key/value pairs can be stored in one go using

evalMany

API:

WriteOnlyMap<String, String> writeOnlyMap = ...

Map<K, String> data = new HashMap<>();

data.put("key1", "value1");

data.put("key2", "value2");

CompletableFuture<Void> writerAllFuture = writeOnlyMap.evalMany(data, (v, view) -> view.set(v));

writerAllFuture.thenAccept(x -> "Write completed");To remove all contents of the cache, there are two possibilities with

different semantics. If using

evalAll

each cached entry is iterated over and the function is called

with that entry’s information. Using this method also results in listeners being invoked. See functional listeners for more information.

WriteOnlyMap<String, String> writeOnlyMap = ...

CompletableFuture<Void> removeAllFuture = writeOnlyMap.evalAll(WriteEntryView::remove);

removeAllFuture.thenAccept(x -> "All entries removed");The alternative way to remove all entries is to call

truncate

operation which clears the entire cache contents in one go without

invoking any listeners and is best-effort:

WriteOnlyMap<String, String> writeOnlyMap = ...

CompletableFuture<Void> truncateFuture = writeOnlyMap.truncate();

truncateFuture.thenAccept(x -> "Cache contents cleared");Write-Only Entry View

The function parameters for write-only maps provide the user with a write-only entry view to modify the data in the cache, which include these operations:

-

set(V, MetaParam.Writable…)method allows for a new value to be associated with the cache entry for which this function is executed, and it optionally takes zero or more metadata parameters to be stored along with the value. See Metadata Parameter Handling for more information. -

remove()method removes the cache entry, including both value and metadata parameters associated with this key.

3.7.6. Read-Write Map API

The final type of operations we have are readwrite operations, and within

this category CAS-like (CompareAndSwap) operations can be found.

This type of operations require previous value associated with the key

to be read and for locks to be acquired before executing the function.

The vast majority of operations within

ConcurrentMap

and

JCache

APIs fall within this category, and they can easily be implemented using the

read-write map API

. Moreover, with

read-write map API

, you can make CASlike comparisons not only based on value equality

but based on metadata parameter equality such as version information,

and you can send back previous value or boolean instances to signal

whether the CASlike comparison succeeded.

Implementing a write operation that returns the previous value associated with the cache entry is easy to achieve with the read-write map API:

import org.infinispan.commons.api.functional.EntryView.*;

import org.infinispan.commons.api.functional.FunctionalMap.*;

ReadWriteMap<String, String> readWriteMap = ...

CompletableFuture<Optional<String>> readWriteFuture = readWriteMap.eval("key1", "value1",

(v, view) -> {

Optional<V> prev = rw.find();

view.set(v);

return prev;

});

readWriteFuture.thenAccept(System.out::println);ConcurrentMap.replace(K, V, V)

is a replace function that compares the

value present in the map and if it’s equals to the value passed in as

first parameter, the second value is stored, returning a boolean

indicating whether the replace was successfully completed. This operation

can easily be implemented using the read-write map API:

ReadWriteMap<String, String> readWriteMap = ...

String oldValue = "old-value";

CompletableFuture<Boolean> replaceFuture = readWriteMap.eval("key1", "value1", (v, view) -> {

return view.find().map(prev -> {

if (prev.equals(oldValue)) {

rw.set(v);

return true; // previous value present and equals to the expected one

}

return false; // previous value associated with key does not match

}).orElse(false); // no value associated with this key

});

replaceFuture.thenAccept(replaced -> System.out.printf("Value was replaced? %s%n", replaced));

The function in the example above captures oldValue which is an

external value to the function which is valid use case.

|

Read-write map API contains evalMany and evalAll operations which behave

similar to the write-only map offerings, except that they enable previous

value and metadata parameters to be read.

Read-Write Entry View

The function parameters for read-write maps provide the user with the possibility to query the information associated with the key, including value and metadata parameters, and the user can also use this read-write entry view to modify the data in the cache.

The operations are exposed by read-write entry views are a union of the operations exposed by read-only entry views and write-only entry views.

3.7.7. Metadata Parameter Handling

Metadata parameters provide extra information about the cache entry, such as version information, lifespan, last accessed/used time…etc. Some of these can be provided by the user, e.g. version, lifespan…etc, but some others are computed internally and can only be queried, e.g. last accessed/used time.

The functional map API provides a flexible way to store metadata parameters

along with an cache entry. To be able to store a metadata parameter, it must

extend

MetaParam.Writable

interface, and implement the methods to allow the

internal logic to extra the data. Storing is done via the

set(V, MetaParam.Writable…) method in the write-only entry view or read-write entry view function parameters.

Querying metadata parameters is available via the

findMetaParam(Class)

method

available via read-write entry view or

read-only entry views or function parameters.

Here is an example showing how to store metadata parameters and how to query them:

import java.time.Duration;

import org.infinispan.commons.api.functional.EntryView.*;

import org.infinispan.commons.api.functional.FunctionalMap.*;

import org.infinispan.commons.api.functional.MetaParam.*;

WriteOnlyMap<String, String> writeOnlyMap = ...

ReadOnlyMap<String, String> readOnlyMap = ...

CompletableFuture<Void> writeFuture = writeOnlyMap.eval("key1", "value1",

(v, view) -> view.set(v, new MetaLifespan(Duration.ofHours(1).toMillis())));

CompletableFuture<MetaLifespan> readFuture = writeFuture.thenCompose(r ->

readOnlyMap.eval("key1", view -> view.findMetaParam(MetaLifespan.class).get()));

readFuture.thenAccept(System.out::println);If the metadata parameter is generic, for example

MetaEntryVersion<T>

, retrieving the metadata parameter along with a specific type can be tricky

if using .class static helper in a class because it does not return a

Class<T> but only Class, and hence any generic information in the class is

lost:

ReadOnlyMap<String, String> readOnlyMap = ...

CompletableFuture<String> readFuture = readOnlyMap.eval("key1", view -> {

// If caller depends on the typed information, this is not an ideal way to retrieve it

// If the caller does not depend on the specific type, this works just fine.

Optional<MetaEntryVersion> version = view.findMetaParam(MetaEntryVersion.class);

return view.get();

});When generic information is important the user can define a static helper

method that coerces the static class retrieval to the type requested,

and then use that helper method in the call to findMetaParam:

class MetaEntryVersion<T> implements MetaParam.Writable<EntryVersion<T>> {

...

public static <T> T type() { return (T) MetaEntryVersion.class; }

...

}

ReadOnlyMap<String, String> readOnlyMap = ...

CompletableFuture<String> readFuture = readOnlyMap.eval("key1", view -> {

// The caller wants guarantees that the metadata parameter for version is numeric

// e.g. to query the actual version information

Optional<MetaEntryVersion<Long>> version = view.findMetaParam(MetaEntryVersion.type());

return view.get();

});Finally, users are free to create new instances of metadata parameters to suit their needs. They are stored and retrieved in the very same way as done for the metadata parameters already provided by the functional map API.

3.7.8. Invocation Parameter

Per-invocation parameters

are applied to regular functional map API calls to

alter the behaviour of certain aspects. Adding per invocation parameters is

done using the

withParams(Param<?>…)

method.

Param.FutureMode

tweaks whether a method returning a

CompletableFuture

will span a thread to invoke the method, or instead will use the caller

thread. By default, whenever a call is made to a method returning a

CompletableFuture

, a separate thread will be span to execute the method asynchronously.

However, if the caller will immediately block waiting for the

CompletableFuture

to complete, spanning a different thread is wasteful, and hence

Param.FutureMode.COMPLETED

can be passed as per-invocation parameter to avoid creating that extra thread. Example:

import org.infinispan.commons.api.functional.EntryView.*;

import org.infinispan.commons.api.functional.FunctionalMap.*;

import org.infinispan.commons.api.functional.Param.*;

ReadOnlyMap<String, String> readOnlyMap = ...

ReadOnlyMap<String, String> readOnlyMapCompleted = readOnlyMap.withParams(FutureMode.COMPLETED);

Optional<String> readFuture = readOnlyMapCompleted.eval("key1", ReadEntryView::find).get();Param.PersistenceMode controls whether a write operation will be propagated

to a persistence store. The default behaviour is for all write-operations

to be propagated to the persistence store if the cache is configured with

a persistence store. By passing PersistenceMode.SKIP as parameter,

the write operation skips the persistence store and its effects are only

seen in the in-memory contents of the cache. PersistenceMode.SKIP can

be used to implement an

Cache.evict()

method which removes data from memory but leaves the persistence store

untouched:

import org.infinispan.commons.api.functional.EntryView.*;

import org.infinispan.commons.api.functional.FunctionalMap.*;

import org.infinispan.commons.api.functional.Param.*;

WriteOnlyMap<String, String> writeOnlyMap = ...

WriteOnlyMap<String, String> skiPersistMap = writeOnlyMap.withParams(PersistenceMode.SKIP);

CompletableFuture<Void> removeFuture = skiPersistMap.eval("key1", WriteEntryView::remove);Note that there’s no need for another PersistenceMode option to skip reading from the persistence store, because a write operation can skip reading previous value from the store by calling a write-only operation via the WriteOnlyMap.

Finally, new Param implementations are normally provided by the functional map API since they tweak how the internal logic works. So, for the most part of users, they should limit themselves to using the Param instances exposed by the API. The exception to this rule would be advanced users who decide to add new interceptors to the internal stack. These users have the ability to query these parameters within the interceptors.

3.7.9. Functional Listeners

The functional map offers a listener API, where clients can register for and get notified when events take place. These notifications are post-event, so that means the events are received after the event has happened.

The listeners that can be registered are split into two categories: write listeners and read-write listeners.

Write Listeners

Write listeners enable user to register listeners for any cache entry write events that happen in either a read-write or write-only functional map.

Listeners for write events cannot distinguish between cache entry created and cache entry modify/update events because they don’t have access to the previous value. All they know is that a new non-null entry has been written.

However, write event listeners can distinguish between entry removals

and cache entry create/modify-update events because they can query

what the new entry’s value via

ReadEntryView.find()

method.

Adding a write listener is done via the WriteListeners interface

which is accessible via both

ReadWriteMap.listeners()

and

WriteOnlyMap.listeners()

method.

A write listener implementation can be defined either passing a function

to

onWrite(Consumer<ReadEntryView<K, V>>)

method, or passing a

WriteListener implementation to

add(WriteListener<K, V>)

method.

Either way, all these methods return an

AutoCloseable

instance that can be used to de-register the function listener:

import org.infinispan.commons.api.functional.EntryView.*;

import org.infinispan.commons.api.functional.FunctionalMap.*;

import org.infinispan.commons.api.functional.Listeners.WriteListeners.WriteListener;

WriteOnlyMap<String, String> woMap = ...

AutoCloseable writeFunctionCloseHandler = woMap.listeners().onWrite(written -> {

// `written` is a ReadEntryView of the written entry

System.out.printf("Written: %s%n", written.get());

});

AutoCloseable writeCloseHanlder = woMap.listeners().add(new WriteListener<String, String>() {

@Override

public void onWrite(ReadEntryView<K, V> written) {

System.out.printf("Written: %s%n", written.get());

}

});

// Either wrap handler in a try section to have it auto close...

try(writeFunctionCloseHandler) {

// Write entries using read-write or write-only functional map API

...

}

// Or close manually

writeCloseHanlder.close();Read-Write Listeners

Read-write listeners enable users to register listeners for cache entry created, modified and removed events, and also register listeners for any cache entry write events.

Entry created, modified and removed events can only be fired when these originate on a read-write functional map, since this is the only one that guarantees that the previous value has been read, and hence the differentiation between create, modified and removed can be fully guaranteed.

Adding a read-write listener is done via the

ReadWriteListeners

interface which is accessible via

ReadWriteMap.listeners()

method.

If interested in only one of the event types, the simplest way to add a

listener is to pass a function to either

onCreate

,

onModify

or

onRemove

methods. All these methods return an AutoCloseable instance that can be

used to de-register the function listener:

import org.infinispan.commons.api.functional.EntryView.*;

import org.infinispan.commons.api.functional.FunctionalMap.*;

ReadWriteMap<String, String> rwMap = ...

AutoCloseable createClose = rwMap.listeners().onCreate(created -> {

// `created` is a ReadEntryView of the created entry

System.out.printf("Created: %s%n", created.get());

});

AutoCloseable modifyClose = rwMap.listeners().onModify((before, after) -> {

// `before` is a ReadEntryView of the entry before update

// `after` is a ReadEntryView of the entry after update

System.out.printf("Before: %s%n", before.get());

System.out.printf("After: %s%n", after.get());

});

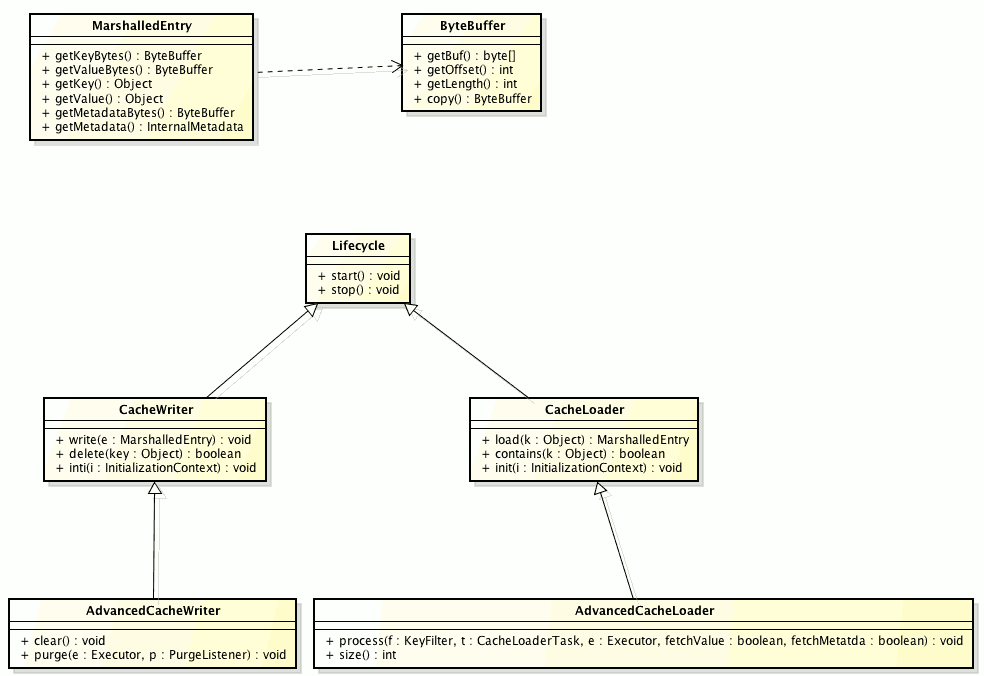

AutoCloseable removeClose = rwMap.listeners().onRemove(removed -> {