Create and configure Infinispan caches with the mode and capabilities that suit your application requirements. You can configure caches with expiration to remove stale entries or use eviction to control cache size. You can also add persistent storage to caches, enable partition handling for clustered caches, set up transactions, and more.

1. Infinispan caches

Infinispan caches provide flexible, in-memory data stores that you can configure to suit use cases such as:

- Boosting application performance with high-speed local caches.
- Optimizing databases by decreasing the volume of write operations.
- Providing resiliency and durability for consistent data across clusters.

1.1. Cache API

Cache<K,V> is the central interface for Infinispan and extends java.util.concurrent.ConcurrentMap.

Cache entries are highly concurrent data structures in key:value format that support a wide and configurable range of data types, from simple strings to much more complex objects.
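Because Cache<K,V> extends ConcurrentMap, code written against the ConcurrentMap interface can work with a cache. The minimal sketch below runs on the JDK alone: it uses a ConcurrentHashMap, and the assumption (not exercised here) is that an Infinispan Cache could be passed to the same hypothetical recordVisit helper.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

class ConcurrentMapDemo {
    // Hypothetical helper written only against the ConcurrentMap interface.
    // Assumption: because Cache<K,V> extends ConcurrentMap<K,V>, an Infinispan
    // Cache<String, Integer> could be passed here instead of a ConcurrentHashMap.
    static int recordVisit(ConcurrentMap<String, Integer> visits, String page) {
        // merge() inserts 1 for a new key or adds 1 to the existing count
        return visits.merge(page, 1, Integer::sum);
    }

    public static void main(String[] args) {
        ConcurrentMap<String, Integer> visits = new ConcurrentHashMap<>();
        recordVisit(visits, "/home");
        recordVisit(visits, "/home");

        // putIfAbsent() writes only when the key has no value yet
        visits.putIfAbsent("/about", 0);
        // replace(key, expected, newValue) is a conditional (compare-and-set) update
        boolean swapped = visits.replace("/about", 0, 10);

        System.out.println(visits.get("/home") + " " + swapped + " " + visits.get("/about")); // 2 true 10
    }
}
```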

1.2. Cache Managers

The CacheManager API is the entry point for interacting with Infinispan.

Cache Managers control the cache lifecycle: creating, modifying, and deleting cache instances.

Cache Managers also provide cluster management and monitoring along with the ability to execute code across nodes.

Infinispan provides two CacheManager implementations:

- EmbeddedCacheManager: Entry point for caches when running Infinispan inside the same Java Virtual Machine (JVM) as the client application.
- RemoteCacheManager: Entry point for caches when running Infinispan Server in its own JVM. When you instantiate a RemoteCacheManager, it establishes a persistent TCP connection to Infinispan Server through the Hot Rod endpoint.

Both embedded and remote

1.3. Cache modes

Infinispan Cache Managers can create and control multiple caches that use different modes. For example, you can use the same Cache Manager for local caches, distributed caches, and caches with invalidation mode.

- Local: Infinispan runs as a single node and never replicates read or write operations on cache entries.
- Replicated: Infinispan replicates all cache entries on all nodes in a cluster and performs local read operations only.
- Distributed: Infinispan replicates cache entries on a subset of nodes in a cluster and assigns entries to fixed owner nodes. Infinispan requests read operations from owner nodes to ensure it returns the correct value.
- Invalidation: Infinispan evicts stale data from all nodes whenever operations modify entries in the cache. Infinispan performs local read operations only.

1.3.1. Comparison of cache modes

The cache mode that you should choose depends on the qualities and guarantees you need for your data.

The following table summarizes the primary differences between cache modes:

| | Simple | Local | Invalidation | Replicated | Distributed | Scattered |
|---|---|---|---|---|---|---|
| Clustered | No | No | Yes | Yes | Yes | Yes |
| Read performance | Highest | High | High | High | Medium | Medium |
| Write performance | Highest | High | Low | Lowest | Medium | Higher |
| Capacity | Single node | Single node | Single node | Smallest node | Cluster | Cluster |
| Availability | Single node | Single node | Single node | All nodes | Owner nodes | Owner nodes |
| Features | No TX, persistence, indexing | All | No indexing | All | All | No TX |

1.4. Local caches

Infinispan offers a local cache mode that is similar to a ConcurrentHashMap.

Caches offer more capabilities than simple maps, including write-through and write-behind to persistent storage as well as management capabilities such as eviction and expiration.

The Infinispan Cache API extends the ConcurrentMap API in Java, making it easy to migrate from a map to an Infinispan cache.

Local cache configuration

```xml
<local-cache name="mycache"
             statistics="true">
  <encoding media-type="application/x-protostream"/>
</local-cache>
```

```json
{
  "local-cache": {
    "name": "mycache",
    "statistics": "true",
    "encoding": {
      "media-type": "application/x-protostream"
    }
  }
}
```

```yaml
localCache:
  name: "mycache"
  statistics: "true"
  encoding:
    mediaType: "application/x-protostream"
```

1.4.1. Simple caches

A simple cache is a type of local cache that disables support for the following capabilities:

- Transactions and invocation batching
- Persistent storage
- Custom interceptors
- Indexing
- Transcoding

However, you can use other Infinispan capabilities with simple caches such as expiration, eviction, statistics, and security features. If you configure a capability that is not compatible with a simple cache, Infinispan throws an exception.

Simple cache configuration

```xml
<local-cache simple-cache="true" />
```

```json
{
  "local-cache" : {
    "simple-cache" : "true"
  }
}
```

```yaml
localCache:
  simpleCache: "true"
```

2. Clustered caches

You can create embedded and remote caches on Infinispan clusters that replicate data across nodes.

2.1. Replicated caches

Infinispan replicates all entries in the cache to all nodes in the cluster. Each node can perform read operations locally.

Replicated caches provide a quick and easy way to share state across a cluster, but they are suitable only for clusters of fewer than ten nodes. Because the number of replication requests scales linearly with the number of nodes in the cluster, using replicated caches with larger clusters reduces performance. However, you can use UDP multicasting for replication requests to improve performance.

Each key has a primary owner, which serializes data container updates in order to provide consistency.

- Synchronous replication blocks the caller (for example, on a cache.put(key, value) call) until the modifications have been replicated successfully to all the nodes in the cluster.
- Asynchronous replication performs replication in the background, and write operations return immediately. Asynchronous replication is not recommended because communication errors, and errors that happen on remote nodes, are not reported to the caller.
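The practical difference between the two modes is whether remote failures reach the caller. The sketch below models it with CompletableFuture on the JDK only; replicate, syncPut, and asyncPut are hypothetical names, and a node whose name starts with "bad" simulates a remote error. It is not how Infinispan's transport is implemented.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;

class ReplicationModeDemo {
    // Hypothetical stand-in for sending one write to one remote node.
    static CompletableFuture<Void> replicate(String node, String key, String value) {
        return CompletableFuture.runAsync(() -> {
            if (node.startsWith("bad")) {
                throw new IllegalStateException("write of " + key + " failed on " + node);
            }
        });
    }

    // Synchronous: wait for every node, so a remote failure propagates to the caller.
    static void syncPut(List<String> nodes, String key, String value) {
        CompletableFuture.allOf(nodes.stream()
                .map(n -> replicate(n, key, value))
                .toArray(CompletableFuture[]::new)).join();
    }

    // Asynchronous: fire the requests and return at once; failures are never observed.
    static void asyncPut(List<String> nodes, String key, String value) {
        nodes.forEach(n -> replicate(n, key, value));
    }

    public static void main(String[] args) {
        List<String> nodes = List.of("node-1", "bad-node-2");
        asyncPut(nodes, "k", "v"); // returns normally even though one node fails
        try {
            syncPut(nodes, "k", "v");
        } catch (CompletionException e) {
            System.out.println("sync caller saw: " + e.getCause().getMessage());
        }
    }
}
```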

If transactions are enabled, write operations are not replicated through the primary owner.

With pessimistic locking, each write triggers a lock message, which is broadcast to all the nodes. During transaction commit, the originator broadcasts a one-phase prepare message and an unlock message (optional). Either the one-phase prepare or the unlock message is fire-and-forget.

With optimistic locking, the originator broadcasts a prepare message, a commit message, and an unlock message (optional). Again, either the one-phase prepare or the unlock message is fire-and-forget.

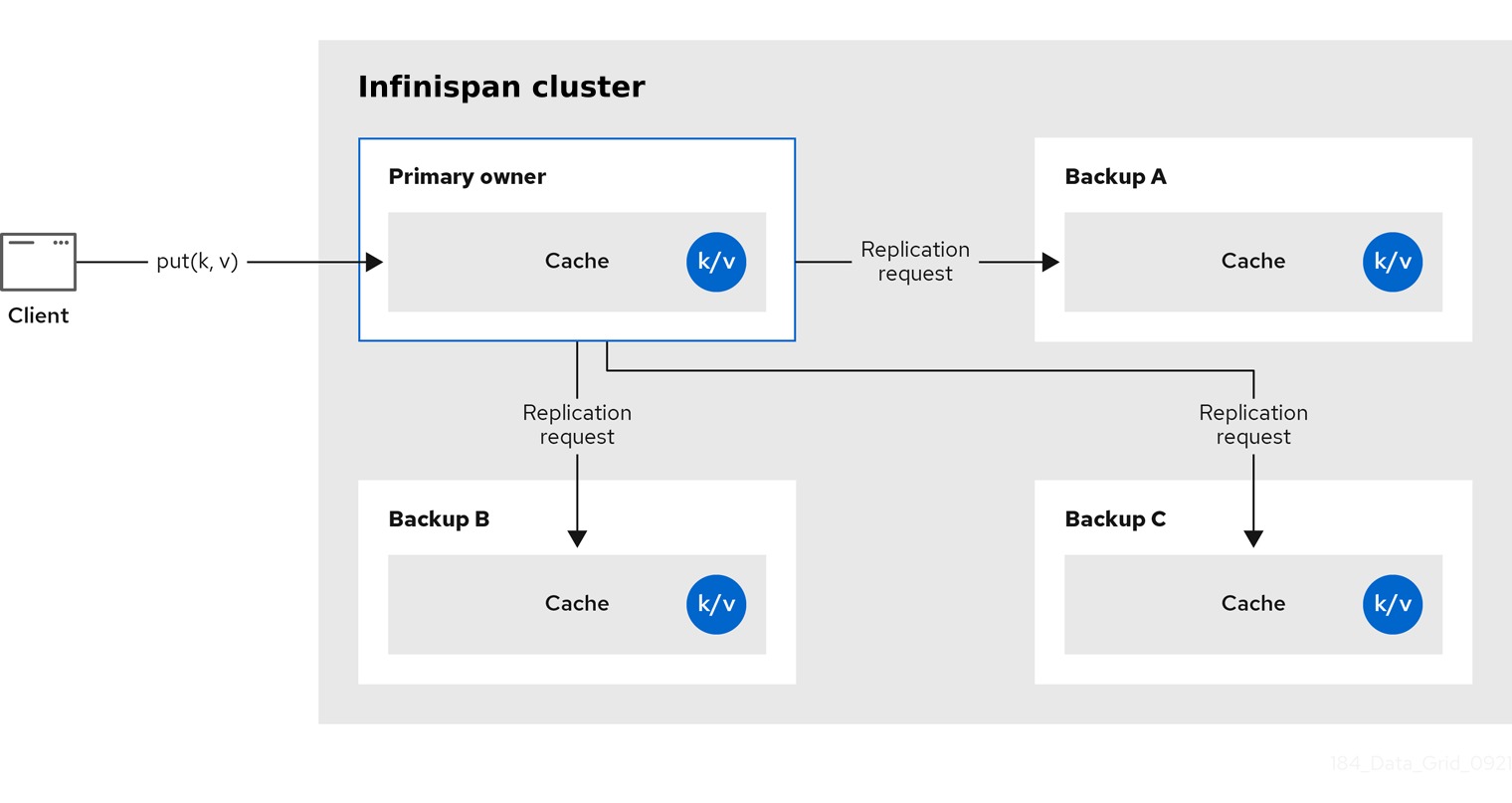

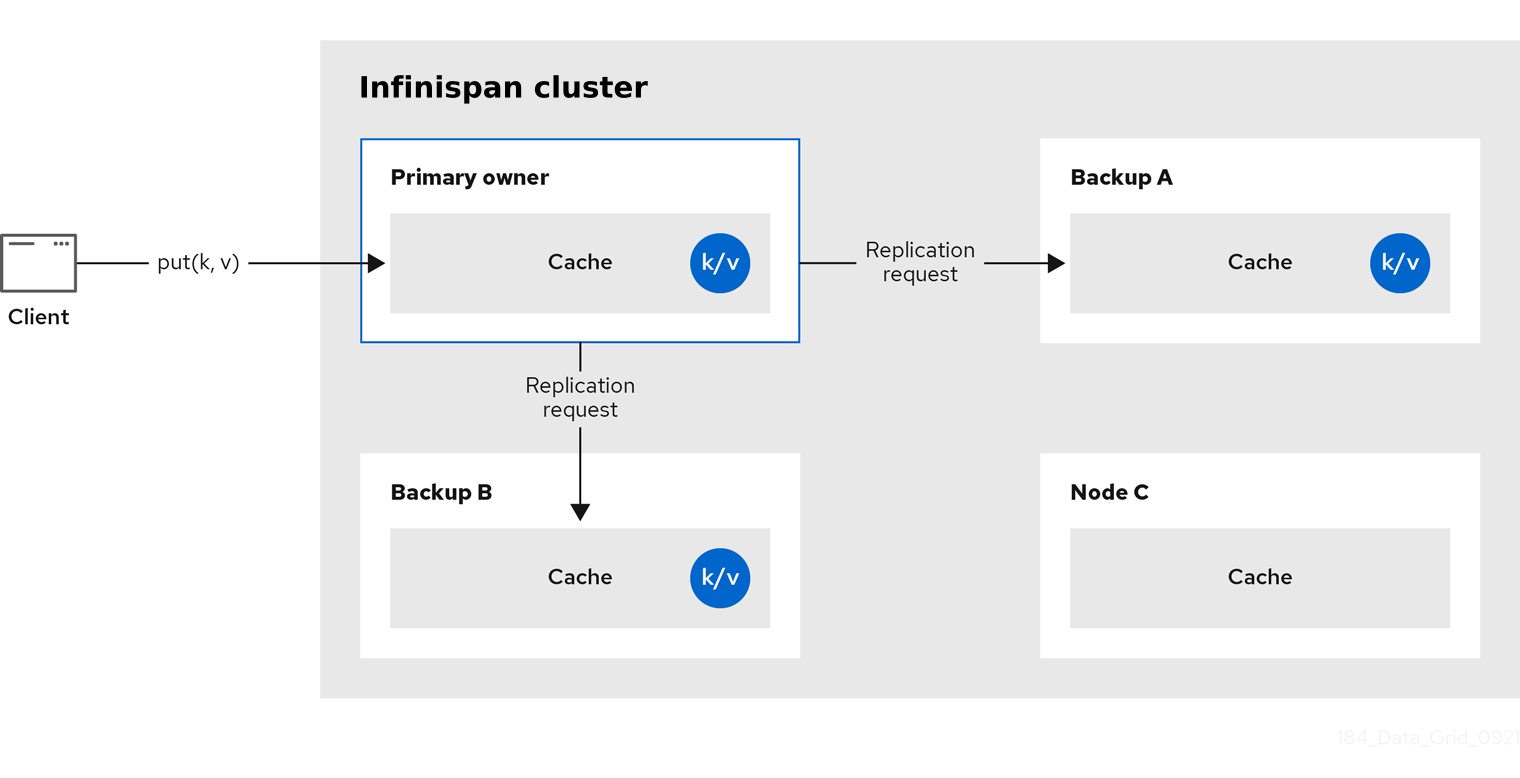

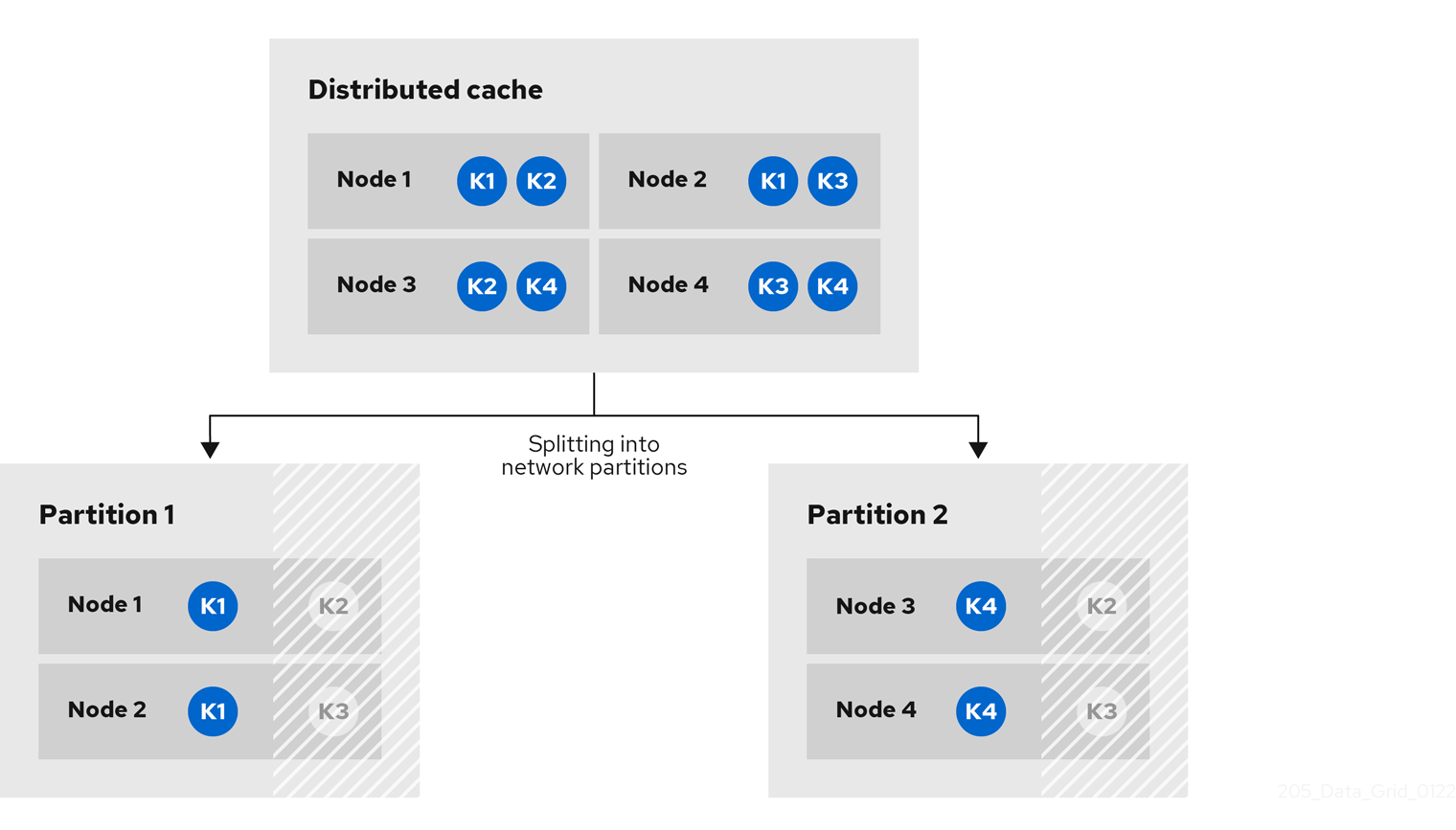

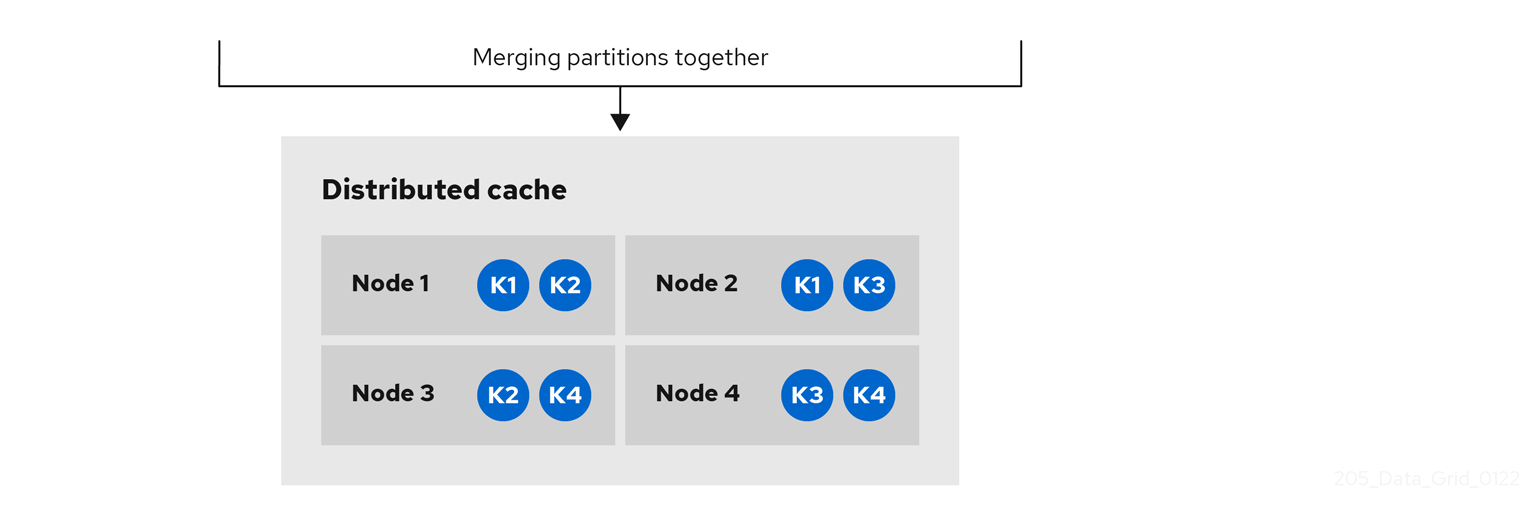

2.2. Distributed caches

Infinispan attempts to keep a fixed number of copies of any entry in the cache, configured as numOwners.

This allows distributed caches to scale linearly, storing more data as nodes are added to the cluster.

As nodes join and leave the cluster, there will be times when a key has more or fewer than numOwners copies.

In particular, if numOwners nodes leave in quick succession, some entries will be lost, so we say that a distributed cache tolerates numOwners - 1 node failures.

The number of copies represents a trade-off between performance and durability of data. The more copies you maintain, the lower performance will be, but also the lower the risk of losing data due to server or network failures.

Infinispan splits the owners of a key into one primary owner, which coordinates writes to the key, and zero or more backup owners.

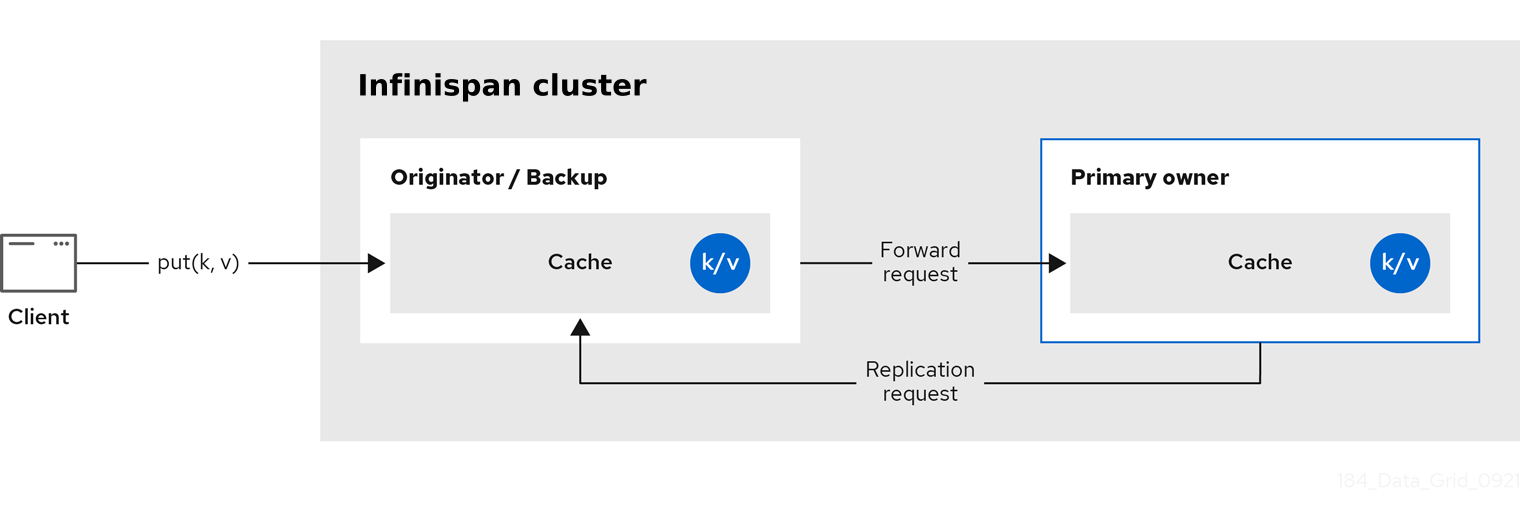

The following diagram shows a write operation that a client sends to a backup owner. In this case the backup node forwards the write to the primary owner, which then replicates the write to the backup.

Read operations request the value from the primary owner. If the primary owner does not respond in a reasonable amount of time, Infinispan requests the value from the backup owners as well.

A read operation may require 0 messages if the key is present in the local cache, or up to 2 * numOwners messages if all the owners are slow.

Write operations result in at most 2 * numOwners messages: one message from the originator to the primary owner, numOwners - 1 messages from the primary owner to the backup nodes, and the corresponding acknowledgment messages.
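The message bounds above are simple arithmetic in numOwners. This sketch only encodes those worst cases, assuming the originator is not itself an owner; it ignores the extra retries that topology changes can cause. The class and method names are hypothetical.

```java
class MessageCounts {
    // Worst-case read: 0 if the key is local, otherwise a request to and a reply
    // from every owner when all owners are slow.
    static int maxReadMessages(int numOwners, boolean keyIsLocal) {
        return keyIsLocal ? 0 : 2 * numOwners;
    }

    // Worst-case write: 1 request to the primary owner, numOwners - 1 requests from
    // the primary to the backups, and one acknowledgment for each request.
    static int maxWriteMessages(int numOwners) {
        int requests = 1 + (numOwners - 1);
        int acknowledgments = requests;
        return requests + acknowledgments;
    }

    public static void main(String[] args) {
        for (int owners = 1; owners <= 3; owners++) {
            System.out.printf("numOwners=%d: read <= %d, write <= %d%n",
                    owners, maxReadMessages(owners, false), maxWriteMessages(owners));
        }
    }
}
```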

Cache topology changes may cause retries and additional messages for both read and write operations.

Asynchronous replication is not recommended because it can lose updates. In addition to losing updates, asynchronous distributed caches can also see a stale value when a thread writes to a key and then immediately reads the same key.

Transactional distributed caches send lock/prepare/commit/unlock messages to the affected nodes only, meaning all nodes that own at least one key affected by the transaction. As an optimization, if the transaction writes to a single key and the originator is the primary owner of the key, lock messages are not replicated.

2.2.1. Read consistency

Even with synchronous replication, distributed caches are not linearizable. Transactional distributed caches do not support serializable or snapshot isolation.

For example, a thread carries out a single put request:

```
cache.get(k) -> v1
cache.put(k, v2)
cache.get(k) -> v2
```

But another thread might see the values in a different order:

```
cache.get(k) -> v2
cache.get(k) -> v1
```

The reason is that a read can return the value from any owner, depending on how fast the primary owner replies. The write is not atomic across all the owners. In fact, the primary commits the update only after it receives a confirmation from the backup. While the primary is waiting for the confirmation message from the backup, reads from the backup will see the new value, but reads from the primary will see the old one.
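The window described above can be replayed deterministically. In this single-threaded sketch two maps stand in for the primary and backup owners of key k (a hypothetical model, not Infinispan's data container): the backup applies v2 first, and the primary commits only afterwards, so a reader served by the backup and then by the primary sees v2 followed by v1.

```java
import java.util.HashMap;
import java.util.Map;

class ReadConsistencyDemo {
    // Two owners of key "k", modelled as plain maps.
    final Map<String, String> primary = new HashMap<>();
    final Map<String, String> backup = new HashMap<>();

    ReadConsistencyDemo() {
        primary.put("k", "v1");
        backup.put("k", "v1");
    }

    // Step 1 of a write: the primary forwards the new value and the backup applies it.
    void backupApplies(String value) {
        backup.put("k", value);
    }

    // Step 2 of a write: after the backup acknowledges, the primary commits.
    void primaryCommits(String value) {
        primary.put("k", value);
    }

    public static void main(String[] args) {
        ReadConsistencyDemo owners = new ReadConsistencyDemo();
        owners.backupApplies("v2");
        // Inside the window: one read served by the backup, the next by the primary.
        String first = owners.backup.get("k");
        String second = owners.primary.get("k");
        System.out.println(first + " then " + second); // v2 then v1
        owners.primaryCommits("v2");
    }
}
```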

2.2.2. Key ownership

Distributed caches split entries into a fixed number of segments and assign each segment to a list of owner nodes. Replicated caches do the same, with the exception that every node is an owner.

The first node in the list of owners is the primary owner. The other nodes in the list are backup owners. When the cache topology changes, because a node joins or leaves the cluster, the segment ownership table is broadcast to every node. This allows nodes to locate keys without making multicast requests or maintaining metadata for each key.

The numSegments property configures the number of segments available.

However, the number of segments cannot change unless the cluster is restarted.

Likewise, the key-to-segment mapping cannot change. Keys must always map to the same segments regardless of cluster topology changes. It is important that the key-to-segment mapping evenly distributes the number of segments allocated to each node while minimizing the number of segments that must move when the cluster topology changes.
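The split between a fixed key-to-segment mapping and a topology-dependent segment ownership table can be sketched as follows. This is a simplified model: Infinispan uses its own hash function and key partitioner, whereas the sketch uses hashCode() modulo numSegments, and the ownership table is hard-coded rather than computed by a consistent hash factory.

```java
import java.util.List;

class SegmentMapping {
    // Key-to-segment: depends only on the key and numSegments, never on topology.
    // Simplification: hashCode() modulo numSegments instead of Infinispan's hashing.
    static int segmentOf(Object key, int numSegments) {
        return Math.floorMod(key.hashCode(), numSegments);
    }

    // Segment ownership table: one owner list per segment, primary owner first.
    // Only this table changes (and is broadcast) when nodes join or leave.
    static String primaryOwner(Object key, List<List<String>> ownershipTable) {
        return ownershipTable.get(segmentOf(key, ownershipTable.size())).get(0);
    }

    public static void main(String[] args) {
        List<List<String>> table = List.of(
                List.of("A", "B"), List.of("B", "C"), List.of("C", "A"), List.of("A", "C"));
        System.out.println("segment=" + segmentOf("user-42", table.size())
                + " primary=" + primaryOwner("user-42", table));
    }
}
```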

| Consistent hash factory implementation | Description |
|---|---|
| | Uses an algorithm based on consistent hashing. Selected by default when server hinting is disabled. This implementation always assigns keys to the same nodes in every cache as long as the cluster is symmetric. In other words, all caches run on all nodes. This implementation does have some negative points in that the load distribution is slightly uneven. It also moves more segments than strictly necessary on a join or leave. |
| | Equivalent to |
| | Achieves a more even distribution than |
| | Equivalent to |
| | Used internally to implement replicated caches. You should never explicitly select this algorithm in a distributed cache. |

Hashing configuration

You can configure ConsistentHashFactory implementations, including custom ones, with embedded caches only.

```xml
<distributed-cache name="distributedCache"
                   owners="2"
                   segments="100"
                   capacity-factor="2" />
```

```java
Configuration c = new ConfigurationBuilder()
      .clustering()
         .cacheMode(CacheMode.DIST_SYNC)
         .hash()
            .numOwners(2)
            .numSegments(100)
            .capacityFactor(2)
      .build();
```

2.2.3. Capacity factors

Capacity factors allocate the number of segments based on resources available to each node in the cluster.

The capacity factor for a node applies both to segments for which that node is the primary owner and to segments for which it is a backup owner. In other words, the capacity factor specifies the total capacity that a node has in comparison to other nodes in the cluster.

The default value is 1 which means that all nodes in the cluster have an equal capacity and Infinispan allocates the same number of segments to all nodes in the cluster.

However, if nodes have different amounts of memory available to them, you can configure the capacity factor so that the Infinispan hashing algorithm assigns each node a number of segments weighted by its capacity.

The value for the capacity factor configuration must be a positive number and can be a fraction such as 1.5.

You can also configure a capacity factor of 0, but this is recommended only for nodes that join the cluster temporarily. Such nodes should use the zero capacity configuration instead.
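As a rough illustration of weighting by capacity, the helper below divides segments in proportion to each node's capacity factor, rounding with the largest-remainder method. The algorithm is an assumption chosen for clarity; Infinispan's consistent hash factories also balance primary and backup ownership and limit segment movement, which this sketch does not.

```java
import java.util.Arrays;

class CapacityFactorSketch {
    // Hypothetical helper: split numSegments among nodes in proportion to their
    // capacity factors (largest-remainder method). Not Infinispan's algorithm.
    // Assumes at least one node has a capacity factor greater than 0.
    static int[] allocate(int numSegments, double[] capacityFactors) {
        double total = Arrays.stream(capacityFactors).sum();
        int n = capacityFactors.length;
        int[] segments = new int[n];
        double[] remainders = new double[n];
        int assigned = 0;
        for (int i = 0; i < n; i++) {
            double exact = numSegments * capacityFactors[i] / total;
            segments[i] = (int) Math.floor(exact);
            // A zero-capacity node never receives a leftover segment.
            remainders[i] = capacityFactors[i] == 0 ? -1 : exact - segments[i];
            assigned += segments[i];
        }
        // Hand the leftover segments to the nodes with the largest fractional parts.
        for (int left = numSegments - assigned; left > 0; left--) {
            int best = 0;
            for (int i = 1; i < n; i++) {
                if (remainders[i] > remainders[best]) {
                    best = i;
                }
            }
            segments[best]++;
            remainders[best] = -1; // at most one leftover segment per node
        }
        return segments;
    }

    public static void main(String[] args) {
        // A node with twice the capacity factor receives twice as many segments.
        System.out.println(Arrays.toString(allocate(100, new double[] {2, 1, 1}))); // [50, 25, 25]
    }
}
```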

Zero capacity nodes

You can configure nodes where the capacity factor is 0 for every cache, user-defined caches, and internal caches.

When defining a zero capacity node, the node does not hold any data.

Zero capacity node configuration

```xml
<infinispan>
  <cache-container zero-capacity-node="true" />
</infinispan>
```

```json
{
  "infinispan" : {
    "cache-container" : {
      "zero-capacity-node" : "true"
    }
  }
}
```

```yaml
infinispan:
  cacheContainer:
    zeroCapacityNode: "true"
```

```java
new GlobalConfigurationBuilder().zeroCapacityNode(true);
```

2.2.4. Level one (L1) caches

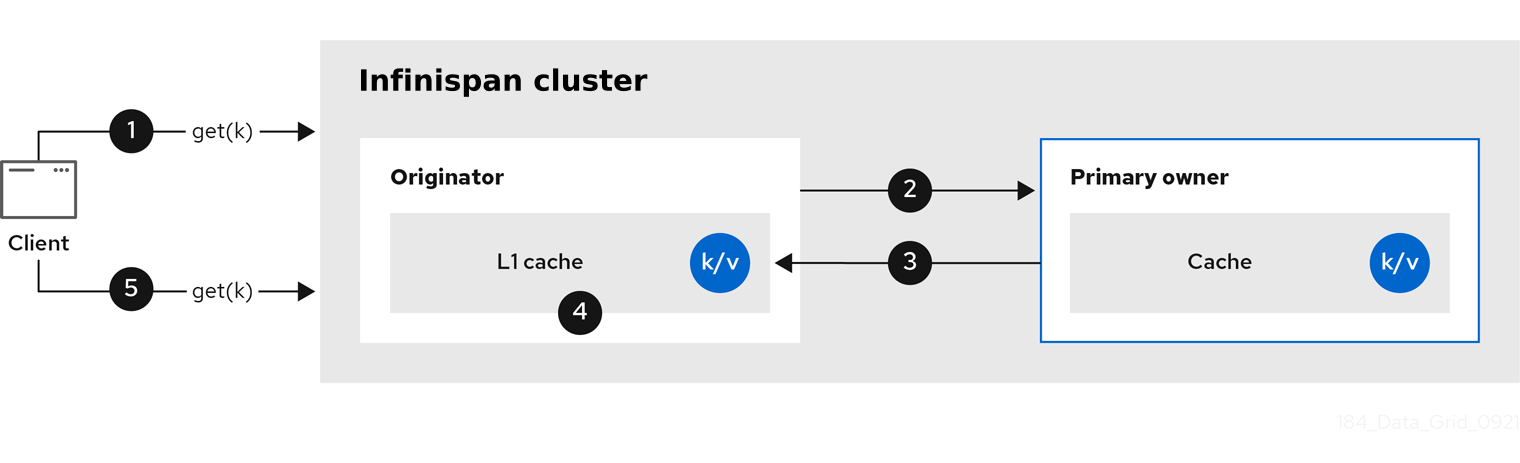

Infinispan nodes create local replicas when they retrieve entries from another node in the cluster. L1 caches avoid repeatedly looking up entries on primary owner nodes, which improves performance.

The following diagram illustrates how L1 caches work:

In the "L1 cache" diagram:

- A client invokes cache.get() to read an entry for which another node in the cluster is the primary owner.
- The originator node forwards the read operation to the primary owner.
- The primary owner returns the key/value entry.
- The originator node creates a local copy.
- Subsequent cache.get() invocations return the local entry instead of forwarding to the primary owner.
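The read path and the invalidation that L1 relies on can be modelled with two maps. In this hypothetical sketch, one map stands in for the primary owner and the other for the originator's L1 replicas; L1 lifespan, cleanup, and eviction are not modelled.

```java
import java.util.HashMap;
import java.util.Map;

class L1CacheSketch {
    // Hypothetical model, not Infinispan's implementation.
    private final Map<String, String> primaryOwner;
    private final Map<String, String> l1 = new HashMap<>();
    int remoteLookups = 0;

    L1CacheSketch(Map<String, String> primaryOwner) {
        this.primaryOwner = primaryOwner;
    }

    String get(String key) {
        String local = l1.get(key);
        if (local != null) {
            return local;                 // served from the local L1 replica
        }
        remoteLookups++;                  // forwarded to the primary owner
        String value = primaryOwner.get(key);
        if (value != null) {
            l1.put(key, value);           // keep a local copy for later reads
        }
        return value;
    }

    // Invoked when the primary owner broadcasts an invalidation for a modified key.
    void invalidate(String key) {
        l1.remove(key);
    }

    public static void main(String[] args) {
        Map<String, String> owner = new HashMap<>(Map.of("k", "v1"));
        L1CacheSketch originator = new L1CacheSketch(owner);
        originator.get("k");
        originator.get("k");
        System.out.println("remote lookups: " + originator.remoteLookups); // 1
        owner.put("k", "v2");
        originator.invalidate("k");       // without this, the next get() returns stale v1
        System.out.println(originator.get("k")); // v2
    }
}
```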

L1 caching performance

Enabling L1 improves performance for read operations but requires primary owner nodes to broadcast invalidation messages when entries are modified. This ensures that Infinispan removes any out-of-date replicas across the cluster. However, this also decreases the performance of write operations and increases memory usage, reducing the overall capacity of caches.

Infinispan evicts and expires local replicas, or L1 entries, like any other cache entry.

L1 cache configuration

```xml
<distributed-cache l1-lifespan="5s"
                   l1-cleanup-interval="1m">
</distributed-cache>
```

```json
{
  "distributed-cache": {
    "l1-lifespan": "5000",
    "l1-cleanup-interval": "60000"
  }
}
```

```yaml
distributedCache:
  l1Lifespan: "5000"
  l1CleanupInterval: "60000"
```

```java
ConfigurationBuilder builder = new ConfigurationBuilder();
builder.clustering().cacheMode(CacheMode.DIST_SYNC)
       .l1()
          .lifespan(5000, TimeUnit.MILLISECONDS)
          .cleanupTaskFrequency(60000, TimeUnit.MILLISECONDS);
```

2.2.5. Server hinting

Server hinting increases availability of data in distributed caches by replicating entries across as many servers, racks, and data centers as possible.

Server hinting applies only to distributed caches.

When Infinispan distributes the copies of your data, it follows the order of precedence: site, rack, machine, and node. All of the configuration attributes are optional. For example, when you specify only the rack IDs, then Infinispan distributes the copies across different racks and nodes.
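The order of precedence can be illustrated with a greedy owner selection: after the primary owner, each backup is the remaining node that differs at the highest level (site, then rack, then machine). This is a hypothetical sketch of the idea only; Infinispan's topology-aware consistent hash factories also balance segments across nodes. The site, rack, and machine IDs reuse the values from the server hinting configuration example.

```java
import java.util.ArrayList;
import java.util.List;

class ServerHintingSketch {
    static final class Node {
        final String name, site, rack, machine;

        Node(String name, String site, String rack, String machine) {
            this.name = name;
            this.site = site;
            this.rack = rack;
            this.machine = machine;
        }
    }

    // 3 = new site, 2 = new rack, 1 = new machine, 0 = only a different node.
    static int distinctness(Node c, List<Node> owners) {
        boolean newSite = owners.stream().noneMatch(o -> o.site.equals(c.site));
        boolean newRack = owners.stream().noneMatch(o -> o.site.equals(c.site) && o.rack.equals(c.rack));
        boolean newMachine = owners.stream().noneMatch(o -> o.site.equals(c.site)
                && o.rack.equals(c.rack) && o.machine.equals(c.machine));
        return newSite ? 3 : newRack ? 2 : newMachine ? 1 : 0;
    }

    // Greedily adds backup owners, each time picking the remaining node that is
    // most distinct from the owners chosen so far (site > rack > machine > node).
    static List<Node> chooseOwners(Node primary, List<Node> cluster, int numOwners) {
        List<Node> owners = new ArrayList<>();
        owners.add(primary);
        List<Node> candidates = new ArrayList<>(cluster);
        candidates.remove(primary);
        while (owners.size() < numOwners && !candidates.isEmpty()) {
            Node best = candidates.get(0);
            for (Node candidate : candidates) {
                if (distinctness(candidate, owners) > distinctness(best, owners)) {
                    best = candidate;
                }
            }
            owners.add(best);
            candidates.remove(best);
        }
        return owners;
    }

    public static void main(String[] args) {
        Node n1 = new Node("n1", "US-WestCoast", "Rack01", "LinuxServer01");
        Node n2 = new Node("n2", "US-WestCoast", "Rack01", "LinuxServer02");
        Node n3 = new Node("n3", "US-WestCoast", "Rack02", "LinuxServer03");
        for (Node owner : chooseOwners(n1, List.of(n1, n2, n3), 2)) {
            System.out.println(owner.name); // n1, then n3 (a different rack)
        }
    }
}
```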

Server hinting can impact cluster rebalancing operations by moving more segments than necessary if the number of segments for the cache is too low.

An alternative for clusters in multiple data centers is cross-site replication.

Server hinting configuration

```xml
<cache-container>
  <transport cluster="MyCluster"
             machine="LinuxServer01"
             rack="Rack01"
             site="US-WestCoast"/>
</cache-container>
```

```json
{
  "infinispan" : {
    "cache-container" : {
      "transport" : {
        "cluster" : "MyCluster",
        "machine" : "LinuxServer01",
        "rack" : "Rack01",
        "site" : "US-WestCoast"
      }
    }
  }
}
```

```yaml
cacheContainer:
  transport:
    cluster: "MyCluster"
    machine: "LinuxServer01"
    rack: "Rack01"
    site: "US-WestCoast"
```

```java
GlobalConfigurationBuilder global = GlobalConfigurationBuilder.defaultClusteredBuilder();
// transport() returns the transport builder, so configure it on its own statement
global.transport()
      .clusterName("MyCluster")
      .machineId("LinuxServer01")
      .rackId("Rack01")
      .siteId("US-WestCoast");
```

2.2.6. Key affinity service

In a distributed cache, a key is allocated to a list of nodes with an opaque algorithm. There is no easy way to reverse the computation and generate a key that maps to a particular node. However, Infinispan can generate a sequence of (pseudo-)random keys, see what their primary owner is, and hand them out to the application when it needs a key mapping to a particular node.

The following code snippet shows how to obtain and use a reference to this service.

```java
// 1. Obtain a reference to a cache
Cache cache = ...
Address address = cache.getCacheManager().getAddress();

// 2. Create the affinity service
KeyAffinityService keyAffinityService = KeyAffinityServiceFactory.newLocalKeyAffinityService(
      cache,
      new RndKeyGenerator(),
      Executors.newSingleThreadExecutor(),
      100);

// 3. Obtain a key for which the local node is the primary owner
Object localKey = keyAffinityService.getKeyForAddress(address);

// 4. Insert the key in the cache
cache.put(localKey, "yourValue");
```

The service is started at step 2: after this point it uses the supplied Executor to generate and queue keys. At step 3, we obtain a key from the service, and at step 4 we use it.

Lifecycle

KeyAffinityService extends Lifecycle, which allows stopping and (re)starting it:

```java
public interface Lifecycle {
   void start();
   void stop();
}
```

The service is instantiated through KeyAffinityServiceFactory.

All the factory methods have an Executor parameter that is used for asynchronous key generation, so that keys are not generated in the caller’s thread.

It is the user’s responsibility to handle the shutdown of this Executor.

The KeyAffinityService, once started, needs to be explicitly stopped.

This stops the background key generation and releases other held resources.

The only situation in which KeyAffinityService stops by itself is when the Cache Manager with which it was registered is shut down.
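The lifecycle rules above (background generation on a caller-supplied Executor, an explicit stop, and caller-managed shutdown of that Executor) can be seen in a small JDK-only model. This is not Infinispan's KeyAffinityService: KeyAffinitySketch, its integer keys, and the ownerOf function (a stand-in for the consistent hash) are all hypothetical.

```java
import java.util.Random;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.function.IntUnaryOperator;

class KeyAffinitySketch {
    private final BlockingQueue<Integer> buffer;
    private final IntUnaryOperator ownerOf;  // key -> node id, stand-in for the consistent hash
    private final int targetNode;
    private final ExecutorService executor;  // owned by the caller, who must shut it down
    private Future<?> generator;

    KeyAffinitySketch(IntUnaryOperator ownerOf, int targetNode, ExecutorService executor, int bufferSize) {
        this.ownerOf = ownerOf;
        this.targetNode = targetNode;
        this.executor = executor;
        this.buffer = new ArrayBlockingQueue<>(bufferSize);
    }

    // Generates random keys on the executor, keeping those owned by targetNode.
    void start() {
        generator = executor.submit(() -> {
            Random random = new Random();
            try {
                while (!Thread.currentThread().isInterrupted()) {
                    int key = random.nextInt(Integer.MAX_VALUE);
                    if (ownerOf.applyAsInt(key) == targetNode) {
                        buffer.put(key); // blocks while the buffer is full
                    }
                }
            } catch (InterruptedException e) {
                // stop() cancelled the task; exit quietly
            }
        });
    }

    int getKeyForNode() {
        try {
            return buffer.take();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("Interrupted while waiting for a key", e);
        }
    }

    // Stops background generation; the executor itself is left to the caller.
    void stop() {
        if (generator != null) {
            generator.cancel(true);
        }
    }

    public static void main(String[] args) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        KeyAffinitySketch service = new KeyAffinitySketch(key -> key % 3, 1, executor, 100);
        service.start();
        int key = service.getKeyForNode();
        service.stop();
        executor.shutdown();
        System.out.println(key + " maps to node " + (key % 3));
    }
}
```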

Topology changes

When the cache topology changes, the ownership of the keys generated by the KeyAffinityService might change.

The key affinity service keeps track of these topology changes and doesn’t return keys that would currently map to a different node, but it won’t do anything about keys generated earlier.

As such, applications should treat KeyAffinityService purely as an optimization, and they should not rely on the location of a generated key for correctness.

In particular, applications should not rely on keys generated by KeyAffinityService for the same address to always be located together.

Co-location of keys is provided only by the Grouping API.

2.2.7. Grouping API

Complementary to the Key affinity service, the Grouping API allows you to co-locate a group of entries on the same nodes, but without being able to select the actual nodes.

By default, the segment of a key is computed using the key’s hashCode().

If you use the Grouping API, Infinispan will compute the segment of the group and use that as the segment of the key.

When the Grouping API is in use, it is important that every node can still compute the owners of every key without contacting other nodes.

For this reason, the group cannot be specified manually.

The group can either be intrinsic to the entry (generated by the key class) or extrinsic (generated by an external function).
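The effect of a group on key placement can be sketched in a few lines: when a group is present, the segment is derived from the group rather than from the key. This is a hypothetical model that uses hashCode() modulo numSegments instead of Infinispan's hashing, and a made-up user@office key format where the office is the group.

```java
import java.util.function.Function;

class GroupingSketch {
    // If the key has a group, the segment comes from the group, otherwise from the key.
    // Every node can compute this locally because the group is a pure function of the key.
    static <K> int segmentOf(K key, Function<K, String> groupOf, int numSegments) {
        String group = groupOf.apply(key);
        Object hashed = (group != null) ? group : key;
        return Math.floorMod(hashed.hashCode(), numSegments);
    }

    public static void main(String[] args) {
        // Hypothetical key format "user@office": the office is the group.
        Function<String, String> office = k -> k.substring(k.indexOf('@') + 1);
        boolean colocated = segmentOf("alice@london", office, 256) == segmentOf("bob@london", office, 256);
        System.out.println(colocated); // true
    }
}
```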

To use the Grouping API, you must enable groups.

```java
Configuration c = new ConfigurationBuilder()
   .clustering().hash().groups().enabled()
   .build();
```

```xml
<distributed-cache>
   <groups enabled="true"/>
</distributed-cache>
```

If you have control of the key class (you can alter the class definition, it’s not part of an unmodifiable library), then we recommend using an intrinsic group.

The intrinsic group is specified by adding the @Group annotation to a method, for example:

```java
import org.infinispan.distribution.group.Group;

class User {
   ...
   String office;
   ...

   public int hashCode() {
      // Defines the hash for the key, normally used to determine location
      ...
   }

   // Override the location by specifying a group
   // All keys in the same group end up with the same owners
   @Group
   public String getOffice() {
      return office;
   }
}
```

The group method must return a String.

If you don’t have control over the key class, or the determination of the group is an orthogonal concern to the key class, we recommend using an extrinsic group.

An extrinsic group is specified by implementing the Grouper interface.

```java
public interface Grouper<T> {
   String computeGroup(T key, String group);

   Class<T> getKeyType();
}
```

If multiple Grouper classes are configured for the same key type, all of them will be called, receiving the value computed by the previous one.

If the key class also has a @Group annotation, the first Grouper will receive the group computed by the annotated method.

This allows you even greater control over the group when using an intrinsic group.
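The chaining rule can be modelled with plain functions. In this hypothetical sketch each BiFunction plays the role of a Grouper's computeGroup(key, group): the first one receives the value of the @Group method (or null when there is none), and each later one receives the result of the previous one.

```java
import java.util.List;
import java.util.function.BiFunction;

class GrouperChainSketch {
    // Hypothetical model of chained groupers, not the Grouper interface itself.
    static <K> String computeGroup(K key, String annotatedGroup,
                                   List<BiFunction<K, String, String>> groupers) {
        String group = annotatedGroup; // @Group value, or null
        for (BiFunction<K, String, String> grouper : groupers) {
            group = grouper.apply(key, group);
        }
        return group;
    }

    public static void main(String[] args) {
        BiFunction<String, String, String> upperCase = (key, group) -> group.toUpperCase();
        BiFunction<String, String, String> region = (key, group) -> group + "-eu";
        // "london" comes from a @Group method; both groupers refine it in order.
        System.out.println(computeGroup("alice", "london", List.of(upperCase, region))); // LONDON-eu
    }
}
```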

Grouper implementation

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

import org.infinispan.distribution.group.Grouper;

public class KXGrouper implements Grouper<String> {

   // The pattern requires a String key, of length 2, where the first character is
   // "k" and the second character is a digit. We take that digit, and perform
   // modular arithmetic on it to assign it to group "0" or group "1".
   private static final Pattern kPattern = Pattern.compile("(^k)(\\d)$");

   public String computeGroup(String key, String group) {
      Matcher matcher = kPattern.matcher(key);
      if (matcher.matches()) {
         String g = Integer.parseInt(matcher.group(2)) % 2 + "";
         return g;
      } else {
         return null;
      }
   }

   public Class<String> getKeyType() {
      return String.class;
   }
}
```

Grouper implementations must be registered explicitly in the cache configuration.

If you are configuring Infinispan programmatically:

```java
Configuration c = new ConfigurationBuilder()
   .clustering().hash().groups().enabled().addGrouper(new KXGrouper())
   .build();
```

Or, if you are using XML:

```xml
<distributed-cache>
   <groups enabled="true">
      <grouper class="com.example.KXGrouper" />
   </groups>
</distributed-cache>
```

Advanced API

AdvancedCache has two group-specific methods:

- getGroup(groupName) retrieves all keys in the cache that belong to a group.
- removeGroup(groupName) removes all the keys in the cache that belong to a group.

Both methods iterate over the entire data container and store (if present), so they can be slow when a cache contains lots of small groups.

2.3. Invalidation caches

Invalidation cache mode in Infinispan is designed to optimize systems that perform high volumes of read operations to a shared permanent data store. You can use invalidation mode to reduce the number of database writes when state changes occur.

Invalidation cache mode is deprecated for Infinispan remote deployments. Use invalidation cache mode with embedded caches that are stored in shared cache stores only.

Invalidation cache mode is effective only when you have a permanent data store, such as a database, and are only using Infinispan as an optimization in a read-heavy system to prevent hitting the database for every read.

When a cache is configured for invalidation, each data change in a cache triggers a message to other caches in the cluster, informing them that their data is now stale and should be removed from memory. Invalidation messages remove stale values from other nodes' memory. The messages are very small compared to replicating the entire value, and other caches in the cluster look up modified data lazily, only when needed. The update to the shared store is typically handled by user application code or Hibernate.

Sometimes the application reads a value from the external store and wants to write it to the local cache, without removing it from the other nodes.

To do this, it must call Cache.putForExternalRead(key, value) instead of Cache.put(key, value).
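A minimal model of the behaviour described above, assuming a shared store that every node can read: put() invalidates the other nodes' in-memory copies, reads load lazily from the store, and putForExternalRead() caches a value locally without invalidating anyone. InvalidationNode is hypothetical, and the sketch assumes putForExternalRead leaves an existing entry untouched.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

class InvalidationNode {
    // Hypothetical model of invalidation mode, not Infinispan's implementation.
    // "sharedStore" stands in for the database that every node can read.
    final Map<String, String> memory = new HashMap<>();
    private final Map<String, String> sharedStore;
    private final List<InvalidationNode> cluster;

    InvalidationNode(Map<String, String> sharedStore, List<InvalidationNode> cluster) {
        this.sharedStore = sharedStore;
        this.cluster = cluster;
        cluster.add(this);
    }

    // Like Cache.put(): store locally, then tell every other node to drop its copy.
    // Updating sharedStore is left to application code.
    void put(String key, String value) {
        memory.put(key, value);
        for (InvalidationNode other : cluster) {
            if (other != this) {
                other.memory.remove(key); // the invalidation message
            }
        }
    }

    // Like Cache.putForExternalRead(): cache locally only, no invalidation, no overwrite.
    void putForExternalRead(String key, String value) {
        memory.putIfAbsent(key, value);
    }

    // Reads are local; a miss loads the value lazily from the shared store.
    String get(String key) {
        String value = memory.get(key);
        if (value == null) {
            value = sharedStore.get(key);
            if (value != null) {
                putForExternalRead(key, value);
            }
        }
        return value;
    }

    public static void main(String[] args) {
        Map<String, String> database = new HashMap<>(Map.of("k", "v1"));
        List<InvalidationNode> cluster = new ArrayList<>();
        InvalidationNode a = new InvalidationNode(database, cluster);
        InvalidationNode b = new InvalidationNode(database, cluster);
        b.get("k");                     // b caches v1
        database.put("k", "v2");        // the application updates the database...
        a.put("k", "v2");               // ...and the cache, which invalidates b
        System.out.println(b.get("k")); // v2, reloaded from the database
    }
}
```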

Invalidation mode is suitable only for shared stores where all nodes can access the same data. Using invalidation mode without a persistent store is impractical, as updated values need to be read from a shared store for consistency across nodes. Never use invalidation mode with a local, non-shared, cache store. The invalidation message will not remove entries in the local store, and some nodes will keep seeing the stale value.

An invalidation cache can also be configured with a special cache loader, ClusterLoader.

When ClusterLoader is enabled, read operations that do not find the key on the local node will request it from all the other nodes first, and store it in memory locally.

This can lead to storing stale values, so only use it if you have a high tolerance for stale values.

When synchronous, a write operation blocks until all nodes in the cluster have evicted the stale value. When asynchronous, the originator broadcasts invalidation messages but does not wait for responses. That means other nodes still see the stale value for a while after the write completed on the originator.

Transactions can be used to batch the invalidation messages. Transactions acquire the key lock on the primary owner.

With pessimistic locking, each write triggers a lock message, which is broadcast to all the nodes. During transaction commit, the originator broadcasts a one-phase prepare message (optionally fire-and-forget) which invalidates all affected keys and releases the locks.

With optimistic locking, the originator broadcasts a prepare message, a commit message, and an unlock message (optional). Either the one-phase prepare or the unlock message is fire-and-forget, and the last message always releases the locks.

2.4. Asynchronous replication

All clustered cache modes can be configured to use asynchronous communications with the mode="ASYNC" attribute on the <replicated-cache/>, <distributed-cache/>, or <invalidation-cache/> element.

With asynchronous communications, the originator node does not receive any acknowledgement from the other nodes about the status of the operation, so there is no way to check if it succeeded on other nodes.

We do not recommend asynchronous communications in general, as they can cause inconsistencies in the data, and the results are hard to reason about. Nevertheless, sometimes speed is more important than consistency, and the option is available for those cases.

Asynchronous API

The Asynchronous API allows you to use synchronous communications, but without blocking the user thread.

There is one caveat: the asynchronous operations do NOT preserve the program order. If a thread calls cache.putAsync(k, v1); cache.putAsync(k, v2), the final value of k may be either v1 or v2. The advantage over using asynchronous communications is that the final value can’t be v1 on one node and v2 on another.
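When program order matters, one option is to start the second write only after the first completes. The sketch below assumes an asynchronous put that returns a CompletableFuture (recent Infinispan versions declare putAsync that way, but check the API of your version); here putAsync is a hypothetical JDK-only stand-in backed by a ConcurrentHashMap.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

class AsyncOrderSketch {
    static final ConcurrentMap<String, String> data = new ConcurrentHashMap<>();

    // Hypothetical stand-in for an asynchronous put that completes with the previous value.
    static CompletableFuture<String> putAsync(String key, String value) {
        return CompletableFuture.supplyAsync(() -> data.put(key, value));
    }

    // Both writes are in flight at once: either may be applied last.
    static void unordered() {
        CompletableFuture.allOf(putAsync("k", "v1"), putAsync("k", "v2")).join();
    }

    // The second write starts only after the first one has completed.
    static void ordered() {
        putAsync("k", "v1").thenCompose(previous -> putAsync("k", "v2")).join();
    }

    public static void main(String[] args) {
        unordered();
        System.out.println("unordered: " + data.get("k")); // v1 or v2
        ordered();
        System.out.println("ordered: " + data.get("k"));   // always v2
    }
}
```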

2.4.1. Return values with asynchronous replication

Because the Cache interface extends java.util.Map, write methods like put(key, value) and remove(key) return the previous value by default.

In some cases, the return value may not be correct:

1. When using AdvancedCache.withFlags() with Flag.IGNORE_RETURN_VALUE, Flag.SKIP_REMOTE_LOOKUP, or Flag.SKIP_CACHE_LOAD.
2. When the cache is configured with unreliable-return-values="true".
3. When using asynchronous communications.
4. When there are multiple concurrent writes to the same key, and the cache topology changes. The topology change will make Infinispan retry the write operations, and a retried operation’s return value is not reliable.

Transactional caches return the correct previous value in cases 3 and 4. However, transactional caches also have a gotcha: in distributed mode, the read-committed isolation level is implemented as repeatable-read. That means this example of "double-checked locking" won’t work:

```java
Cache<String, Integer> cache = ...
TransactionManager tm = ...
tm.begin();
try {
   Integer v1 = cache.get(k);
   // Increment the value
   Integer v2 = cache.put(k, v1 + 1);
   if (Objects.equals(v1, v2)) {
      // success
   } else {
      // retry
   }
} finally {
   tm.commit();
}
```

The correct way to implement this is to use cache.getAdvancedCache().withFlags(Flag.FORCE_WRITE_LOCK).get(k).

In caches with optimistic locking, writes can also return stale previous values. Write skew checks can avoid stale previous values.

2.5. Configuring initial cluster size

Infinispan handles cluster topology changes dynamically. This means that nodes do not need to wait for other nodes to join the cluster before Infinispan initializes the caches.

If your applications require a specific number of nodes in the cluster before caches start, you can configure the initial cluster size as part of the transport.
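Conceptually, an initial cluster size is a countdown with a timeout. The JDK-only sketch below models it with a CountDownLatch; the class and method names are hypothetical, and Infinispan's transport implements this differently.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

class InitialClusterSizeSketch {
    // Startup proceeds only after initialClusterSize members have joined,
    // or gives up when the timeout elapses first.
    private final CountDownLatch pendingMembers;

    InitialClusterSizeSketch(int initialClusterSize) {
        pendingMembers = new CountDownLatch(initialClusterSize);
    }

    // Called for every node that joins the cluster.
    void onMemberJoined() {
        pendingMembers.countDown();
    }

    // Returns true if the cluster reached its initial size before the timeout.
    boolean awaitInitialCluster(long timeout, TimeUnit unit) {
        try {
            return pendingMembers.await(timeout, unit);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        InitialClusterSizeSketch startup = new InitialClusterSizeSketch(4);
        startup.onMemberJoined(); // only 2 of the 4 expected nodes join
        startup.onMemberJoined();
        boolean ready = startup.awaitInitialCluster(100, TimeUnit.MILLISECONDS);
        System.out.println(ready ? "starting caches" : "timed out: caches do not start");
    }
}
```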

1. Open your Infinispan configuration for editing.
2. Set the minimum number of nodes required before caches start with the initial-cluster-size attribute or initialClusterSize() method.
3. Set the timeout, in milliseconds, with the initial-cluster-timeout attribute or initialClusterTimeout() method. If the cluster does not reach the initial size before the timeout expires, the Cache Manager does not start.
4. Save and close your Infinispan configuration.

Initial cluster size configuration

```xml
<infinispan>
  <cache-container>
    <transport initial-cluster-size="4"
               initial-cluster-timeout="30s" />
  </cache-container>
</infinispan>
```

```json
{
  "infinispan" : {
    "cache-container" : {
      "transport" : {
        "initial-cluster-size" : "4",
        "initial-cluster-timeout" : "30000"
      }
    }
  }
}
```

```yaml
infinispan:
  cacheContainer:
    transport:
      initialClusterSize: "4"
      initialClusterTimeout: "30000"
```

```java
GlobalConfigurationBuilder global = GlobalConfigurationBuilder.defaultClusteredBuilder();
global.transport()
      .initialClusterSize(4)
      .initialClusterTimeout(30000, TimeUnit.MILLISECONDS);
GlobalConfiguration config = global.build();
```

3. Infinispan cache configuration

Cache configuration controls how Infinispan stores your data.

As part of your cache configuration, you declare the cache mode you want to use. For instance, you can configure Infinispan clusters to use replicated caches or distributed caches.

Your configuration also defines the characteristics of your caches and enables the Infinispan capabilities that you want to use when handling data. For instance, you can configure how Infinispan encodes entries in your caches, whether replication requests happen synchronously or asynchronously between nodes, if entries are mortal or immortal, and so on.

3.1. Declarative cache configuration

You can configure caches declaratively, in XML, JSON, and YAML format, according to the Infinispan schema.

Declarative cache configuration has the following advantages over programmatic configuration:

- Portability: Define each configuration in a standalone file that you can use to create embedded and remote caches. You can also use declarative configuration to create caches with Infinispan Operator for clusters running on Kubernetes.
- Simplicity: Keep markup languages separate from programming languages. For example, to create remote caches it is generally better to not add complex XML directly to Java code.

Infinispan Server configuration extends

To dynamically synchronize remote caches across Infinispan clusters, create them at runtime.

Wherever a time quantity, such as a timeout or an interval, is specified within a declarative configuration, it is possible to describe it using time units:

Examples:
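The XML and JSON examples in this guide show the same values written both ways: 5s and 5000, 1m and 60000, 30s and 30000. The hypothetical parser below converts such quantities to milliseconds. The set of supported suffixes (ms, s, m, h, d) and the rule that a bare number means milliseconds are assumptions for illustration, not a description of Infinispan's parser.

```java
import java.util.concurrent.TimeUnit;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class TimeQuantity {
    // Assumed syntax: digits followed by an optional unit suffix.
    private static final Pattern QUANTITY = Pattern.compile("(\\d+)(ms|s|m|h|d)?");

    static long toMillis(String text) {
        Matcher m = QUANTITY.matcher(text.trim());
        if (!m.matches()) {
            throw new IllegalArgumentException("Not a time quantity: " + text);
        }
        long amount = Long.parseLong(m.group(1));
        String unit = m.group(2) == null ? "ms" : m.group(2); // bare number = milliseconds
        switch (unit) {
            case "s": return TimeUnit.SECONDS.toMillis(amount);
            case "m": return TimeUnit.MINUTES.toMillis(amount);
            case "h": return TimeUnit.HOURS.toMillis(amount);
            case "d": return TimeUnit.DAYS.toMillis(amount);
            default:  return amount; // "ms"
        }
    }

    public static void main(String[] args) {
        for (String text : new String[] {"5s", "1m", "30s", "1s", "60000"}) {
            System.out.println(text + " -> " + toMillis(text) + " ms");
        }
    }
}
```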

3.1.1. Cache configuration

You can create declarative cache configuration in XML, JSON, and YAML format.

All declarative caches must conform to the Infinispan schema. Configuration in JSON format must follow the structure of an XML configuration: elements correspond to objects and attributes correspond to fields.

Infinispan restricts characters to a maximum of

A file system might limit the length of a file name, so ensure that a cache’s name does not exceed this limitation. If a cache name exceeds a file system’s naming limitation, general operations or initializing operations on that cache might fail. Write succinct file names.

Distributed caches

<distributed-cache owners="2"

segments="256"

capacity-factor="1.0"

l1-lifespan="5s"

mode="SYNC"

statistics="true">

<encoding media-type="application/x-protostream"/>

<locking isolation="REPEATABLE_READ"/>

<transaction mode="FULL_XA"

locking="OPTIMISTIC"/>

<expiration lifespan="5s"

max-idle="1s" />

<memory max-count="1000000"

when-full="REMOVE"/>

<indexing enabled="true"

storage="local-heap">

<index-reader refresh-interval="1s"/>

<indexed-entities>

<indexed-entity>org.infinispan.Person</indexed-entity>

</indexed-entities>

</indexing>

<partition-handling when-split="ALLOW_READ_WRITES"

merge-policy="PREFERRED_NON_NULL"/>

<persistence passivation="false">

<!-- Persistent storage configuration. -->

</persistence>

</distributed-cache>

{

"distributed-cache": {

"mode": "SYNC",

"owners": "2",

"segments": "256",

"capacity-factor": "1.0",

"l1-lifespan": "5000",

"statistics": "true",

"encoding": {

"media-type": "application/x-protostream"

},

"locking": {

"isolation": "REPEATABLE_READ"

},

"transaction": {

"mode": "FULL_XA",

"locking": "OPTIMISTIC"

},

"expiration" : {

"lifespan" : "5000",

"max-idle" : "1000"

},

"memory": {

"max-count": "1000000",

"when-full": "REMOVE"

},

"indexing" : {

"enabled" : true,

"storage" : "local-heap",

"index-reader" : {

"refresh-interval" : "1000"

},

"indexed-entities": [

"org.infinispan.Person"

]

},

"partition-handling" : {

"when-split" : "ALLOW_READ_WRITES",

"merge-policy" : "PREFERRED_NON_NULL"

},

"persistence" : {

"passivation" : false

}

}

}

distributedCache:
  mode: "SYNC"
  owners: "2"
  segments: "256"
  capacityFactor: "1.0"
  l1Lifespan: "5000"
  statistics: "true"
  encoding:
    mediaType: "application/x-protostream"
  locking:
    isolation: "REPEATABLE_READ"
  transaction:
    mode: "FULL_XA"
    locking: "OPTIMISTIC"
  expiration:
    lifespan: "5000"
    maxIdle: "1000"
  memory:
    maxCount: "1000000"
    whenFull: "REMOVE"
  indexing:
    enabled: "true"
    storage: "local-heap"
    indexReader:
      refreshInterval: "1000"
    indexedEntities:
      - "org.infinispan.Person"
  partitionHandling:
    whenSplit: "ALLOW_READ_WRITES"
    mergePolicy: "PREFERRED_NON_NULL"
  persistence:
    passivation: "false"
    # Persistent storage configuration.

Replicated caches

<replicated-cache segments="256"

mode="SYNC"

statistics="true">

<encoding media-type="application/x-protostream"/>

<locking isolation="REPEATABLE_READ"/>

<transaction mode="FULL_XA"

locking="OPTIMISTIC"/>

<expiration lifespan="5s"

max-idle="1s" />

<memory max-count="1000000"

when-full="REMOVE"/>

<indexing enabled="true"

storage="local-heap">

<index-reader refresh-interval="1s"/>

<indexed-entities>

<indexed-entity>org.infinispan.Person</indexed-entity>

</indexed-entities>

</indexing>

<partition-handling when-split="ALLOW_READ_WRITES"

merge-policy="PREFERRED_NON_NULL"/>

<persistence passivation="false">

<!-- Persistent storage configuration. -->

</persistence>

</replicated-cache>

{

"replicated-cache": {

"mode": "SYNC",

"segments": "256",

"statistics": "true",

"encoding": {

"media-type": "application/x-protostream"

},

"locking": {

"isolation": "REPEATABLE_READ"

},

"transaction": {

"mode": "FULL_XA",

"locking": "OPTIMISTIC"

},

"expiration" : {

"lifespan" : "5000",

"max-idle" : "1000"

},

"memory": {

"max-count": "1000000",

"when-full": "REMOVE"

},

"indexing" : {

"enabled" : true,

"storage" : "local-heap",

"index-reader" : {

"refresh-interval" : "1000"

},

"indexed-entities": [

"org.infinispan.Person"

]

},

"partition-handling" : {

"when-split" : "ALLOW_READ_WRITES",

"merge-policy" : "PREFERRED_NON_NULL"

},

"persistence" : {

"passivation" : false

}

}

}

replicatedCache:
  mode: "SYNC"
  segments: "256"
  statistics: "true"
  encoding:
    mediaType: "application/x-protostream"
  locking:
    isolation: "REPEATABLE_READ"
  transaction:
    mode: "FULL_XA"
    locking: "OPTIMISTIC"
  expiration:
    lifespan: "5000"
    maxIdle: "1000"
  memory:
    maxCount: "1000000"
    whenFull: "REMOVE"
  indexing:
    enabled: "true"
    storage: "local-heap"
    indexReader:
      refreshInterval: "1000"
    indexedEntities:
      - "org.infinispan.Person"
  partitionHandling:
    whenSplit: "ALLOW_READ_WRITES"
    mergePolicy: "PREFERRED_NON_NULL"
  persistence:
    passivation: "false"
    # Persistent storage configuration.

Multiple caches

<infinispan

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="urn:infinispan:config:16.2 https://infinispan.org/schemas/infinispan-config-16.2.xsd

urn:infinispan:server:16.2 https://infinispan.org/schemas/infinispan-server-16.2.xsd"

xmlns="urn:infinispan:config:16.2"

xmlns:server="urn:infinispan:server:16.2">

<cache-container name="default"

statistics="true">

<distributed-cache name="mycacheone"

mode="ASYNC"

statistics="true">

<encoding media-type="application/x-protostream"/>

<expiration lifespan="5m"/>

<memory max-size="400MB"

when-full="REMOVE"/>

</distributed-cache>

<distributed-cache name="mycachetwo"

mode="SYNC"

statistics="true">

<encoding media-type="application/x-protostream"/>

<expiration lifespan="5m"/>

<memory max-size="400MB"

when-full="REMOVE"/>

</distributed-cache>

</cache-container>

</infinispan>

{

"infinispan" : {

"cache-container" : {

"name" : "default",

"statistics" : "true",

"caches" : {

"mycacheone" : {

"distributed-cache" : {

"mode": "ASYNC",

"statistics": "true",

"encoding": {

"media-type": "application/x-protostream"

},

"expiration" : {

"lifespan" : "300000"

},

"memory": {

"max-size": "400MB",

"when-full": "REMOVE"

}

}

},

"mycachetwo" : {

"distributed-cache" : {

"mode": "SYNC",

"statistics": "true",

"encoding": {

"media-type": "application/x-protostream"

},

"expiration" : {

"lifespan" : "300000"

},

"memory": {

"max-size": "400MB",

"when-full": "REMOVE"

}

}

}

}

}

}

}

infinispan:
  cacheContainer:
    name: "default"
    statistics: "true"
    caches:
      mycacheone:
        distributedCache:
          mode: "ASYNC"
          statistics: "true"
          encoding:
            mediaType: "application/x-protostream"
          expiration:
            lifespan: "300000"
          memory:
            maxSize: "400MB"
            whenFull: "REMOVE"
      mycachetwo:
        distributedCache:
          mode: "SYNC"
          statistics: "true"
          encoding:
            mediaType: "application/x-protostream"
          expiration:
            lifespan: "300000"
          memory:
            maxSize: "400MB"
            whenFull: "REMOVE"

3.2. Adding cache templates

The Infinispan schema includes *-cache-configuration elements that you can use to create templates.

You can then create caches on demand, using the same configuration multiple times.

- Open your Infinispan configuration for editing.

- Add the cache configuration with the appropriate *-cache-configuration element or object to the Cache Manager.

- Save and close your Infinispan configuration.

Cache template example

<infinispan>

<cache-container>

<distributed-cache-configuration name="my-dist-template"

mode="SYNC"

statistics="true">

<encoding media-type="application/x-protostream"/>

<memory max-count="1000000"

when-full="REMOVE"/>

<expiration lifespan="5s"

max-idle="1s"/>

</distributed-cache-configuration>

</cache-container>

</infinispan>

{

"infinispan" : {

"cache-container" : {

"distributed-cache-configuration" : {

"name" : "my-dist-template",

"mode": "SYNC",

"statistics": "true",

"encoding": {

"media-type": "application/x-protostream"

},

"expiration" : {

"lifespan" : "5000",

"max-idle" : "1000"

},

"memory": {

"max-count": "1000000",

"when-full": "REMOVE"

}

}

}

}

}

infinispan:
  cacheContainer:
    distributedCacheConfiguration:
      name: "my-dist-template"
      mode: "SYNC"
      statistics: "true"
      encoding:
        mediaType: "application/x-protostream"
      expiration:
        lifespan: "5000"
        maxIdle: "1000"
      memory:
        maxCount: "1000000"
        whenFull: "REMOVE"

3.2.1. Creating caches from templates

Create caches from configuration templates.

Templates for remote caches are available from the Cache templates menu in Infinispan Console.

- Add at least one cache template to the Cache Manager.

- Open your Infinispan configuration for editing.

- Specify the template from which the cache inherits with the configuration attribute or field.

- Save and close your Infinispan configuration.

Cache configuration inherited from a template

<distributed-cache configuration="my-dist-template" />

{

"distributed-cache": {

"configuration": "my-dist-template"

}

}

distributedCache:
  configuration: "my-dist-template"

3.2.2. Cache template inheritance

Cache configuration templates can inherit from other templates to extend and override settings.

Cache template inheritance is hierarchical. For a child configuration template to inherit from a parent, you must include it after the parent template.

Additionally, template inheritance is additive for elements that have multiple values. A cache that inherits from another template merges the values from that template, and the values that the cache declares itself can override inherited properties.
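The merge rules described above can be modeled with plain Java collections. This is a simplified sketch, not how Infinispan merges configurations internally: the TemplateInheritance class is hypothetical, settings are flattened into dotted string keys such as expiration.lifespan, and multi-valued elements are modeled as sets. Single-valued settings start from the parent and let the child override them; multi-valued settings combine the values of both.

```java
import java.util.LinkedHashMap;
import java.util.LinkedHashSet;
import java.util.Map;
import java.util.Set;

public final class TemplateInheritance {
    private TemplateInheritance() {}

    // Single-valued settings: copy the parent, then let the child's values win on conflict.
    public static Map<String, String> inheritSettings(Map<String, String> parent, Map<String, String> child) {
        Map<String, String> merged = new LinkedHashMap<>(parent);
        merged.putAll(child);
        return merged;
    }

    // Multi-valued settings: inheritance is additive, so both sets of values are kept.
    public static Set<String> inheritValues(Set<String> parent, Set<String> child) {
        Set<String> merged = new LinkedHashSet<>(parent);
        merged.addAll(child);
        return merged;
    }

    public static void main(String[] args) {
        // Mirrors "base-template" from the template inheritance example that follows.
        Map<String, String> base = new LinkedHashMap<>();
        base.put("expiration.lifespan", "5000");

        // Mirrors the settings declared directly on "extended-template".
        Map<String, String> extended = new LinkedHashMap<>();
        extended.put("encoding.media-type", "application/x-protostream");
        extended.put("expiration.lifespan", "10000");
        extended.put("expiration.max-idle", "1000");

        System.out.println(inheritSettings(base, extended));
    }
}
```

In this model the extended template ends up with a 10000 ms lifespan, a 1000 ms max-idle, and the protostream encoding, while the parent map itself is left unchanged.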

Template inheritance example

<infinispan>

<cache-container>

<distributed-cache-configuration name="base-template">

<expiration lifespan="5s"/>

</distributed-cache-configuration>

<distributed-cache-configuration name="extended-template"

configuration="base-template">

<encoding media-type="application/x-protostream"/>

<expiration lifespan="10s"

max-idle="1s"/>

</distributed-cache-configuration>

</cache-container>

</infinispan>

{

"infinispan" : {

"cache-container" : {

"caches" : {

"base-template" : {

"distributed-cache-configuration" : {

"expiration" : {

"lifespan" : "5000"

}

}

},

"extended-template" : {

"distributed-cache-configuration" : {

"configuration" : "base-template",

"encoding": {

"media-type": "application/x-protostream"

},

"expiration" : {

"lifespan" : "10000",

"max-idle" : "1000"

}

}

}

}

}

}

}

infinispan:
  cacheContainer:
    caches:
      base-template:
        distributedCacheConfiguration:
          expiration:
            lifespan: "5000"
      extended-template:
        distributedCacheConfiguration:
          configuration: "base-template"
          encoding:
            mediaType: "application/x-protostream"
          expiration:
            lifespan: "10000"
            maxIdle: "1000"

3.2.3. Cache template wildcards

You can add wildcards to cache configuration template names. If you then create caches where the name matches the wildcard, Infinispan applies the configuration template.

Infinispan throws exceptions if cache names match more than one wildcard.
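The wildcard rule, including the error for ambiguous matches, can be sketched in plain Java. This is a hypothetical illustration rather than Infinispan's matching code: the TemplateWildcards class and its methods are invented for this example, and it assumes that "*" matches any sequence of characters while every other character in a template name matches literally.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

public final class TemplateWildcards {
    private TemplateWildcards() {}

    // Turns a template name such as "async-dist-cache-*" into a regular expression:
    // each "*" matches any sequence of characters, everything else is quoted literally.
    public static Pattern toPattern(String templateName) {
        String[] parts = templateName.split("\\*", -1);
        StringBuilder regex = new StringBuilder();
        for (int i = 0; i < parts.length; i++) {
            if (i > 0) {
                regex.append(".*");
            }
            regex.append(Pattern.quote(parts[i]));
        }
        return Pattern.compile(regex.toString());
    }

    // Returns the single wildcard template that matches cacheName, or null if none matches.
    // Matching more than one wildcard is treated as an error, as described above.
    public static String resolve(String cacheName, List<String> templateNames) {
        List<String> matches = new ArrayList<>();
        for (String template : templateNames) {
            if (template.contains("*") && toPattern(template).matcher(cacheName).matches()) {
                matches.add(template);
            }
        }
        if (matches.size() > 1) {
            throw new IllegalStateException(
                    "Cache name '" + cacheName + "' matches more than one wildcard: " + matches);
        }
        return matches.isEmpty() ? null : matches.get(0);
    }

    public static void main(String[] args) {
        List<String> templates = List.of("async-dist-cache-*", "my-dist-template");
        System.out.println(resolve("async-dist-cache-prod", templates)); // async-dist-cache-*
        System.out.println(resolve("orders", templates));                // null
    }
}
```

Quoting the literal parts with Pattern.quote keeps characters such as "." in template names from being interpreted as regular-expression syntax.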

Template wildcard example

<infinispan>

<cache-container>

<distributed-cache-configuration name="async-dist-cache-*"

mode="ASYNC"

statistics="true">

<encoding media-type="application/x-protostream"/>

</distributed-cache-configuration>

</cache-container>

</infinispan>

{

"infinispan" : {

"cache-container" : {

"distributed-cache-configuration" : {

"name" : "async-dist-cache-*",

"mode": "ASYNC",

"statistics": "true",

"encoding": {

"media-type": "application/x-protostream"

}

}

}

}

}

infinispan:
  cacheContainer:
    distributedCacheConfiguration:
      name: "async-dist-cache-*"
      mode: "ASYNC"
      statistics: "true"
      encoding:
        mediaType: "application/x-protostream"

Using the preceding example, if you create a cache named "async-dist-cache-prod", Infinispan uses the configuration from the async-dist-cache-* template.

3.2.4. Cache templates from multiple XML files

Split cache configuration templates into multiple XML files for granular flexibility and reference them with XML inclusions (XInclude).

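Infinispan provides minimal support for the XInclude specification, so not all XInclude features are available.

You must also add the xmlns:xi="http://www.w3.org/2001/XInclude" namespace to your configuration, as in the following example: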

<infinispan xmlns:xi="http://www.w3.org/2001/XInclude">

<cache-container default-cache="cache-1">

<!-- References files that contain cache configuration templates. -->

<xi:include href="distributed-cache-template.xml" />

<xi:include href="replicated-cache-template.xml" />

</cache-container>

</infinispan>

Infinispan also provides an infinispan-config-fragment-16.2.xsd schema that you can use with configuration fragments.

<local-cache xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="urn:infinispan:config:16.2 https://infinispan.org/schemas/infinispan-config-fragment-16.2.xsd"

xmlns="urn:infinispan:config:16.2"

name="mycache"/>

3.3. Cache aliases

Add aliases to caches to access them using different names.


<distributed-cache name="acache" aliases="0 anothername"/>

{

"distributed-cache": {

"aliases": ["0", "anothername"]

}

}

distributedCache:
  aliases:
    - "0"
    - "anothername"

3.4. Creating remote caches

When you create remote caches at runtime, Infinispan Server synchronizes your configuration across the cluster so that all nodes have a copy. For this reason, you should always create remote caches dynamically with one of the following mechanisms:

-

Infinispan Console

-

Infinispan Command Line Interface (CLI)

-

Hot Rod or HTTP clients

3.4.1. Default Cache Manager

Infinispan Server provides a default Cache Manager that controls the lifecycle of remote caches. Starting Infinispan Server automatically instantiates the Cache Manager so you can create and delete remote caches and other resources like Protobuf schemas.

After you start Infinispan Server and add user credentials, you can view details about the Cache Manager and get cluster information from Infinispan Console.

- Open 127.0.0.1:11222 in any browser.

You can also get information about the Cache Manager through the Command Line Interface (CLI) or REST API:

- CLI

Run the describe command in the default container.

[//containers/default]> describe

- REST

Open 127.0.0.1:11222/rest/v2/container/ in any browser.

Default Cache Manager configuration

<infinispan>

<!-- Creates a Cache Manager named "default" and enables metrics. -->

<cache-container name="default"

statistics="true">

<!-- Adds cluster transport that uses the default JGroups TCP stack. -->

<transport cluster="${infinispan.cluster.name:cluster}"

stack="${infinispan.cluster.stack:tcp}"

node-name="${infinispan.node.name:}"/>

<!-- Requires user permission to access caches and perform operations. -->

<security>

<authorization/>

</security>

</cache-container>

</infinispan>

{

"infinispan" : {

"jgroups" : {

"transport" : "org.infinispan.remoting.transport.jgroups.JGroupsTransport"

},

"cache-container" : {

"name" : "default",

"statistics" : "true",

"transport" : {

"cluster" : "cluster",

"node-name" : "",

"stack" : "tcp"

},

"security" : {

"authorization" : {}

}

}

}

}

infinispan:
  jgroups:
    transport: "org.infinispan.remoting.transport.jgroups.JGroupsTransport"
  cacheContainer:
    name: "default"
    statistics: "true"
    transport:
      cluster: "cluster"
      nodeName: ""
      stack: "tcp"
    security:
      authorization: ~

3.4.2. Creating caches with Infinispan Console

Use Infinispan Console to create remote caches in an intuitive visual interface from any web browser.

- Create an Infinispan user with admin permissions.

- Start at least one Infinispan Server instance.

- Have an Infinispan cache configuration.

- Open 127.0.0.1:11222/console/ in any browser.

- Select Create Cache and follow the steps as Infinispan Console guides you through the process.

3.4.3. Creating remote caches with the Infinispan CLI

Use the Infinispan Command Line Interface (CLI) to add remote caches on Infinispan Server.

- Create an Infinispan user with admin permissions.

- Start at least one Infinispan Server instance.

- Have an Infinispan cache configuration.

- Start the CLI.

bin/cli.sh

- Run the connect command and enter your username and password when prompted.

- Use the create cache command to create remote caches. For example, create a distributed cache named "distcache" as follows:

create cache distcache "<distributed-cache />"

You can use any configuration format supported by Infinispan. Create a cache using a JSON configuration as follows:

create cache json '{"distributed-cache":{"mode":"SYNC"}}'

You can also use a configuration stored in an external file. For example, create a cache named "mycache" from a file named mycache.xml as follows:

create cache --file=mycache.xml mycache

- List all remote caches with the ls command.

ls caches
mycache

- View cache configuration with the describe command.

describe caches/mycache

3.4.4. Creating remote caches from Hot Rod clients

Use the Infinispan Hot Rod API to create remote caches on Infinispan Server from Java, C++, .NET/C#, JS clients and more.

This procedure shows you how to use Hot Rod Java clients that create remote caches on first access. You can find code examples for other Hot Rod clients in the Infinispan Tutorials.

- Create an Infinispan user with admin permissions.

- Start at least one Infinispan Server instance.

- Have an Infinispan cache configuration.

- Invoke the remoteCache() method as part of your ConfigurationBuilder.

- Alternatively, set the configuration or configuration_uri properties in the hotrod-client.properties file on your classpath.

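import java.io.File;
import org.infinispan.client.hotrod.configuration.ConfigurationBuilder;

File file = new File("path/to/infinispan.xml");
ConfigurationBuilder builder = new ConfigurationBuilder();
// Creates "another-cache" on first access from an inline XML configuration.
builder.remoteCache("another-cache")
       .configuration("<distributed-cache name=\"another-cache\"/>");
// Creates "my.other.cache" on first access from a configuration file.
builder.remoteCache("my.other.cache")
       .configurationURI(file.toURI());

infinispan.client.hotrod.cache.another-cache.configuration=<distributed-cache name=\"another-cache\"/>
infinispan.client.hotrod.cache.[my.other.cache].configuration_uri=file:///path/to/infinispan.xml

If the name of your remote cache contains the . character, you must enclose it in square brackets when using hotrod-client.properties files.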

3.4.5. Creating remote caches with the REST API

Use the Infinispan REST API to create remote caches on Infinispan Server from any suitable HTTP client.

- Create an Infinispan user with admin permissions.

- Start at least one Infinispan Server instance.

- Have an Infinispan cache configuration.

- Send POST requests to /rest/v2/caches/<cache_name> with the cache configuration in the payload.
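A POST request like this can be sketched with the JDK's java.net.http client (Java 11 or later). This is a minimal example under stated assumptions, not an official client: the server address, the cache name mycache, and the admin/changeme credentials are hypothetical. It also assumes that the REST endpoint accepts BASIC authentication (the curl examples elsewhere in this guide use --digest, which the JDK client does not handle out of the box) and that an XML configuration is accepted with the application/xml content type.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

public final class CreateCacheOverRest {
    private CreateCacheOverRest() {}

    // Builds a POST /rest/v2/caches/<cacheName> request carrying an XML cache configuration.
    // Assumes cacheName contains only URL-safe characters and that BASIC auth is enabled.
    public static HttpRequest createCacheRequest(String baseUrl, String cacheName,
                                                 String xmlConfig, String user, String password) {
        String credentials = Base64.getEncoder()
                .encodeToString((user + ":" + password).getBytes(StandardCharsets.UTF_8));
        return HttpRequest.newBuilder(URI.create(baseUrl + "/rest/v2/caches/" + cacheName))
                .header("Content-Type", "application/xml")
                .header("Authorization", "Basic " + credentials)
                .POST(HttpRequest.BodyPublishers.ofString(xmlConfig))
                .build();
    }

    public static void main(String[] args) throws Exception {
        // Hypothetical credentials for a user with admin permissions.
        HttpRequest request = createCacheRequest("http://127.0.0.1:11222", "mycache",
                "<distributed-cache mode=\"SYNC\"/>", "admin", "changeme");
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        System.out.println(response.statusCode() + " " + response.body());
    }
}
```

Building the request in a separate method keeps it testable without a running server; only main actually sends it.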

3.5. Creating embedded caches

Infinispan provides an EmbeddedCacheManager API that lets you control both the Cache Manager and embedded cache lifecycles programmatically.

3.5.1. Adding Infinispan to your project

Add Infinispan to your project to create embedded caches in your applications.

- Configure your project to get Infinispan artifacts from the Maven repository.

- Add the infinispan-core artifact as a dependency in your pom.xml as follows:

<dependencies>

<dependency>

<groupId>org.infinispan</groupId>

<artifactId>infinispan-core</artifactId>

</dependency>

</dependencies>

3.5.2. Creating and using embedded caches

Infinispan provides a GlobalConfigurationBuilder API that controls the Cache Manager and a ConfigurationBuilder API that configures caches.

- Add the infinispan-core artifact as a dependency in your pom.xml.

- Initialize a CacheManager.

You must always call the cacheManager.start() method to initialize a CacheManager before you can create caches. Default constructors do this for you, but some overloaded versions of the constructors do not.

Cache Managers are also heavyweight objects, and Infinispan recommends instantiating only one instance per JVM.

- Use the ConfigurationBuilder API to define cache configuration.

- Obtain caches with the getCache(), createCache(), or getOrCreateCache() methods.

Infinispan recommends using the getOrCreateCache() method because it either creates a cache on all nodes or returns an existing cache.

- If necessary, use the PERMANENT flag for caches to survive restarts.

- Stop the CacheManager by calling the cacheManager.stop() method to release JVM resources and gracefully shut down any caches.

import org.infinispan.Cache;
import org.infinispan.commons.api.CacheContainerAdmin;
import org.infinispan.configuration.cache.CacheMode;
import org.infinispan.configuration.cache.ConfigurationBuilder;
import org.infinispan.configuration.global.GlobalConfigurationBuilder;
import org.infinispan.manager.DefaultCacheManager;

// Set up a clustered Cache Manager.
GlobalConfigurationBuilder global = GlobalConfigurationBuilder.defaultClusteredBuilder();
// Initialize the default Cache Manager. This constructor also starts it.
DefaultCacheManager cacheManager = new DefaultCacheManager(global.build());
// Create a distributed cache with synchronous replication.
ConfigurationBuilder builder = new ConfigurationBuilder();
builder.clustering().cacheMode(CacheMode.DIST_SYNC);
// Obtain a volatile cache.
Cache<String, String> cache = cacheManager.administration()
      .withFlags(CacheContainerAdmin.AdminFlag.VOLATILE)
      .getOrCreateCache("myCache", builder.build());
// Stop the Cache Manager.
cacheManager.stop();

getCache() method

Invoke the getCache(String) method to obtain caches, as follows:

Cache<String, String> myCache = manager.getCache("myCache");

The preceding operation creates a cache named myCache, if it does not already exist, and returns it.

Using the getCache() method creates the cache only on the node where you invoke the method. In other words, it performs a local operation that must be invoked on each node across the cluster. Typically, applications deployed across multiple nodes obtain caches during initialization to ensure that caches are symmetric and exist on each node.

createCache() method

Invoke the createCache() method to create caches dynamically across the entire cluster.

Cache<String, String> myCache = manager.administration().createCache("myCache", "myTemplate");

The preceding operation also automatically creates caches on any nodes that subsequently join the cluster.

Caches that you create with the createCache() method are ephemeral by default. If the entire cluster shuts down, the cache is not automatically created again when it restarts.

PERMANENT flag

Use the PERMANENT flag to ensure that caches can survive restarts.

Cache<String, String> myCache = manager.administration().withFlags(AdminFlag.PERMANENT).createCache("myCache", "myTemplate");

For the PERMANENT flag to take effect, you must enable global state and set a configuration storage provider.

For more information about configuration storage providers, see GlobalStateConfigurationBuilder#configurationStorage().

Visit the code tutorials to try examples with embedded Infinispan. See the code tutorial with embedded caches.

3.5.3. Cache API

Infinispan provides a Cache interface that exposes simple methods for adding, retrieving and removing entries, including atomic mechanisms exposed by the JDK’s ConcurrentMap interface. Depending on the cache mode, invoking these methods can trigger additional work, such as replicating an entry to a remote node, or looking up an entry on a remote node or even in a cache store.

For simple usage, using the Cache API should be no different from using the JDK Map API, and hence migrating from simple in-memory caches based on a Map to Infinispan’s Cache should be trivial.

Certain methods exposed in Map have performance consequences when used with Infinispan, such as size(), values(), keySet() and entrySet().

Specific methods on the keySet, values and entrySet are fine to use; see their Javadoc for further details.

Attempting to perform these operations globally could have a large performance impact and become a scalability bottleneck. For this reason, avoid these methods in production code and use them only for informational or debugging purposes.

Using certain flags with the withFlags() method can mitigate some of these concerns; check each method’s documentation for more details.

Beyond simply storing entries, Infinispan’s cache API allows you to attach mortality information to data. For example, simply using put(key, value) creates an immortal entry, that is, an entry that lives in the cache forever, until it is removed (or evicted from memory to prevent running out of memory). If, however, you put data in the cache using put(key, value, lifespan, timeunit), this creates a mortal entry, that is, an entry that has a fixed lifespan and expires after that lifespan.

In addition to lifespan, Infinispan also supports maxIdle as an additional metric for determining expiration. You can use any combination of lifespan and maxIdle values.
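To make the difference between immortal entries, lifespan, and maxIdle concrete, the following plain-Java toy model tracks them with an injectable clock. MortalEntryModel is a hypothetical, single-threaded class for illustration only; it is not how Infinispan implements expiration, which also has to deal with concurrency, clustering, and persistence. It follows the convention of the Infinispan put methods that a negative lifespan or maxIdle means no limit, and an entry expires as soon as either limit has elapsed.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.LongSupplier;

/** Toy model of mortal and immortal entries; negative limits mean "no limit". */
public final class MortalEntryModel<K, V> {
    private static final class Entry<V> {
        final V value;
        final long created;
        final long lifespan;
        final long maxIdle;
        long lastUsed;

        Entry(V value, long now, long lifespan, long maxIdle) {
            this.value = value;
            this.created = now;
            this.lastUsed = now;
            this.lifespan = lifespan;
            this.maxIdle = maxIdle;
        }

        // The entry expires once its lifespan OR its idle time has elapsed.
        boolean isExpired(long now) {
            boolean lifespanElapsed = lifespan >= 0 && now - created >= lifespan;
            boolean idleElapsed = maxIdle >= 0 && now - lastUsed >= maxIdle;
            return lifespanElapsed || idleElapsed;
        }
    }

    private final Map<K, Entry<V>> entries = new HashMap<>();
    private final LongSupplier clock; // current time in milliseconds

    public MortalEntryModel(LongSupplier clock) {
        this.clock = clock;
    }

    // Like put(key, value): creates an immortal entry.
    public void put(K key, V value) {
        put(key, value, -1, -1);
    }

    // Like put(key, value, lifespan, unit, maxIdle, unit), with both limits in milliseconds.
    public void put(K key, V value, long lifespanMillis, long maxIdleMillis) {
        entries.put(key, new Entry<>(value, clock.getAsLong(), lifespanMillis, maxIdleMillis));
    }

    // Reading a live entry counts as an access and resets its idle timer.
    public V get(K key) {
        long now = clock.getAsLong();
        Entry<V> entry = entries.get(key);
        if (entry == null) {
            return null;
        }
        if (entry.isExpired(now)) {
            entries.remove(key);
            return null;
        }
        entry.lastUsed = now;
        return entry.value;
    }
}
```

Injecting the clock as a LongSupplier makes the expiration behavior deterministic to test, because time only advances when the caller says so.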

putForExternalRead operation

Infinispan’s Cache class contains a different 'put' operation called putForExternalRead. This operation is particularly useful when Infinispan is used as a temporary cache for data that is persisted elsewhere. Under heavy read scenarios, contention in the cache should not delay the real transactions at hand, since caching should be an optimization and not something that gets in the way.

To achieve this, putForExternalRead() acts as a put call that only operates if the key is not present in the cache, and fails fast and silently if another thread is trying to store the same key at the same time. In this scenario, caching data is a way to optimize the system, and a failure in caching should not affect the ongoing transaction, which is why failure is handled differently. putForExternalRead() is considered a fast operation because, regardless of whether it succeeds, it doesn’t wait for any locks and so returns to the caller promptly.

To understand how to use this operation, let’s look at a basic example. Imagine a cache of Person instances, each keyed by a PersonId, whose data originates in a separate data store. The following code shows the most common pattern of using putForExternalRead within the context of this example:

// Id of the person to look up, provided by the application
PersonId id = ...;
// Get a reference to the cache where person instances will be stored
Cache<PersonId, Person> cache = ...;
// First, check whether the cache contains the person instance
// associated with the given id
Person cachedPerson = cache.get(id);
if (cachedPerson == null) {
    // The person is not cached yet, so query the data store with the id
    Person person = dataStore.lookup(id);
    // Cache the person along with the id so that future requests can
    // retrieve it from memory rather than going to the data store
    cache.putForExternalRead(id, person);
    // Return the person loaded from the data store to the application
    return person;
} else {
    // The person was found in the cache, so return it to the application
    return cachedPerson;
}

Note that putForExternalRead should never be used as a mechanism to update the cache with a new Person instance originating from application execution (for example, from a transaction that modifies a Person’s address). When updating cached values, use the standard put operation; otherwise you are likely to cache inconsistent data.
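The semantics described for putForExternalRead (store only if absent, never wait for a lock, fail silently) can be modeled with standard java.util.concurrent classes. This is a hypothetical toy model, not Infinispan's implementation: ExternalReadCache and its per-key ReentrantLock map are invented for illustration, and unlike the real method, which returns void, this putForExternalRead returns a boolean so the outcome is observable.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.concurrent.locks.ReentrantLock;

/** Toy model contrasting a regular put with putForExternalRead semantics. */
public final class ExternalReadCache<K, V> {
    private final ConcurrentMap<K, V> data = new ConcurrentHashMap<>();
    private final ConcurrentMap<K, ReentrantLock> locks = new ConcurrentHashMap<>();

    private ReentrantLock lockFor(K key) {
        return locks.computeIfAbsent(key, k -> new ReentrantLock());
    }

    public V get(K key) {
        return data.get(key);
    }

    // Regular put: waits for the key's lock, then always overwrites the value.
    public void put(K key, V value) {
        ReentrantLock lock = lockFor(key);
        lock.lock();
        try {
            data.put(key, value);
        } finally {
            lock.unlock();
        }
    }

    // External-read put: never waits. It gives up silently if another thread holds
    // the key's lock, and it never overwrites an existing value.
    // Returns true if the value was stored (the real Infinispan method returns void).
    public boolean putForExternalRead(K key, V value) {
        ReentrantLock lock = lockFor(key);
        if (!lock.tryLock()) {
            return false; // another writer is active: fail fast instead of blocking
        }
        try {
            return data.putIfAbsent(key, value) == null;
        } finally {
            lock.unlock();
        }
    }
}
```

The model also shows why putForExternalRead is the wrong tool for updates: once a value is present, a second putForExternalRead leaves the old value in place, while put replaces it.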

AdvancedCache API

In addition to the simple Cache interface, Infinispan offers an AdvancedCache interface, geared towards extension authors. The AdvancedCache offers the ability to access certain internal components and to apply flags to alter the default behavior of certain cache methods. The following code snippet depicts how an AdvancedCache can be obtained:

AdvancedCache advancedCache = cache.getAdvancedCache();

Flags

Flags are applied to regular cache methods to alter the behavior of certain methods. For a list of all available flags, and their effects, see the Flag enumeration. Flags are applied using AdvancedCache.withFlags(). This builder method can be used to apply any number of flags to a cache invocation, for example:

advancedCache.withFlags(Flag.CACHE_MODE_LOCAL, Flag.SKIP_LOCKING)

.withFlags(Flag.FORCE_SYNCHRONOUS)

.put("hello", "world");

Asynchronous API

In addition to synchronous API methods like Cache.put(), Cache.remove(), and so on, Infinispan also has an asynchronous, non-blocking API where you can achieve the same results in a non-blocking fashion.

These methods are named in a similar fashion to their blocking counterparts, with "Async" appended, for example Cache.putAsync() and Cache.removeAsync(). These asynchronous counterparts return a CompletableFuture that contains the actual result of the operation.

For example, in a cache parameterized as Cache<String, String>, Cache.put(String key, String value) returns String while Cache.putAsync(String key, String value) returns CompletableFuture<String>.
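Because these methods return CompletableFuture, their results compose with the standard CompletableFuture methods. The following plain-Java sketch uses CompletableFuture.allOf to wait once for a whole batch of writes. The putAsync helper and the ConcurrentHashMap store are hypothetical stand-ins for Cache.putAsync and a cache, so the sketch runs without an Infinispan cluster.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;

public final class AsyncPutsSketch {
    private AsyncPutsSketch() {}

    // Hypothetical stand-in for Cache.putAsync: runs the write on the common
    // ForkJoinPool and completes with the previous value, like Map.put.
    static CompletableFuture<String> putAsync(Map<String, String> store, String key, String value) {
        return CompletableFuture.supplyAsync(() -> store.put(key, value));
    }

    // Issues all writes without blocking and returns one future that completes when
    // every write has completed, or completes exceptionally if any write fails.
    public static CompletableFuture<Void> putAllAsync(Map<String, String> store, Map<String, String> entries) {
        CompletableFuture<?>[] futures = entries.entrySet().stream()
                .map(e -> putAsync(store, e.getKey(), e.getValue()))
                .toArray(CompletableFuture[]::new);
        return CompletableFuture.allOf(futures);
    }

    public static void main(String[] args) {
        Map<String, String> store = new ConcurrentHashMap<>();
        CompletableFuture<Void> all = putAllAsync(store, Map.of("k1", "v1", "k2", "v2", "k3", "v3"));
        // Attach a continuation instead of blocking; join() here only keeps main alive.
        all.thenRun(() -> System.out.println("stored " + store.size() + " entries")).join();
    }
}
```

A continuation such as thenRun lets the calling thread move on immediately, which is the main benefit of the non-blocking API over calling get() on each future.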

Why use such an API?

Non-blocking APIs provide all of the guarantees of synchronous communication, including the ability to handle communication failures and exceptions, without forcing you to block until a call completes. This allows you to better harness parallelism in your system. For example:

Set<CompletableFuture<?>> futures = new HashSet<>();

futures.add(cache.putAsync(key1, value1)); // does not block

futures.add(cache.putAsync(key2, value2)); // does not block

futures.add(cache.putAsync(key3, value3)); // does not block

// the remote calls for the 3 puts will effectively be executed

// in parallel, particularly useful if running in distributed mode

// and the 3 keys would typically be pushed to 3 different nodes

// in the cluster

// check that the puts completed successfully

for (CompletableFuture<?> f: futures) f.get();

Which processes actually happen asynchronously?

There are four things in Infinispan that can be considered to be on the critical path of a typical write operation. These are, in descending order of cost:

-

network calls

-

marshalling

-

writing to a cache store (optional)

-

locking

Using the async methods takes network calls and marshalling off the critical path. However, for various technical reasons, writing to a cache store and acquiring locks still happen in the caller’s thread.

4. Enabling and configuring Infinispan statistics and JMX monitoring

Infinispan can provide Cache Manager and cache statistics as well as export JMX MBeans.

4.1. Enabling statistics in embedded caches

Configure Infinispan to export statistics for the Cache Manager and embedded caches.

- Open your Infinispan configuration for editing.

- Add the statistics="true" attribute or the .statistics(true) method.

- Save and close your Infinispan configuration.

Embedded cache statistics

<infinispan>

<cache-container statistics="true">

<distributed-cache statistics="true"/>

<replicated-cache statistics="true"/>

</cache-container>

</infinispan>

GlobalConfigurationBuilder global = GlobalConfigurationBuilder.defaultClusteredBuilder();
// Enables statistics for the Cache Manager.
global.cacheContainer().statistics(true);
DefaultCacheManager cacheManager = new DefaultCacheManager(global.build());
// Enables statistics for a cache.
ConfigurationBuilder builder = new ConfigurationBuilder();
builder.statistics().enable();

4.2. Enabling statistics in remote caches

Infinispan Server automatically enables statistics for the default Cache Manager. However, you must explicitly enable statistics for your caches.

- Open your Infinispan configuration for editing.

- Add the statistics attribute or field and specify true as the value.

- Save and close your Infinispan configuration.

Remote cache statistics

<distributed-cache statistics="true" />

{

"distributed-cache": {

"statistics": "true"

}

}

distributedCache:
  statistics: true

4.3. Enabling Hot Rod client statistics

Hot Rod Java clients can provide statistics that include remote cache and near-cache hits and misses.

- Open your Hot Rod Java client configuration for editing.

- Set true as the value for the statistics property or invoke the statistics().enable() methods.

- Export JMX MBeans for your Hot Rod client with the jmx and jmx_domain properties or invoke the jmxEnable() and jmxDomain() methods.

- Save and close your client configuration.

Hot Rod Java client statistics

ConfigurationBuilder builder = new ConfigurationBuilder();

builder.statistics().enable()

.jmxEnable()

.jmxDomain("my.domain.org")

.addServer()

.host("127.0.0.1")

.port(11222);

RemoteCacheManager remoteCacheManager = new RemoteCacheManager(builder.build());

infinispan.client.hotrod.statistics = true

infinispan.client.hotrod.jmx = true

infinispan.client.hotrod.jmx_domain = my.domain.org

4.4. Configuring Infinispan metrics

Infinispan generates metrics that are compatible with any monitoring system.

-

Gauges provide values such as the average number of nanoseconds for write operations or JVM uptime.

-

Histograms provide details about operation execution times such as read, write, and remove times.

By default, Infinispan generates gauges when you enable statistics, but you can also configure it to generate histograms.

Infinispan metrics are provided at the

- You must add Micrometer Core and Micrometer Registry Prometheus JARs to your classpath to export Infinispan metrics for embedded caches.

- Open your Infinispan configuration for editing.

- Add the metrics element or object to the cache container.

- Enable or disable gauges with the gauges attribute or field.

- Enable or disable histograms with the histograms attribute or field.

- Enable or disable the export of metrics in legacy format using the legacy attribute or field.

- Save and close your Infinispan configuration.

Metrics configuration

<infinispan>
  <cache-container statistics="true">
    <metrics gauges="true"
             histograms="true" />
  </cache-container>
</infinispan>

{
  "infinispan" : {
    "cache-container" : {
      "statistics" : "true",
      "metrics" : {
        "gauges" : "true",
        "histograms" : "true"
      }
    }
  }
}

infinispan:
  cacheContainer:
    statistics: "true"
    metrics:
      gauges: "true"
      histograms: "true"

GlobalConfiguration globalConfig = new GlobalConfigurationBuilder()
  //Computes and collects statistics for the Cache Manager.
  .statistics().enable()
  //Exports collected statistics as gauge and histogram metrics.
  .metrics().gauges(true).histograms(true)
  .build();

Legacy format

For backwards compatibility, Infinispan currently exports metrics in a legacy format by default. To export metrics in the new format, set the legacy attribute or field to false.

<infinispan>
  <cache-container statistics="true">
    <metrics legacy="false" />
  </cache-container>
</infinispan>

{
  "infinispan" : {
    "cache-container" : {
      "statistics" : "true",
      "metrics" : {
        "legacy" : "false"
      }
    }
  }
}

infinispan:
  cacheContainer:
    statistics: "true"
    metrics:
      legacy: "false"

Infinispan Server exposes statistics through the metrics endpoint that you can collect with monitoring tools such as Prometheus.

To verify that statistics are exported to the metrics endpoint, retrieve metrics with one of the following commands. The first command returns metrics in the default format; the second adds an Accept header to request the OpenMetrics format.

curl -v http://localhost:11222/metrics \
  --digest -u username:password

curl -v http://localhost:11222/metrics \
  --digest -u username:password \
  -H "Accept: application/openmetrics-text"

Infinispan no longer provides metrics in MicroProfile JSON format.
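If you prefer to check the endpoint from Java instead of curl, the following sketch issues the same request and extracts sample values from the returned text. It assumes the Prometheus/OpenMetrics text layout of one name{labels} value [timestamp] sample per line and reuses the username:password placeholders from the curl commands. MetricsEndpointClient is a hypothetical helper, and its parser is deliberately minimal rather than a complete exposition-format parser.

```java
import java.io.IOException;
import java.io.InputStream;
import java.net.Authenticator;
import java.net.HttpURLConnection;
import java.net.PasswordAuthentication;
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: fetches the metrics endpoint and parses sample lines.
class MetricsEndpointClient {

    // HttpURLConnection answers the server's authentication challenge
    // (for example Digest) with the credentials from this Authenticator.
    static String fetch(String url, String user, char[] password, boolean openMetrics)
            throws IOException {
        HttpURLConnection conn = (HttpURLConnection) URI.create(url).toURL().openConnection();
        conn.setAuthenticator(new Authenticator() {
            @Override
            protected PasswordAuthentication getPasswordAuthentication() {
                return new PasswordAuthentication(user, password);
            }
        });
        if (openMetrics) {
            // Same header as the second curl command
            conn.setRequestProperty("Accept", "application/openmetrics-text");
        }
        try (InputStream in = conn.getInputStream()) {
            return new String(in.readAllBytes(), StandardCharsets.UTF_8);
        } finally {
            conn.disconnect();
        }
    }

    // Parses lines of the form: name{labels} value [timestamp]
    // Comment lines (# HELP, # TYPE, # EOF) and values that are not Java doubles are skipped.
    static Map<String, Double> parseSamples(String text) {
        Map<String, Double> samples = new LinkedHashMap<>();
        for (String rawLine : text.split("\n")) {
            String line = rawLine.trim();
            if (line.isEmpty() || line.startsWith("#")) {
                continue;
            }
            // Label values may contain spaces, so search for the separator after the closing brace
            int closingBrace = line.lastIndexOf('}');
            int separator = line.indexOf(' ', Math.max(closingBrace, 0));
            if (separator < 0) {
                continue;
            }
            String series = line.substring(0, separator);
            String value = line.substring(separator + 1).trim().split("\\s+")[0];
            try {
                samples.put(series, Double.parseDouble(value));
            } catch (NumberFormatException e) {
                // For example "+Inf", which Double.parseDouble does not accept
            }
        }
        return samples;
    }

    public static void main(String[] args) throws IOException {
        String body = fetch("http://localhost:11222/metrics", "username",
                "password".toCharArray(), false);
        parseSamples(body).forEach((series, value) -> System.out.println(series + " = " + value));
    }
}
```

Pass true for openMetrics to send the same Accept header as the second curl command.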

4.5. Registering JMX MBeans

Infinispan can register JMX MBeans that you can use to collect statistics and perform administrative operations.

You must also enable statistics; otherwise, Infinispan provides 0 values for all statistic attributes in JMX MBeans.

Use JMX MBeans for collecting statistics only when Infinispan is embedded in applications, not with a remote Infinispan Server. When you use JMX MBeans to collect statistics from a remote Infinispan Server, the data received from JMX MBeans might differ from the data received from other APIs such as REST. In such cases, the data received from the other APIs is more accurate.

- Open your Infinispan configuration for editing.
- Add the jmx element or object to the cache container and specify true as the value for the enabled attribute or field.
- Add the domain attribute or field and, if required, specify the domain where JMX MBeans are exposed.
- Save and close your Infinispan configuration.

JMX configuration

<infinispan>
  <cache-container statistics="true">
    <jmx enabled="true"
         domain="example.com"/>
  </cache-container>
</infinispan>

{
  "infinispan" : {
    "cache-container" : {
      "statistics" : "true",
      "jmx" : {
        "enabled" : "true",
        "domain" : "example.com"
      }
    }
  }
}

infinispan:
  cacheContainer:
    statistics: "true"
    jmx:
      enabled: "true"
      domain: "example.com"

GlobalConfiguration global = GlobalConfigurationBuilder.defaultClusteredBuilder()
  .jmx().enable()
  .domain("org.mydomain")
  .build();

4.5.1. Enabling JMX remote ports

Provide unique remote JMX ports to expose Infinispan MBeans through connections in JMXServiceURL format.

Infinispan Server does not expose JMX remotely through the single port endpoint. If you want to access Infinispan Server remotely through JMX, you must enable a remote port.

You can enable remote JMX ports using one of the following approaches:

- Enable remote JMX ports that require authentication to one of the Infinispan Server security realms.
- Enable remote JMX ports manually using the standard Java management configuration options.

- For remote JMX with authentication, define JMX-specific user roles using the default security realm. Users must have the controlRole with read/write access or the monitorRole with read-only access to access any JMX resources. Infinispan automatically maps global ADMIN and MONITOR permissions to the JMX controlRole and monitorRole roles.

Start Infinispan Server with a remote JMX port enabled in one of the following ways:

- Enable remote JMX through port 9999.

  bin/server.sh --jmx 9999

  Using remote JMX with SSL disabled is not intended for production environments.

- Pass the following system properties to Infinispan Server at startup.

  bin/server.sh -Dcom.sun.management.jmxremote.port=9999 -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false

  Enabling remote JMX with no authentication or SSL is not secure and not recommended in any environment. Disabling authentication and SSL allows unauthorized users to connect to your server and access the data hosted there.
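After the port is open, any standard JMX client can connect to it. The following JDK-only sketch assumes the conventional RMI connector path (/jndi/rmi://host:port/jmxrmi) used by the com.sun.management.jmxremote.port property; verify the URL for the --jmx option against your server. RemoteJmxClient is a hypothetical class, and username and password are placeholders for a security realm user with controlRole or monitorRole access.

```java
import java.io.IOException;
import java.util.HashMap;
import java.util.Map;
import javax.management.MBeanServerConnection;
import javax.management.remote.JMXConnector;
import javax.management.remote.JMXConnectorFactory;
import javax.management.remote.JMXServiceURL;

// Hypothetical client for a remote JMX port such as 9999.
class RemoteJmxClient {

    // Assumed standard RMI connector URL; adjust if your server publishes a different path
    static JMXServiceURL serviceUrl(String host, int port) throws IOException {
        return new JMXServiceURL("service:jmx:rmi:///jndi/rmi://" + host + ":" + port + "/jmxrmi");
    }

    // Sends the user name and password as JMX credentials; the roles mapped to
    // that user decide whether it gets read/write or read-only access
    static JMXConnector connect(String host, int port, String user, String password)
            throws IOException {
        Map<String, Object> env = new HashMap<>();
        env.put(JMXConnector.CREDENTIALS, new String[] {user, password});
        return JMXConnectorFactory.connect(serviceUrl(host, port), env);
    }

    public static void main(String[] args) throws IOException {
        try (JMXConnector connector = connect("localhost", 9999, "username", "password")) {
            MBeanServerConnection conn = connector.getMBeanServerConnection();
            System.out.println("MBeans visible: " + conn.getMBeanCount());
        }
    }
}
```

If you started the server with com.sun.management.jmxremote.authenticate=false, the credentials are not required, but the warning about unauthenticated access still applies.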

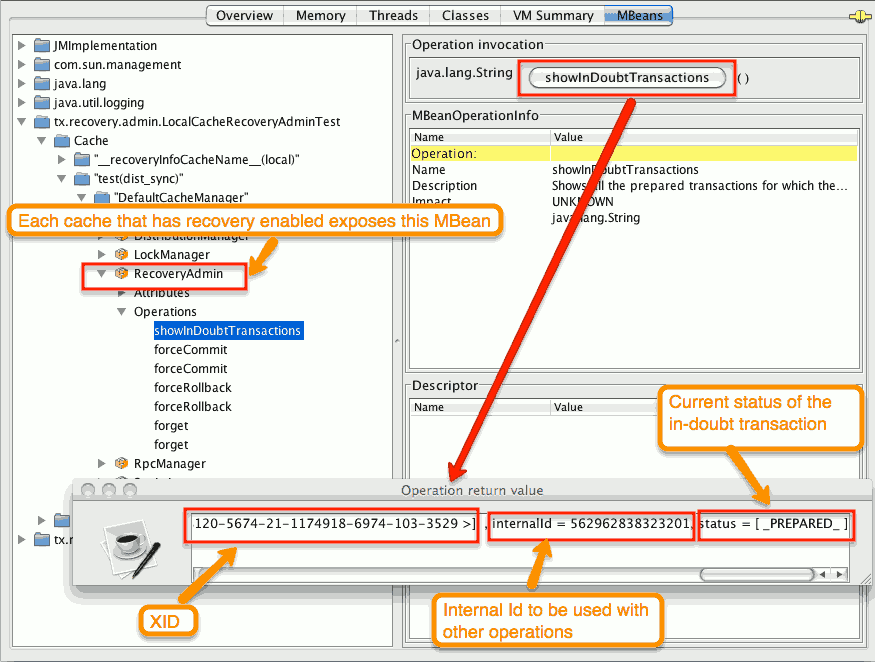

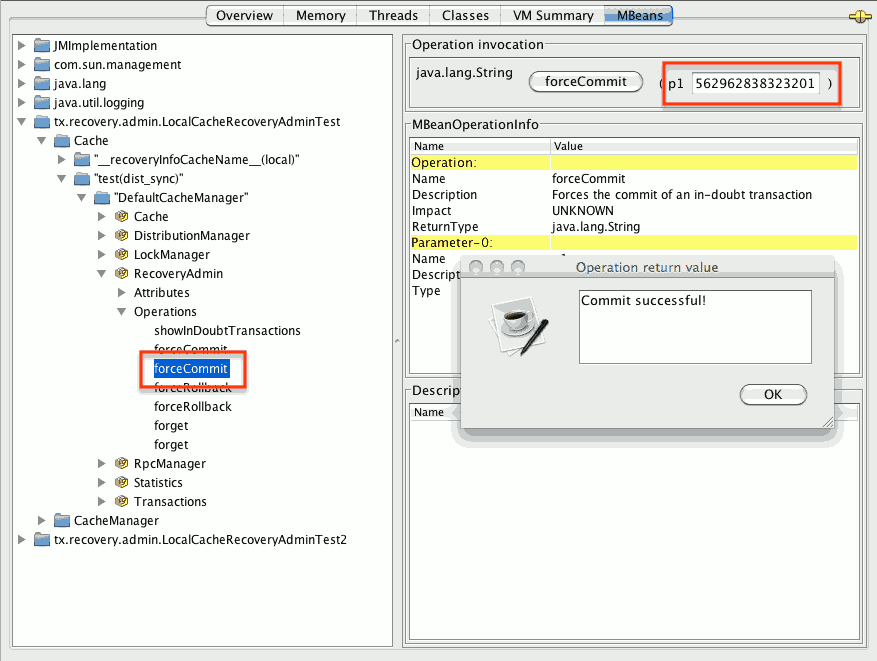

4.5.2. Infinispan MBeans

Infinispan exposes JMX MBeans that represent manageable resources.

org.infinispan:type=Cache
  Attributes and operations available for cache instances.

org.infinispan:type=CacheManager
  Attributes and operations available for Cache Managers, including Infinispan cache and cluster health statistics.

For a complete list of available JMX MBeans along with descriptions and available operations and attributes, see the Infinispan JMX Components documentation.
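To locate these MBeans programmatically, you can query an MBean server with an ObjectName pattern; a pattern such as org.infinispan:type=Cache,* matches every MBean in that domain whose type key is Cache, whatever its other keys. The sketch below uses only javax.management. It assumes the default org.infinispan domain (pass your configured domain instead if you set one) and assumes that, in embedded deployments, Infinispan uses the platform MBean server unless you configure a custom lookup. InfinispanMBeanQuery is a hypothetical class. Statistic attributes read as 0 unless statistics are enabled.

```java
import java.lang.management.ManagementFactory;
import java.util.Set;
import java.util.TreeSet;
import javax.management.MBeanServerConnection;
import javax.management.MalformedObjectNameException;
import javax.management.ObjectName;

// Hypothetical helper that lists cache MBeans in a given JMX domain.
class InfinispanMBeanQuery {

    // Matches every MBean in the domain whose "type" key is Cache
    static ObjectName cachePattern(String domain) throws MalformedObjectNameException {
        return new ObjectName(domain + ":type=Cache,*");
    }

    // Works with a local MBeanServer or a remote MBeanServerConnection
    static Set<String> cacheMBeans(MBeanServerConnection conn, String domain) throws Exception {
        Set<String> names = new TreeSet<>();
        for (ObjectName name : conn.queryNames(cachePattern(domain), null)) {
            names.add(name.getCanonicalName());
        }
        return names;
    }

    public static void main(String[] args) throws Exception {
        // Embedded case: query the platform MBean server of the current JVM
        MBeanServerConnection platform = ManagementFactory.getPlatformMBeanServer();
        cacheMBeans(platform, "org.infinispan").forEach(System.out::println);
    }
}
```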

4.5.3. Registering MBeans in custom MBean servers

Infinispan includes an MBeanServerLookup interface that you can use to register MBeans in custom MBeanServer instances.

- Create an implementation of MBeanServerLookup so that the getMBeanServer() method returns the custom MBeanServer instance.
- Configure Infinispan to register JMX MBeans.

- Open your Infinispan configuration for editing.
- Add the mbean-server-lookup attribute or field to the JMX configuration for the Cache Manager.
- Specify the fully qualified name (FQN) of your MBeanServerLookup implementation.
- Save and close your Infinispan configuration.
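The lookup class only has to hand Infinispan the MBeanServer you want it to use. The following JDK-only sketch shows that part: it lazily creates one dedicated MBeanServer and returns the same instance on every call. MyMBeanServerLookup and the "custom" default domain are hypothetical, and the class deliberately does not implement Infinispan's MBeanServerLookup interface so that it compiles without Infinispan on the classpath. In a real implementation, declare that it implements MBeanServerLookup and match the getMBeanServer signature of your Infinispan version.

```java
import javax.management.MBeanServer;
import javax.management.MBeanServerFactory;

// Hypothetical stand-in for an MBeanServerLookup implementation.
// It does not implement Infinispan's interface; add "implements MBeanServerLookup"
// and adapt the method signature for your Infinispan version.
class MyMBeanServerLookup {

    // Holder idiom: the server is created on first use, exactly once, thread-safely
    private static final class Holder {
        // createMBeanServer keeps a reference so MBeanServerFactory.findMBeanServer can find it
        static final MBeanServer SERVER = MBeanServerFactory.createMBeanServer("custom");
    }

    // Returns the same custom MBeanServer instance on every call
    public MBeanServer getMBeanServer() {
        return Holder.SERVER;
    }
}
```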

JMX MBean server lookup configuration

<infinispan>
  <cache-container statistics="true">
    <jmx enabled="true"
         domain="example.com"
         mbean-server-lookup="com.example.MyMBeanServerLookup"/>
  </cache-container>
</infinispan>

{
  "infinispan" : {
    "cache-container" : {
      "statistics" : "true",
      "jmx" : {
        "enabled" : "true",
        "domain" : "example.com",
        "mbean-server-lookup" : "com.example.MyMBeanServerLookup"
      }
    }
  }
}

infinispan:
  cacheContainer:
    statistics: "true"
    jmx:
      enabled: "true"
      domain: "example.com"
      mbeanServerLookup: "com.example.MyMBeanServerLookup"

GlobalConfiguration global = GlobalConfigurationBuilder.defaultClusteredBuilder()
  .jmx().enable()
  .domain("org.mydomain")
  .mBeanServerLookup(new com.example.MyMBeanServerLookup())
  .build();

4.6. Exporting metrics during a state transfer operation

You can export time metrics for clustered caches that Infinispan redistributes across nodes.

A state transfer operation occurs when a clustered cache topology changes, for example when a node joins or leaves a cluster. During a state transfer operation, Infinispan exposes state transfer attributes as properties and exports metrics from each cache, so that you can determine the status of each cache.

You cannot perform a state transfer operation in invalidation mode.

Infinispan generates time metrics that are compatible with the REST API and the JMX API.

- Configure Infinispan metrics.
- Enable metrics for your cache type, such as embedded cache or remote cache.
- Initiate a state transfer operation by changing your clustered cache topology.

- Choose one of the following methods:
  - Configure Infinispan to use the REST API to collect metrics.
  - Configure Infinispan to use the JMX API to collect metrics.

4.7. Monitoring the status of cross-site replication