Add Infinispan libraries to your Java project and create embedded caches that store data in the same memory space where you execute application code.

1. Adding Infinispan to your Maven repository

Infinispan Java distributions are available from Maven and require JDK 17 or later.

Infinispan artifacts are available from Maven Central. See the org.infinispan group for available Infinispan artifacts.

1.1. Configuring your project POM

Configure Project Object Model (POM) files in your project to use Infinispan dependencies for embedded caches, Hot Rod clients, and other capabilities.

- Open your project pom.xml for editing.
- Define the version.infinispan property with the correct Infinispan version.
- Include the infinispan-bom in a dependencyManagement section.

  The Bill Of Materials (BOM) controls dependency versions, which avoids version conflicts and means you do not need to set the version for each Infinispan artifact you add as a dependency to your project.
- Save and close pom.xml.

The following example shows the Infinispan version and BOM:

<properties>
  <version.infinispan>16.1.0.Dev02</version.infinispan>
</properties>

<dependencyManagement>
  <dependencies>
    <dependency>
      <groupId>org.infinispan</groupId>
      <artifactId>infinispan-bom</artifactId>
      <version>${version.infinispan}</version>
      <type>pom</type>
      <scope>import</scope>
    </dependency>
  </dependencies>
</dependencyManagement>

Add Infinispan artifacts as dependencies to your pom.xml as required.

2. Creating embedded caches

Infinispan provides an EmbeddedCacheManager API that lets you control both the Cache Manager and embedded cache lifecycles programmatically.

2.1. Adding Infinispan to your project

Add Infinispan to your project to create embedded caches in your applications.

- Configure your project to get Infinispan artifacts from the Maven repository.
- Add the infinispan-core artifact as a dependency in your pom.xml as follows:

<dependencies>
  <dependency>
    <groupId>org.infinispan</groupId>
    <artifactId>infinispan-core</artifactId>
  </dependency>
</dependencies>

2.2. Creating and using embedded caches

Infinispan provides a GlobalConfigurationBuilder API that controls the Cache Manager and a ConfigurationBuilder API that configures caches.

- Add the infinispan-core artifact as a dependency in your pom.xml.

- Initialize a CacheManager.

  You must always call the cacheManager.start() method to initialize a CacheManager before you can create caches. Default constructors do this for you but there are overloaded versions of the constructors that do not.

  Cache Managers are also heavyweight objects and Infinispan recommends instantiating only one instance per JVM.
- Use the ConfigurationBuilder API to define cache configuration.
- Obtain caches with getCache(), createCache(), or getOrCreateCache() methods.

  Infinispan recommends using the getOrCreateCache() method because it either creates a cache on all nodes or returns an existing cache.
- If necessary, use the PERMANENT flag for caches to survive restarts.
- Stop the CacheManager by calling the cacheManager.stop() method to release JVM resources and gracefully shut down any caches.

import org.infinispan.Cache;
import org.infinispan.commons.api.CacheContainerAdmin;
import org.infinispan.configuration.cache.CacheMode;
import org.infinispan.configuration.cache.ConfigurationBuilder;
import org.infinispan.configuration.global.GlobalConfigurationBuilder;
import org.infinispan.manager.DefaultCacheManager;

// Set up a clustered Cache Manager.
GlobalConfigurationBuilder global = GlobalConfigurationBuilder.defaultClusteredBuilder();
// Initialize the default Cache Manager.
DefaultCacheManager cacheManager = new DefaultCacheManager(global.build());
// Create a distributed cache with synchronous replication.
ConfigurationBuilder builder = new ConfigurationBuilder();
builder.clustering().cacheMode(CacheMode.DIST_SYNC);
// Obtain a volatile cache.
Cache<String, String> cache = cacheManager.administration()
      .withFlags(CacheContainerAdmin.AdminFlag.VOLATILE)
      .getOrCreateCache("myCache", builder.build());
// Stop the Cache Manager.
cacheManager.stop();

getCache() method

Invoke the getCache(String) method to obtain caches, as follows:

Cache<String, String> myCache = manager.getCache("myCache");

The preceding operation creates a cache named myCache, if it does not already exist, and returns it.

Using the getCache() method creates the cache only on the node where you invoke the method. In other words, it performs a local operation that must be invoked on each node across the cluster. Typically, applications deployed across multiple nodes obtain caches during initialization to ensure that caches are symmetric and exist on each node.

createCache() method

Invoke the createCache() method to create caches dynamically across the entire cluster.

Cache<String, String> myCache = manager.administration().createCache("myCache", "myTemplate");

The preceding operation also automatically creates caches on any nodes that subsequently join the cluster.

Caches that you create with the createCache() method are ephemeral by default. If the entire cluster shuts down, the cache is not automatically created again when it restarts.

PERMANENT flag

Use the PERMANENT flag to ensure that caches can survive restarts.

Cache<String, String> myCache = manager.administration().withFlags(AdminFlag.PERMANENT).createCache("myCache", "myTemplate");

For the PERMANENT flag to take effect, you must enable global state and set a configuration storage provider.

For more information about configuration storage providers, see GlobalStateConfigurationBuilder#configurationStorage().

Visit the code tutorials to try examples with embedded Infinispan. See the code tutorial with embedded caches.

2.3. Cache API

Infinispan provides a Cache interface that exposes simple methods for adding, retrieving and removing entries, including atomic mechanisms exposed by the JDK’s ConcurrentMap interface. Based on the cache mode used, invoking these methods will trigger a number of things, potentially replicating an entry to a remote node or looking up an entry from a remote node, or even a cache store.

For simple usage, using the Cache API should be no different from using the JDK Map API, and hence migrating from simple in-memory caches based on a Map to Infinispan’s Cache should be trivial.

Certain methods exposed in Map have performance consequences when used with Infinispan, such as size(), values(), keySet(), and entrySet(). Specific methods on the keySet, values, and entrySet collections are fine to use; see their Javadoc for further details.

Attempting to perform these operations globally could have a large performance impact and become a scalability bottleneck. As such, avoid these methods in production code and use them only for informational or debugging purposes.

Using certain flags with the withFlags() method can mitigate some of these concerns; check each method’s documentation for more details.

In addition to simply storing entries, Infinispan’s cache API allows you to attach mortality information to data. For example, using put(key, value) creates an immortal entry, that is, an entry that lives in the cache forever, until it is removed (or evicted from memory to prevent running out of memory). If, however, you put data in the cache using put(key, value, lifespan, timeunit), this creates a mortal entry, that is, an entry that has a fixed lifespan and expires after that lifespan.

In addition to lifespan, Infinispan also supports maxIdle as an additional metric with which to determine expiration. Any combination of lifespans or maxIdles can be used.

putForExternalRead operation

Infinispan’s Cache class contains a different 'put' operation called putForExternalRead. This operation is particularly useful when Infinispan is used as a temporary cache for data that is persisted elsewhere. Under heavy read scenarios, contention in the cache should not delay the real transactions at hand, since caching should just be an optimization and not something that gets in the way.

To achieve this, putForExternalRead() acts as a put call that only operates if the key is not present in the cache, and fails fast and silently if another thread is trying to store the same key at the same time. In this scenario, caching data is a way to optimize the system, and a failure in caching should not affect the ongoing transaction, which is why failure is handled differently. putForExternalRead() is considered to be a fast operation because, regardless of whether it is successful or not, it does not wait for any locks and so returns to the caller promptly.

To understand how to use this operation, let’s look at a basic example. Imagine a cache of Person instances, each keyed by a PersonId, whose data originates in a separate data store. The following code shows the most common pattern of using putForExternalRead within the context of this example:

// Id of the person to look up, provided by the application
PersonId id = ...;

// Get a reference to the cache where person instances will be stored
Cache<PersonId, Person> cache = ...;

// First, check whether the cache contains the person instance
// associated with the given id
Person cachedPerson = cache.get(id);

if (cachedPerson == null) {
   // The person is not cached yet, so query the data store with the id
   Person person = dataStore.lookup(id);

   // Cache the person along with the id so that future requests can
   // retrieve it from memory rather than going to the data store
   cache.putForExternalRead(id, person);

   // Return the freshly loaded person to the application
   return person;
} else {
   // The person was found in the cache, so return it to the application
   return cachedPerson;
}

Note that putForExternalRead should never be used as a mechanism to update the cache with a new Person instance originating from application execution (for example, from a transaction that modifies a Person’s address). When updating cached values, use the standard put operation; otherwise the cache is likely to hold inconsistent data.
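The "store only if absent" part of this pattern can be sketched with the JDK alone. The following is a rough analogy, not Infinispan code: a ConcurrentMap stands in for the cache, putIfAbsent() stands in for putForExternalRead(), and the class name ReadThroughSketch and its dataStore loader are hypothetical. It does not reproduce the fail-fast locking behavior described above.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;
import java.util.function.Function;

public class ReadThroughSketch {
    // Stand-in for the cache; this is a plain JDK map, NOT an Infinispan Cache.
    private final ConcurrentMap<String, String> cache = new ConcurrentHashMap<>();
    // Hypothetical loader that represents the external data store.
    private final Function<String, String> dataStore;

    public ReadThroughSketch(Function<String, String> dataStore) {
        this.dataStore = dataStore;
    }

    public String lookup(String id) {
        // First, check whether the value is already cached.
        String cached = cache.get(id);
        if (cached != null) {
            return cached;
        }
        // Not cached yet, so query the data store.
        String loaded = dataStore.apply(id);
        if (loaded != null) {
            // Stores the value only if the key is still absent, loosely
            // mirroring the "only if not present" rule of putForExternalRead().
            cache.putIfAbsent(id, loaded);
        }
        return loaded;
    }

    public boolean isCached(String id) {
        return cache.containsKey(id);
    }
}
```

A second lookup for the same id is served from the map, so the loader runs only once per key in the single-threaded case.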

2.3.1. AdvancedCache API

In addition to the simple Cache interface, Infinispan offers an AdvancedCache interface, geared towards extension authors. The AdvancedCache offers the ability to access certain internal components and to apply flags to alter the default behavior of certain cache methods. The following code snippet depicts how an AdvancedCache can be obtained:

AdvancedCache advancedCache = cache.getAdvancedCache();

Flags

Flags are applied to regular cache methods to alter the behavior of certain methods. For a list of all available flags, and their effects, see the Flag enumeration. Flags are applied using AdvancedCache.withFlags(). This builder method can be used to apply any number of flags to a cache invocation, for example:

advancedCache.withFlags(Flag.CACHE_MODE_LOCAL, Flag.SKIP_LOCKING)
   .withFlags(Flag.FORCE_SYNCHRONOUS)
   .put("hello", "world");

2.3.2. Asynchronous API

In addition to synchronous API methods such as Cache.put() and Cache.remove(), Infinispan also has an asynchronous, non-blocking API that achieves the same results without blocking the caller.

These methods are named like their blocking counterparts, with "Async" appended, for example Cache.putAsync() and Cache.removeAsync(). These asynchronous counterparts return a CompletableFuture that contains the actual result of the operation.

For example, in a cache parameterized as Cache<String, String>, Cache.put(String key, String value) returns String while Cache.putAsync(String key, String value) returns CompletableFuture<String>.

Why use such an API?

Non-blocking APIs are powerful in that they provide all of the guarantees of synchronous communications - with the ability to handle communication failures and exceptions - with the ease of not having to block until a call completes. This allows you to better harness parallelism in your system. For example:

Set<CompletableFuture<?>> futures = new HashSet<>();
futures.add(cache.putAsync(key1, value1)); // does not block
futures.add(cache.putAsync(key2, value2)); // does not block
futures.add(cache.putAsync(key3, value3)); // does not block

// the remote calls for the 3 puts will effectively be executed
// in parallel, particularly useful if running in distributed mode
// and the 3 keys would typically be pushed to 3 different nodes
// in the cluster

// check that the puts completed successfully
for (CompletableFuture<?> f: futures) f.get();

Which processes actually happen asynchronously?

There are four things in Infinispan that can be considered to be on the critical path of a typical write operation. These are, in descending order of cost:

- network calls
- marshalling
- writing to a cache store (optional)
- locking

Using the async methods takes the network calls and marshalling off the critical path. For various technical reasons, however, writing to a cache store and acquiring locks still happen in the caller’s thread.
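As a complement to the parallel puts shown earlier in this section, the following JDK-only sketch waits for several futures at once with CompletableFuture.allOf(). The class AsyncPutSketch and its putAsync() method are hypothetical stand-ins backed by a ConcurrentHashMap, assumed here only so that the example runs without Infinispan; with a real cache, the futures would come from Cache.putAsync().

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;

public class AsyncPutSketch {
    // Stand-in for a cache; this is a plain JDK map, NOT an Infinispan Cache.
    private final Map<String, String> store = new ConcurrentHashMap<>();

    // Hypothetical analogue of Cache.putAsync(): the future completes with
    // the previous value for the key, or null if there was none.
    public CompletableFuture<String> putAsync(String key, String value) {
        return CompletableFuture.supplyAsync(() -> store.put(key, value));
    }

    public String get(String key) {
        return store.get(key);
    }

    public static void main(String[] args) {
        AsyncPutSketch cache = new AsyncPutSketch();
        List<CompletableFuture<String>> futures = List.of(
            cache.putAsync("key1", "value1"),  // does not block
            cache.putAsync("key2", "value2"),  // does not block
            cache.putAsync("key3", "value3")); // does not block

        // allOf() completes once every put has completed; join() throws a
        // CompletionException if any of the puts failed.
        CompletableFuture.allOf(futures.toArray(new CompletableFuture[0])).join();

        System.out.println(cache.get("key2")); // prints "value2"
    }
}
```

Waiting on a single combined future keeps the error handling in one place instead of calling get() on each future in a loop.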

3. Programmatically configuring user roles and permissions

Configure security authorization programmatically when using embedded caches in Java applications.

3.1. Infinispan user roles and permissions

Infinispan includes several roles that provide users with permissions to access caches and Infinispan resources.

| Role | Permissions | Description |
|---|---|---|
| | ALL | Superuser with all permissions including control of the Cache Manager lifecycle. |
| | ALL_READ, ALL_WRITE, LISTEN, EXEC, MONITOR, CREATE | Can create and delete Infinispan resources in addition to |
| | ALL_READ, ALL_WRITE, LISTEN, EXEC, MONITOR | Has read and write access to Infinispan resources in addition to |
| | ALL_READ, MONITOR | Has read access to Infinispan resources in addition to |
| | MONITOR | Can view statistics via JMX and the |

3.1.1. Permissions

User roles are sets of permissions with different access levels.

| Permission | Function | Description |
|---|---|---|
| CONFIGURATION | | Defines new cache configurations. |
| LISTEN | | Registers listeners against a Cache Manager. |
| LIFECYCLE | | Stops the Cache Manager. |
| CREATE | | Create and remove container resources such as caches, counters, schemas, and scripts. |
| MONITOR | | Allows access to JMX statistics and the |
| ALL | - | Includes all Cache Manager permissions. |

| Permission | Function | Description |
|---|---|---|
| READ | | Retrieves entries from a cache. |
| WRITE | | Writes, replaces, removes, evicts data in a cache. |
| EXEC | | Allows code execution against a cache. |
| LISTEN | | Registers listeners against a cache. |
| BULK_READ | | Executes bulk retrieve operations. |
| BULK_WRITE | | Executes bulk write operations. |
| LIFECYCLE | | Starts and stops a cache. |
| ADMIN | | Allows access to underlying components and internal structures. |
| MONITOR | | Allows access to JMX statistics and the |
| ALL | - | Includes all cache permissions. |
| ALL_READ | - | Combines the READ and BULK_READ permissions. |
| ALL_WRITE | - | Combines the WRITE and BULK_WRITE permissions. |

3.1.2. Role and permission mappers

Infinispan implements users as a collection of principals.

Principals represent either an individual user identity, such as a username, or a group to which the users belong. Internally, these are implemented with the javax.security.auth.Subject class.

To enable authorization, the principals must be mapped to role names, which are then expanded into a set of permissions.

Infinispan includes the PrincipalRoleMapper API for associating security principals to roles, and the RolePermissionMapper API for associating roles with specific permissions.

Infinispan provides the following role and permission mapper implementations:

- Cluster role mapper: Stores principal to role mappings in the cluster registry.
- Cluster permission mapper: Stores role to permission mappings in the cluster registry. Allows you to dynamically modify user roles and permissions.
- Identity role mapper: Uses the principal name as the role name. The type or format of the principal name depends on the source. For example, in an LDAP directory the principal name could be a Distinguished Name (DN).
- Common name role mapper: Uses the Common Name (CN) as the role name. You can use this role mapper with an LDAP directory or with client certificates that contain Distinguished Names (DN); for example cn=managers,ou=people,dc=example,dc=com maps to the managers role.

By default, principal-to-role mapping is only applied to principals which represent groups. It is possible to configure Infinispan to also perform the mapping for user principals by setting the authorization.group-only-mapping configuration attribute to false.

Mapping users to roles and permissions in Infinispan

Consider the following user retrieved from an LDAP server, as a collection of DNs:

CN=myapplication,OU=applications,DC=mycompany
CN=dataprocessors,OU=groups,DC=mycompany
CN=finance,OU=groups,DC=mycompany

Using the Common name role mapper, the user would be mapped to the following roles:

dataprocessors
finance

Infinispan has the following role definitions:

dataprocessors: ALL_WRITE ALL_READ
finance: LISTEN

The user would have the following permissions:

ALL_WRITE ALL_READ LISTEN
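The expansion from DNs to roles to permissions can be traced with a small JDK-only sketch. It is an illustration under stated assumptions rather than Infinispan's implementation: CommonNameMappingSketch and its Permission enum are hypothetical names, and the sketch ignores the group-only mapping rule, so the myapplication principal contributes nothing simply because no role with that name is defined.

```java
import java.util.EnumSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import javax.naming.InvalidNameException;
import javax.naming.ldap.LdapName;
import javax.naming.ldap.Rdn;

public class CommonNameMappingSketch {
    // Local enum with the permissions used in the example;
    // NOT Infinispan's AuthorizationPermission.
    public enum Permission { ALL_READ, ALL_WRITE, LISTEN }

    // Returns the value of the CN attribute of a DN, or null if it has none.
    public static String commonName(String dn) {
        try {
            for (Rdn rdn : new LdapName(dn).getRdns()) {
                if ("CN".equalsIgnoreCase(rdn.getType())) {
                    return String.valueOf(rdn.getValue());
                }
            }
            return null;
        } catch (InvalidNameException e) {
            throw new IllegalArgumentException("Not a valid DN: " + dn, e);
        }
    }

    // Maps each DN to a role name via its CN, then unions the permissions
    // of every role that has a definition.
    public static Set<Permission> permissionsFor(List<String> dns,
                                                 Map<String, Set<Permission>> roles) {
        Set<Permission> granted = EnumSet.noneOf(Permission.class);
        for (String dn : dns) {
            String cn = commonName(dn);
            Set<Permission> perms = (cn == null) ? null : roles.get(cn);
            if (perms != null) {
                granted.addAll(perms);
            }
        }
        return granted;
    }

    public static void main(String[] args) {
        List<String> dns = List.of(
            "CN=myapplication,OU=applications,DC=mycompany",
            "CN=dataprocessors,OU=groups,DC=mycompany",
            "CN=finance,OU=groups,DC=mycompany");
        Map<String, Set<Permission>> roles = Map.of(
            "dataprocessors", EnumSet.of(Permission.ALL_WRITE, Permission.ALL_READ),
            "finance", EnumSet.of(Permission.LISTEN));
        System.out.println(permissionsFor(dns, roles));
    }
}
```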

3.1.3. Configuring role mappers

Infinispan enables the cluster role mapper and cluster permission mapper by default. To use a different implementation for role mapping, you must configure the role mappers.

- Open your Infinispan configuration for editing.
- Declare the role mapper as part of the security authorization in the Cache Manager configuration.
- Save the changes to your configuration.

With embedded caches you can programmatically configure role and permission mappers with the principalRoleMapper() and rolePermissionMapper() methods.

Role mapper configuration

<cache-container>
  <security>
    <authorization>
      <common-name-role-mapper />
    </authorization>
  </security>
</cache-container>

{
  "infinispan" : {
    "cache-container" : {
      "security" : {
        "authorization" : {
          "common-name-role-mapper": {}
        }
      }
    }
  }
}

infinispan:
  cacheContainer:
    security:
      authorization:
        commonNameRoleMapper: ~

3.2. Enabling and configuring authorization for embedded caches

When using embedded caches, you can configure authorization with the GlobalSecurityConfigurationBuilder and ConfigurationBuilder classes.

- Construct a GlobalConfigurationBuilder and enable security authorization with the security().authorization().enable() method.
- Specify a role mapper with the principalRoleMapper() method.
- If required, define custom role and permission mappings with the role() and permission() methods.

  GlobalConfigurationBuilder global = new GlobalConfigurationBuilder();
  global.security().authorization().enable()
        .principalRoleMapper(new ClusterRoleMapper())
        .role("myroleone").permission(AuthorizationPermission.ALL_WRITE)
        .role("myroletwo").permission(AuthorizationPermission.ALL_READ);
- Enable authorization for caches in the ConfigurationBuilder.
  - Add all roles from the global configuration.

    ConfigurationBuilder config = new ConfigurationBuilder();
    config.security().authorization().enable();
  - Explicitly define roles for a cache so that Infinispan denies access for users who do not have the role.

    ConfigurationBuilder config = new ConfigurationBuilder();
    config.security().authorization().enable().role("myroleone");

3.3. Adding authorization roles at runtime

Dynamically map roles to permissions when using security authorization with Infinispan caches.

- Configure authorization for embedded caches.
- Have ADMIN permissions for Infinispan.

- Obtain the RolePermissionMapper instance.
- Define new roles with the addRole() method.

  MutableRolePermissionMapper mapper = (MutableRolePermissionMapper) cacheManager.getCacheManagerConfiguration().security().authorization().rolePermissionMapper();
  mapper.addRole(Role.newRole("myroleone", true, AuthorizationPermission.ALL_WRITE, AuthorizationPermission.LISTEN));
  mapper.addRole(Role.newRole("myroletwo", true, AuthorizationPermission.READ, AuthorizationPermission.WRITE));

3.4. Executing code with secure caches

When you construct a DefaultCacheManager for an embedded cache that uses security authorization, the Cache Manager returns a SecureCache that checks the security context before invoking any operations.

A SecureCache also ensures that applications cannot retrieve lower-level insecure objects such as DataContainer.

For this reason, you must execute code with an Infinispan user that has a role with the appropriate level of permission.

- Configure authorization for embedded caches.

- If necessary, retrieve the current Subject from the Infinispan context:

  Security.getSubject();
- Wrap method calls in a PrivilegedAction to execute them with the Subject.

  Security.doAs(mySubject, (PrivilegedAction<String>)() -> cache.put("key", "value"));

3.5. Configuring the access control list (ACL) cache

When you grant or deny roles to users, Infinispan stores details about which users can access your caches internally. This ACL cache improves performance for security authorization by avoiding the need for Infinispan to calculate if users have the appropriate permissions to perform read and write operations for every request.

Whenever you grant or deny roles to users, Infinispan flushes the ACL cache to ensure it applies user permissions correctly. This means that Infinispan must recalculate cache permissions for all users each time you grant or deny roles. For best performance you should not frequently or repeatedly grant and deny roles in production environments.

- Open your Infinispan configuration for editing.
- Specify the maximum number of entries for the ACL cache with the cache-size attribute.

  Entries in the ACL cache have a cardinality of caches * users. You should set the maximum number of entries to a value that can hold information for all your caches and users. For example, the default size of 1000 is appropriate for deployments with up to 100 caches and 10 users.
- Set the timeout value, in milliseconds, with the cache-timeout attribute.

  If Infinispan does not access an entry in the ACL cache within the timeout period, that entry is evicted. When the user subsequently attempts cache operations, Infinispan recalculates their cache permissions and adds an entry to the ACL cache.

  Specifying a value of 0 for either the cache-size or cache-timeout attribute disables the ACL cache. You should disable the ACL cache only if you disable authorization.
- Save the changes to your configuration.
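The sizing rule in the steps above is simple arithmetic, which the following sketch spells out. AclCacheSizingSketch and requiredEntries() are hypothetical names used only for illustration; the sketch assumes every user may access every cache, which is the worst case for the caches * users cardinality.

```java
import java.time.Duration;

public class AclCacheSizingSketch {
    // Entries in the ACL cache have a cardinality of caches * users.
    // multiplyExact() throws ArithmeticException on overflow instead of wrapping.
    public static long requiredEntries(int caches, int users) {
        return Math.multiplyExact((long) caches, (long) users);
    }

    public static void main(String[] args) {
        // 100 caches and 10 users fit exactly into the default size.
        System.out.println(requiredEntries(100, 10)); // prints 1000

        // A five-minute timeout expressed in milliseconds.
        System.out.println(Duration.ofMinutes(5).toMillis()); // prints 300000
    }
}
```

A deployment with more caches or users than the default allows for would need a correspondingly larger cache-size value.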

ACL cache configuration

<infinispan>
  <cache-container name="acl-cache-configuration">
    <security cache-size="1000"
              cache-timeout="5m">
      <authorization/>
    </security>
  </cache-container>
</infinispan>

{
  "infinispan" : {
    "cache-container" : {
      "name" : "acl-cache-configuration",
      "security" : {
        "cache-size" : "1000",
        "cache-timeout" : "300000",
        "authorization" : {}
      }
    }
  }
}

infinispan:
  cacheContainer:
    name: "acl-cache-configuration"
    security:
      cache-size: "1000"
      cache-timeout: "300000"
      authorization: ~

3.5.1. Flushing ACL caches

You can flush the ACL cache using the GlobalSecurityManager MBean, accessible over JMX.

In this case, flush means that the cache is emptied and the information is loaded again from the external source.

Flushing the ACL cache does not synchronize it cluster-wide.

4. Enabling and configuring Infinispan statistics and JMX monitoring

Infinispan can provide Cache Manager and cache statistics as well as export JMX MBeans.

4.1. Enabling statistics in embedded caches

Configure Infinispan to export statistics for the Cache Manager and embedded caches.

- Open your Infinispan configuration for editing.
- Add the statistics="true" attribute or the .statistics(true) method.
- Save and close your Infinispan configuration.

Embedded cache statistics

<infinispan>
  <cache-container statistics="true">
    <distributed-cache statistics="true"/>
    <replicated-cache statistics="true"/>
  </cache-container>
</infinispan>

// Enable statistics for the Cache Manager.
GlobalConfigurationBuilder global = GlobalConfigurationBuilder.defaultClusteredBuilder();
global.cacheContainer().statistics(true);
DefaultCacheManager cacheManager = new DefaultCacheManager(global.build());

// Enable statistics for a cache.
ConfigurationBuilder builder = new ConfigurationBuilder();
builder.statistics().enable();

4.2. Configuring Infinispan metrics

Infinispan generates metrics that are compatible with any monitoring system.

- Gauges provide values such as the average number of nanoseconds for write operations or JVM uptime.
- Histograms provide details about operation execution times such as read, write, and remove times.

By default, Infinispan generates gauges when you enable statistics but you can also configure it to generate histograms.

|

Infinispan metrics are provided at the |

- You must add Micrometer Core and Micrometer Registry Prometheus JARs to your classpath to export Infinispan metrics for embedded caches.

- Open your Infinispan configuration for editing.
- Add the metrics element or object to the cache container.
- Enable or disable gauges with the gauges attribute or field.
- Enable or disable histograms with the histograms attribute or field.
- Enable or disable the export of metrics in legacy format using the legacy attribute or field.
- Save and close your client configuration.

Metrics configuration

<infinispan>
  <cache-container statistics="true">
    <metrics gauges="true"
             histograms="true" />
  </cache-container>
</infinispan>

{
  "infinispan" : {
    "cache-container" : {
      "statistics" : "true",
      "metrics" : {
        "gauges" : "true",
        "histograms" : "true"
      }
    }
  }
}

infinispan:
  cacheContainer:
    statistics: "true"
    metrics:
      gauges: "true"
      histograms: "true"

GlobalConfiguration globalConfig = new GlobalConfigurationBuilder()
   //Computes and collects statistics for the Cache Manager.
   .statistics().enable()
   //Exports collected statistics as gauge and histogram metrics.
   .metrics().gauges(true).histograms(true)
   .build();

Legacy format

For backwards compatibility, metrics are currently exported in a legacy format by default. Set the legacy setting to false to export metrics in the new format.

<infinispan>
  <cache-container statistics="true">
    <metrics legacy="false" />
  </cache-container>
</infinispan>

{
  "infinispan" : {
    "cache-container" : {
      "statistics" : "true",
      "metrics" : {
        "legacy" : "false"
      }
    }
  }
}

infinispan:
  cacheContainer:
    statistics: "true"
    metrics:
      legacy: "false"

4.3. Registering JMX MBeans

Infinispan can register JMX MBeans that you can use to collect statistics and perform administrative operations.

You must also enable statistics; otherwise Infinispan provides 0 values for all statistic attributes in JMX MBeans.

Use JMX MBeans for collecting statistics only when Infinispan is embedded in applications and not with a remote Infinispan server. When you use JMX MBeans for collecting statistics from a remote Infinispan server, the data received from JMX MBeans might differ from the data received from other APIs such as REST. In such cases the data received from the other APIs is more accurate.

- Open your Infinispan configuration for editing.
- Add the jmx element or object to the cache container and specify true as the value for the enabled attribute or field.
- Add the domain attribute or field and specify the domain where JMX MBeans are exposed, if required.
- Save and close your client configuration.

JMX configuration

<infinispan>
  <cache-container statistics="true">
    <jmx enabled="true"
         domain="example.com"/>
  </cache-container>
</infinispan>

{
  "infinispan" : {
    "cache-container" : {
      "statistics" : "true",
      "jmx" : {
        "enabled" : "true",
        "domain" : "example.com"
      }
    }
  }
}

infinispan:
  cacheContainer:
    statistics: "true"
    jmx:
      enabled: "true"
      domain: "example.com"

GlobalConfiguration global = GlobalConfigurationBuilder.defaultClusteredBuilder()
   .jmx().enable()
   .domain("org.mydomain")
   .build();

4.3.1. Enabling JMX remote ports

Provide unique remote JMX ports to expose Infinispan MBeans through connections in JMXServiceURL format.

You can enable remote JMX ports using one of the following approaches:

- Enable remote JMX ports that require authentication to one of the Infinispan Server security realms.
- Enable remote JMX ports manually using the standard Java management configuration options.

- For remote JMX with authentication, define JMX specific user roles using the default security realm. Users must have controlRole with read/write access or the monitorRole with read-only access to access any JMX resources. Infinispan automatically maps global ADMIN and MONITOR permissions to the JMX controlRole and monitorRole roles.

Start Infinispan Server with a remote JMX port enabled using one of the following ways:

- Enable remote JMX through port 9999.

  bin/server.sh --jmx 9999

  Using remote JMX with SSL disabled is not intended for production environments.
- Pass the following system properties to Infinispan Server at startup.

  bin/server.sh -Dcom.sun.management.jmxremote.port=9999 -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false

  Enabling remote JMX with no authentication or SSL is not secure and not recommended in any environment. Disabling authentication and SSL allows unauthorized users to connect to your server and access the data hosted there.

4.3.2. Infinispan MBeans

Infinispan exposes JMX MBeans that represent manageable resources.

- org.infinispan:type=Cache: Attributes and operations available for cache instances.
- org.infinispan:type=CacheManager: Attributes and operations available for Cache Managers, including Infinispan cache and cluster health statistics.

For a complete list of available JMX MBeans along with descriptions and available operations and attributes, see the Infinispan JMX Components documentation.
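To see which of these MBeans are registered in a running JVM, you can query an MBeanServer with an ObjectName pattern. The sketch below uses only standard javax.management calls; the class name MBeanQuerySketch is hypothetical, and it assumes that the MBeans live in the platform MBeanServer under the org.infinispan domain. If you configure a different JMX domain or a custom MBeanServer, adjust the pattern and the server accordingly.

```java
import java.lang.management.ManagementFactory;
import java.util.Set;
import javax.management.MBeanServer;
import javax.management.MalformedObjectNameException;
import javax.management.ObjectName;

public class MBeanQuerySketch {
    // Returns the names of all MBeans in the platform MBeanServer
    // that match the given ObjectName pattern.
    public static Set<ObjectName> find(String pattern) {
        try {
            MBeanServer server = ManagementFactory.getPlatformMBeanServer();
            return server.queryNames(new ObjectName(pattern), null);
        } catch (MalformedObjectNameException e) {
            throw new IllegalArgumentException("Invalid ObjectName pattern: " + pattern, e);
        }
    }

    public static void main(String[] args) {
        // Assumed domain: org.infinispan. The trailing ",*" matches any
        // additional key properties on the ObjectName.
        for (ObjectName name : find("org.infinispan:type=Cache,*")) {
            System.out.println(name);
        }
    }
}
```

In a JVM where no Cache Manager with JMX enabled is running, the query returns an empty set and the program prints nothing.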

4.3.3. Registering MBeans in custom MBean servers

Infinispan includes an MBeanServerLookup interface that you can use to register MBeans in custom MBeanServer instances.

- Create an implementation of MBeanServerLookup so that the getMBeanServer() method returns the custom MBeanServer instance.
- Configure Infinispan to register JMX MBeans.

- Open your Infinispan configuration for editing.
- Add the mbean-server-lookup attribute or field to the JMX configuration for the Cache Manager.
- Specify the fully qualified name (FQN) of your MBeanServerLookup implementation.
- Save and close your client configuration.

JMX MBean server lookup configuration

<infinispan>
  <cache-container statistics="true">
    <jmx enabled="true"
         domain="example.com"
         mbean-server-lookup="com.example.MyMBeanServerLookup"/>
  </cache-container>
</infinispan>

{
  "infinispan" : {
    "cache-container" : {
      "statistics" : "true",
      "jmx" : {
        "enabled" : "true",
        "domain" : "example.com",
        "mbean-server-lookup" : "com.example.MyMBeanServerLookup"
      }
    }
  }
}

infinispan:
  cacheContainer:
    statistics: "true"
    jmx:
      enabled: "true"
      domain: "example.com"
      mbeanServerLookup: "com.example.MyMBeanServerLookup"

GlobalConfiguration global = GlobalConfigurationBuilder.defaultClusteredBuilder()
   .jmx().enable()
   .domain("org.mydomain")
   .mBeanServerLookup(new com.acme.MyMBeanServerLookup())
   .build();

4.4. Exporting metrics during a state transfer operation

You can export time metrics for clustered caches that Infinispan redistributes across nodes.

A state transfer operation occurs when a clustered cache topology changes, such as a node joining or leaving a cluster. During a state transfer operation, Infinispan exports metrics from each cache, so that you can determine a cache’s status. A state transfer exposes attributes as properties, so that Infinispan can export metrics from each cache.

You cannot perform a state transfer operation in invalidation mode.

Infinispan generates time metrics that are compatible with the REST API and the JMX API.

- Configure Infinispan metrics.
- Enable metrics for your cache type, such as embedded cache or remote cache.
- Initiate a state transfer operation by changing your clustered cache topology.

- Choose one of the following methods:
  - Configure Infinispan to use the REST API to collect metrics.
  - Configure Infinispan to use the JMX API to collect metrics.

4.5. Monitoring the status of cross-site replication

Monitor the site status of your backup locations to detect interruptions in the communication between the sites.

When a remote site status changes to offline, Infinispan stops replicating your data to the backup location.

Your data becomes out of sync and you must fix the inconsistencies before bringing the clusters back online.

Monitoring cross-site events is necessary for early problem detection. Use one of the following monitoring strategies:

- Monitoring cross-site replication with the REST API

- Monitoring cross-site replication with the Prometheus metrics or any other monitoring system

Monitoring cross-site replication with the REST API

Monitor the status of cross-site replication for all caches using the REST endpoint. You can implement a custom script to poll the REST endpoint or use the following example.

- Enable cross-site replication.

- Implement a script to poll the REST endpoint.

  The following example demonstrates how you can use a Python script to poll the site status every five seconds.

#!/usr/bin/python3

import time

import requests
from requests.auth import HTTPDigestAuth


class InfinispanConnection:

    def __init__(self, server: str = 'http://localhost:11222', cache_manager: str = 'default',
                 auth: tuple = ('admin', 'change_me')) -> None:
        super().__init__()
        self.__url = f'{server}/rest/v2/container/x-site/backups/'
        self.__auth = auth
        self.__headers = {
            'accept': 'application/json'
        }

    def get_sites_status(self):
        try:
            rsp = requests.get(self.__url, headers=self.__headers,
                               auth=HTTPDigestAuth(self.__auth[0], self.__auth[1]))
            if rsp.status_code != 200:
                return None
            return rsp.json()
        except (requests.RequestException, ValueError):
            # Treat connection failures and malformed responses as "no status available".
            return None


# Specify credentials for Infinispan user with permission to access the REST endpoint
USERNAME = 'admin'
PASSWORD = 'change_me'

# Set an interval between cross-site status checks
POLL_INTERVAL_SEC = 5

# Provide a list of servers
SERVERS = [
    InfinispanConnection('http://127.0.0.1:11222', auth=(USERNAME, PASSWORD)),
    InfinispanConnection('http://127.0.0.1:12222', auth=(USERNAME, PASSWORD))
]

# Specify the names of remote sites
REMOTE_SITES = [
    'nyc'
]

# Provide a list of caches to monitor
CACHES = [
    'work',
    'sessions'
]


def on_event(site: str, cache: str, old_status: str, new_status: str):
    # TODO implement your handling code here
    print(f'site={site} cache={cache} Status changed {old_status} -> {new_status}')


def __handle_mixed_state(state: dict, site: str, site_status: dict):
    # In a mixed state, the response lists which caches are online for the site.
    if site not in state:
        state[site] = {c: 'online' if c in site_status['online'] else 'offline' for c in CACHES}
        return
    for cache in CACHES:
        __update_cache_state(state, site, cache, 'online' if cache in site_status['online'] else 'offline')


def __handle_online_or_offline_state(state: dict, site: str, new_status: str):
    # All caches share the same status for the site.
    if site not in state:
        state[site] = {c: new_status for c in CACHES}
        return
    for cache in CACHES:
        __update_cache_state(state, site, cache, new_status)


def __update_cache_state(state: dict, site: str, cache: str, new_status: str):
    old_status = state[site].get(cache)
    if old_status != new_status:
        on_event(site, cache, old_status, new_status)
        state[site][cache] = new_status


def update_state(state: dict):
    # Use the first server that returns a valid response.
    rsp = None
    for conn in SERVERS:
        rsp = conn.get_sites_status()
        if rsp:
            break
    if rsp is None:
        print('Unable to fetch site status from any server')
        return

    for site in REMOTE_SITES:
        site_status = rsp.get(site, {})
        new_status = site_status.get('status')
        if new_status == 'mixed':
            __handle_mixed_state(state, site, site_status)
        else:
            __handle_online_or_offline_state(state, site, new_status)


if __name__ == '__main__':
    _state = {}
    while True:
        update_state(_state)
        time.sleep(POLL_INTERVAL_SEC)

When a site status changes from online to offline or vice-versa, the function on_event is invoked.

If you want to use this script, you must specify the following variables:

- USERNAME and PASSWORD: The username and password of an Infinispan user with permission to access the REST endpoint.

- POLL_INTERVAL_SEC: The number of seconds between polls.

- SERVERS: The list of Infinispan Servers at this site. The script only requires a single valid response but the list is provided to allow failover.

- REMOTE_SITES: The list of remote sites to monitor on these servers.

- CACHES: The list of cache names to monitor.

Monitoring cross-site replication with the Prometheus metrics

Prometheus, and other monitoring systems, let you configure alerts to detect when a site status changes to offline.

| Monitoring cross-site latency metrics can help you to discover potential issues. |

-

Enable cross-site replication.

-

Configure Infinispan metrics.

-

Configure alerting rules using the Prometheus metrics format.

-

For the site status, use

1foronlineand0foroffline. -

For the

exprfiled, use the following format:

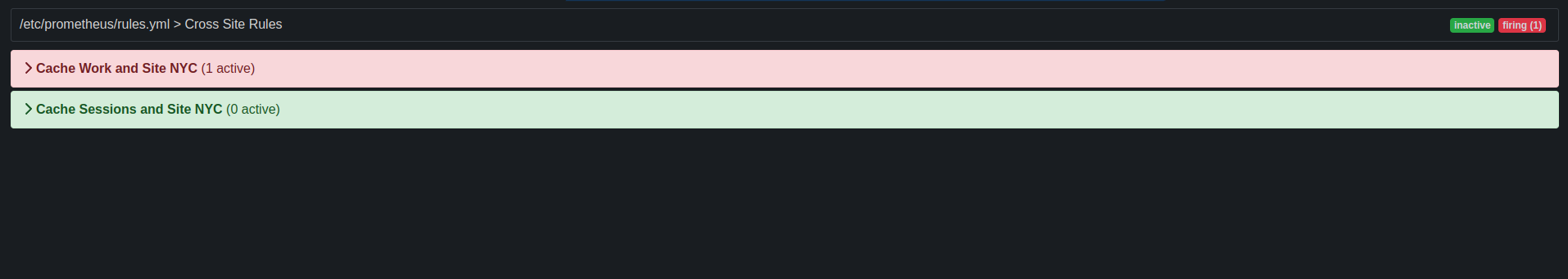

infinispan_x_site_admin_status{cache=\"<cache name>\",site=\"<site name>\"}.In the following example, Prometheus alerts you when the NYC site gets

offlinefor cache namedworkorsessions.groups: - name: Cross Site Rules rules: - alert: Cache Work and Site NYC expr: infinispan_x_site_admin_status{cache=\"Work\",site=\"NYC\"} == 0 - alert: Cache Sessions and Site NYC expr: infinispan_x_site_admin_status{cache=\"Sessions\",site=\"NYC\"} == 0The following image shows an alert that the NYC site is

offlinefor cachework. Figure 1. Prometheus Alert

Figure 1. Prometheus Alert

-

5. Setting up Infinispan cluster transport

Infinispan requires a transport layer so nodes can automatically join and leave clusters. The transport layer also enables Infinispan nodes to replicate or distribute data across the network and perform operations such as re-balancing and state transfer.

5.1. Default JGroups stacks

Infinispan provides default JGroups stack files, default-jgroups-*.xml, in the default-configs directory inside the infinispan-core-16.1.0.Dev02.jar file.

| File name | Stack name | Description |
|---|---|---|
| | | Uses UDP for transport and UDP multicast for discovery. Suitable for larger clusters (over 100 nodes) or if you are using replicated caches or invalidation mode. Minimizes the number of open sockets. |
| | | Uses TCP for transport and the |
| | | Uses TCP for transport and |
| | | Uses TCP for transport and |
| | | Uses TCP for transport and |
| | | Uses TCP for transport and |
| | | Uses |

5.2. Cluster discovery protocols

Infinispan supports different protocols that allow nodes to automatically find each other on the network and form clusters.

There are two types of discovery mechanisms that Infinispan can use:

-

Generic discovery protocols that work on most networks and do not rely on external services.

-

Discovery protocols that rely on external services to store and retrieve topology information for Infinispan clusters.

For instance the DNS_PING protocol performs discovery through DNS server records.

|

Running Infinispan on hosted platforms requires using discovery mechanisms that are adapted to network constraints that individual cloud providers impose. |

5.2.1. PING

PING, or UDPPING, is a generic JGroups discovery mechanism that uses dynamic multicasting with the UDP protocol.

When joining, nodes send PING requests to an IP multicast address to discover other nodes already in the Infinispan cluster. Each node responds to the PING request with a packet that contains the address of the coordinator node (C) and its own address (A). If no nodes respond to the PING request, the joining node becomes the coordinator node in a new cluster.

<PING num_discovery_runs="3"/>

5.2.2. TCPPING

TCPPING is a generic JGroups discovery mechanism that uses a list of static addresses for cluster members.

With TCPPING, you manually specify the IP address or hostname of each node in the Infinispan cluster as part of the JGroups stack, rather than letting nodes discover each other dynamically.
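The static host list uses a host[port] notation, with entries separated by commas. The following Java sketch shows one way to read that notation, for example to validate a value before passing it as the jgroups.tcpping.initial_hosts system property. The InitialHostsParser class and its HostPort record are hypothetical helpers written for this illustration; they are not part of Infinispan or JGroups, and JGroups performs its own parsing.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper for illustration only; not an Infinispan or JGroups class.
final class InitialHostsParser {

    /** Holds one host[port] entry. */
    record HostPort(String host, int port) {}

    // Matches a single entry such as "hostname1[7800]".
    private static final Pattern ENTRY = Pattern.compile("([^\\[\\]\\s,]+)\\[(\\d{1,5})\\]");

    static List<HostPort> parse(String initialHosts) {
        List<HostPort> hosts = new ArrayList<>();
        for (String raw : initialHosts.split(",")) {
            String entry = raw.trim();
            if (entry.isEmpty()) {
                continue; // tolerate empty segments such as a trailing comma
            }
            Matcher m = ENTRY.matcher(entry);
            if (!m.matches()) {
                throw new IllegalArgumentException("Expected host[port] but got: " + entry);
            }
            int port = Integer.parseInt(m.group(2));
            if (port > 65535) {
                throw new IllegalArgumentException("Port out of range: " + entry);
            }
            hosts.add(new HostPort(m.group(1), port));
        }
        return hosts;
    }

    public static void main(String[] args) {
        // Example addresses from the 192.0.2.0/24 documentation range; replace with your own nodes.
        for (HostPort hp : parse("192.0.2.10[7800],192.0.2.11[7800]")) {
            System.out.println(hp.host() + " -> " + hp.port());
        }
    }
}
```

Checking the list up front can surface typos such as host:port before a node starts and fails to find any cluster members.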

<TCP bind_port="7800" />
<TCPPING timeout="3000"
         initial_hosts="${jgroups.tcpping.initial_hosts:hostname1[port1],hostname2[port2]}"
         port_range="0"
         num_initial_members="3"/>

5.2.3. MPING

MPING uses IP multicast to discover the initial membership of Infinispan clusters.

You can use MPING to replace TCPPING discovery with TCP stacks and use multicasting for discovery instead of static lists of initial hosts. However, you can also use MPING with UDP stacks.

<MPING mcast_addr="${jgroups.mcast_addr:239.6.7.8}"
       mcast_port="${jgroups.mcast_port:46655}"
       num_discovery_runs="3"
       ip_ttl="${jgroups.udp.ip_ttl:2}"/>

5.2.4. TCPGOSSIP

Gossip routers provide a centralized location on the network from which your Infinispan cluster can retrieve addresses of other nodes.

You inject the address (IP:PORT) of the Gossip router into Infinispan nodes as follows:

- Pass the address as a system property to the JVM; for example, -DGossipRouterAddress="10.10.2.4[12001]".

- Reference that system property in the JGroups configuration file.

<TCP bind_port="7800" />
<TCPGOSSIP timeout="3000"
           initial_hosts="${GossipRouterAddress}"
           num_initial_members="3" />

5.2.5. JDBC_PING2

JDBC_PING2 uses shared databases to store information about Infinispan clusters. This protocol supports any database that can use a JDBC connection.

Nodes write their IP addresses to the shared database so joining nodes can find the Infinispan cluster on the network. When nodes leave Infinispan clusters, they delete their IP addresses from the shared database.

<JDBC_PING2 connection_url="jdbc:mysql://localhost:3306/database_name"
            connection_username="user"
            connection_password="password"
            connection_driver="com.mysql.jdbc.Driver"/>

| Add the appropriate JDBC driver to the classpath so Infinispan can use JDBC_PING2. |

Using a server datasource for JDBC_PING2 discovery

Add a managed datasource to an Infinispan Server and use it to provide database connections for the cluster transport JDBC_PING2 discovery protocol.

- Install an Infinispan Server cluster.

- Deploy a JDBC driver JAR to your Infinispan Server server/lib directory.

- Create a datasource for your database.

  <server xmlns="urn:infinispan:server:16.2">
    <data-sources>
      <!-- Defines a unique name for the datasource and JNDI name that you reference in JDBC cache store configuration.
           Enables statistics for the datasource, if required. -->
      <data-source name="ds" jndi-name="jdbc/postgres" statistics="true">
        <!-- Specifies the JDBC driver that creates connections. -->
        <connection-factory driver="org.postgresql.Driver"
                            url="jdbc:postgresql://localhost:5432/postgres"
                            username="postgres"
                            password="changeme">
          <!-- Sets optional JDBC driver-specific connection properties. -->
          <connection-property name="name">value</connection-property>
        </connection-factory>
        <!-- Defines connection pool tuning properties. -->
        <connection-pool initial-size="1"
                         max-size="10"
                         min-size="3"
                         background-validation="1s"
                         idle-removal="1m"
                         blocking-timeout="1s"
                         leak-detection="10s"/>
      </data-source>
    </data-sources>
  </server>

- Create a JGroups stack which uses the JDBC_PING2 protocol for discovery.

- Configure cluster transport to use the datasource by specifying the name of the datasource with the server:data-source attribute.

  <infinispan>
    <jgroups>
      <stack name="jdbc" extends="tcp">
        <JDBC_PING2 stack.combine="REPLACE" stack.position="MPING" />
      </stack>
    </jgroups>
    <cache-container>
      <transport stack="jdbc" server:data-source="ds" />
    </cache-container>
  </infinispan>

5.2.6. DNS_PING

JGroups DNS_PING queries DNS servers to discover Infinispan cluster members in Kubernetes environments such as OKD and Red Hat OpenShift.

<dns.DNS_PING dns_query="myservice.myproject.svc.cluster.local" />

- DNS for Services and Pods (Kubernetes documentation for adding DNS entries)

5.2.7. Cloud discovery protocols

Infinispan includes default JGroups stacks that use discovery protocol implementations that are specific to cloud providers.

| Discovery protocol | Default stack file | Artifact | Version |

|---|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

Providing dependencies for cloud discovery protocols

To use aws.S3_PING, GOOGLE_PING2, or azure.AZURE_PING cloud discovery protocols, you need to provide dependent libraries to Infinispan.

- Add the artifact dependencies to your project pom.xml.

You can then configure the cloud discovery protocol as part of a JGroups stack file or with system properties.

5.3. Using the default JGroups stacks

Infinispan uses JGroups protocol stacks so nodes can send each other messages on dedicated cluster channels.

Infinispan provides preconfigured JGroups stacks for UDP and TCP protocols.

You can use these default stacks as a starting point for building custom cluster transport configuration that is optimized for your network requirements.

Do one of the following to use one of the default JGroups stacks:

- Use the stack attribute in your infinispan.xml file.

  <infinispan>
    <cache-container default-cache="replicatedCache">
      <!-- Use the default UDP stack for cluster transport. -->
      <transport cluster="${infinispan.cluster.name}"
                 stack="udp"
                 node-name="${infinispan.node.name:}"/>
    </cache-container>
  </infinispan>

- Use the addProperty() method to set the JGroups stack file:

  GlobalConfiguration globalConfig = new GlobalConfigurationBuilder().transport()
          .defaultTransport()
          .clusterName("qa-cluster")
          //Uses the default-jgroups-udp.xml stack for cluster transport.
          .addProperty("configurationFile", "default-jgroups-udp.xml")
          .build();

Infinispan logs the following message to indicate which stack it uses:

[org.infinispan.CLUSTER] ISPN000078: Starting JGroups channel cluster with stack udp

5.4. Customizing JGroups stacks

Adjust and tune properties to create a cluster transport configuration that works for your network requirements.

Infinispan provides attributes that let you extend the default JGroups stacks for easier configuration. You can inherit properties from the default stacks while combining, removing, and replacing other properties.

- Create a new JGroups stack declaration in your infinispan.xml file.

- Add the extends attribute and specify a JGroups stack to inherit properties from.

- Use the stack.combine attribute to modify properties for protocols configured in the inherited stack.

- Use the stack.position attribute to define the location for your custom stack.

- Specify the stack name as the value for the stack attribute in the transport configuration.

  For example, you might evaluate using a Gossip router and symmetric encryption with the default TCP stack as follows:

  <infinispan>
    <jgroups>
      <!-- Creates a custom JGroups stack named "my-stack". -->
      <!-- Inherits properties from the default TCP stack. -->
      <stack name="my-stack" extends="tcp">
        <!-- Uses TCPGOSSIP as the discovery mechanism instead of MPING -->
        <TCPGOSSIP initial_hosts="${jgroups.tunnel.gossip_router_hosts:localhost[12001]}"
                   stack.combine="REPLACE"
                   stack.position="MPING" />
        <!-- Removes the FD_SOCK2 protocol from the stack. -->
        <FD_SOCK2 stack.combine="REMOVE"/>
        <!-- Modifies the timeout value for the VERIFY_SUSPECT2 protocol. -->
        <VERIFY_SUSPECT2 timeout="2s"/>
        <!-- Adds SYM_ENCRYPT to the stack after VERIFY_SUSPECT2. -->
        <SYM_ENCRYPT sym_algorithm="AES"
                     keystore_name="mykeystore.p12"
                     keystore_type="PKCS12"
                     store_password="changeit"
                     key_password="changeit"
                     alias="myKey"
                     stack.combine="INSERT_AFTER"
                     stack.position="VERIFY_SUSPECT2" />
      </stack>
    </jgroups>
    <cache-container name="default" statistics="true">
      <!-- Uses "my-stack" for cluster transport. -->
      <transport cluster="${infinispan.cluster.name}"
                 stack="my-stack"
                 node-name="${infinispan.node.name:}"/>
    </cache-container>
  </infinispan>

- Check Infinispan logs to ensure it uses the stack.

  [org.infinispan.CLUSTER] ISPN000078: Starting JGroups channel cluster with stack my-stack

5.4.1. Inheritance attributes

When you extend a JGroups stack, inheritance attributes let you adjust protocols and properties in the stack you are extending.

- stack.position specifies protocols to modify.

- stack.combine uses the following values to extend JGroups stacks:

| Value | Description |
|---|---|
| COMBINE | Overrides protocol properties. |
| REPLACE | Replaces protocols. |
| INSERT_AFTER | Adds a protocol into the stack after another protocol. Does not affect the protocol that you specify as the insertion point. Protocols in JGroups stacks affect each other based on their location in the stack. For example, you should put a protocol such as NAKACK2 after the SYM_ENCRYPT or ASYM_ENCRYPT protocol so that NAKACK2 is secured. |
| INSERT_BEFORE | Inserts a protocol into the stack before another protocol. Affects the protocol that you specify as the insertion point. |
| REMOVE | Removes protocols from the stack. |

5.5. Using JGroups system properties

Pass system properties to Infinispan at startup to tune cluster transport.

- Use -D<property-name>=<property-value> arguments to set JGroups system properties as required.

For example, set a custom bind port and IP address as follows:

java -cp ... -Djgroups.bind.port=1234 -Djgroups.bind.address=192.0.2.0

| When you embed Infinispan clusters in clustered WildFly applications, JGroups system properties can clash or override each other. For example, if you do not set a unique bind address for either your Infinispan cluster or your WildFly application, both use the JGroups default property and attempt to form clusters using the same bind address. |
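The stack examples in this guide reference system properties through placeholders of the form ${name:default}, where the text after the colon applies when the property is not set. The following Java sketch illustrates that substitution rule so you can reason about which value a stack ends up with. It is a simplified model written for this illustration, not the code that JGroups or Infinispan actually runs; the PlaceholderDemo class is hypothetical and the fallback of 7800 is only an example value.

```java
import java.util.Properties;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Simplified, hypothetical model of ${name:default} substitution; for illustration only.
final class PlaceholderDemo {

    // Group 1 is the property name, optional group 2 is the default after the colon.
    private static final Pattern PLACEHOLDER =
            Pattern.compile("\\$\\{([^:}]+)(?::([^}]*))?\\}");

    static String resolve(String value, Properties props) {
        Matcher m = PLACEHOLDER.matcher(value);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            // Without a default, leave the placeholder untouched when the property is missing.
            String fallback = m.group(2) != null ? m.group(2) : m.group(0);
            String replacement = props.getProperty(m.group(1), fallback);
            m.appendReplacement(out, Matcher.quoteReplacement(replacement));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        // Run with -Djgroups.bind.port=1234 to see the system property win over the default.
        System.out.println(resolve("bind_port=${jgroups.bind.port:7800}", System.getProperties()));
    }
}
```

In this model a value passed with -D always takes precedence over the default that is written in the stack, which is why two applications that rely on the same defaults in one JVM can end up with identical settings.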

5.5.1. Cluster transport properties

Use the following properties to customize JGroups cluster transport.

| System Property | Description | Default Value | Required/Optional |
|---|---|---|---|
| | Bind address for cluster transport. | | Optional |
| | Bind port for the socket. | | Optional |
| | IP address for multicast when using UDP transport, both discovery and inter-cluster communication. The IP address must be a valid "class D" address that is suitable for IP multicast. | | Optional |
| | Port for the multicast socket when using UDP transport. | | Optional |
| | Time-to-live (TTL) for IP multicast packets when using UDP transport. The value defines the number of network hops a packet can make before it is dropped. | 2 | Optional |
| | Minimum number of threads for the thread pool. | 0 | Optional |
| | Maximum number of threads for the thread pool. | 200 | Optional |
| | Maximum number of milliseconds to wait for join requests to succeed. | 2000 | Optional |
| | Dump threads when the thread pool is full. | | Optional |
| | Offset from | | Optional |
| | Maximum number of bytes in a message. Messages larger than that are fragmented. | 60000 | Optional |
| | Enables JGroups diagnostic probing. | false | Optional |
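The multicast address property in the table above must hold a class D address, which for IPv4 means the range 224.0.0.0 to 239.255.255.255. As a quick sanity check before you start a node, the JDK can tell you whether a literal address falls in the multicast range. The MulticastAddressCheck class below is a hypothetical helper for this illustration and is not part of Infinispan or JGroups.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

// Hypothetical helper for illustration only.
final class MulticastAddressCheck {

    /**
     * Returns true if the given IP literal is a multicast address.
     * Pass literal addresses only: a hostname would trigger a DNS lookup.
     */
    static boolean isMulticast(String ipLiteral) {
        try {
            return InetAddress.getByName(ipLiteral).isMulticastAddress();
        } catch (UnknownHostException e) {
            return false; // not a valid address at all
        }
    }

    public static void main(String[] args) {
        // 239.6.7.8 is the MPING default used earlier in this guide; 192.0.2.0 is a unicast address.
        for (String candidate : new String[] {"239.6.7.8", "192.0.2.0"}) {
            System.out.println(candidate + " multicast? " + isMulticast(candidate));
        }
    }
}
```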

5.5.2. System properties for cloud discovery protocols

Use the following properties to configure JGroups discovery protocols for hosted platforms.

Amazon EC2

System properties for configuring aws.S3_PING.

| System Property | Description | Default Value | Required/Optional |
|---|---|---|---|
| | Name of the Amazon S3 region. | No default value. | Optional |
| | Name of the Amazon S3 bucket. The name must exist and be unique. | No default value. | Optional |

Google Cloud Platform

System properties for configuring GOOGLE_PING2.

| System Property | Description | Default Value | Required/Optional |
|---|---|---|---|
| | Name of the Google Compute Engine bucket. The name must exist and be unique. | No default value. | Required |

Azure

System properties for configuring azure.AZURE_PING.

| System Property | Description | Default Value | Required/Optional |
|---|---|---|---|
| | Name of the Azure storage account. The name must exist and be unique. | No default value. | Required |
| | Name of the Azure storage access key. | No default value. | Required |
| | Valid DNS name of the container that stores ping information. | No default value. | Required |

5.6. Using inline JGroups stacks

You can insert complete JGroups stack definitions into infinispan.xml files.

- Embed a custom JGroups stack declaration in your infinispan.xml file.

  <infinispan>
    <!-- Contains one or more JGroups stack definitions. -->
    <jgroups>
      <!-- Defines a custom JGroups stack named "prod". -->
      <stack name="prod">
        <TCP bind_port="7800" port_range="30" recv_buf_size="20000000" send_buf_size="640000"/>
        <RED/>
        <MPING break_on_coord_rsp="true"
               mcast_addr="${jgroups.mping.mcast_addr:239.2.4.6}"
               mcast_port="${jgroups.mping.mcast_port:43366}"
               num_discovery_runs="3"
               ip_ttl="${jgroups.udp.ip_ttl:2}"/>
        <MERGE3 />
        <FD_SOCK2 />
        <FD_ALL3 timeout="3000" interval="1000" timeout_check_interval="1000" />
        <VERIFY_SUSPECT2 timeout="1s" />
        <pbcast.NAKACK2 use_mcast_xmit="false"
                        xmit_interval="200ms"
                        xmit_table_num_rows="50"
                        xmit_table_msgs_per_row="1024"
                        xmit_table_max_compaction_time="30s" />
        <UNICAST3 conn_close_timeout="5s"
                  xmit_interval="200ms"
                  xmit_table_num_rows="50"
                  xmit_table_msgs_per_row="1024"
                  xmit_table_max_compaction_time="30s" />
        <pbcast.STABLE desired_avg_gossip="2s" max_bytes="1M" />
        <pbcast.GMS print_local_addr="false" join_timeout="${jgroups.join_timeout:2s}" />
        <UFC max_credits="4m" min_threshold="0.40" />
        <MFC max_credits="4m" min_threshold="0.40" />
        <FRAG4 />
      </stack>
    </jgroups>
    <cache-container default-cache="replicatedCache">
      <!-- Uses "prod" for cluster transport. -->
      <transport cluster="${infinispan.cluster.name}"
                 stack="prod"
                 node-name="${infinispan.node.name:}"/>
    </cache-container>
  </infinispan>

5.7. Using external JGroups stacks

Reference external files that define custom JGroups stacks in infinispan.xml files.

- Put custom JGroups stack files on the application classpath.

  Alternatively you can specify an absolute path when you declare the external stack file.

- Reference the external stack file with the stack-file element.

  <infinispan>
    <jgroups>
      <!-- Creates a "prod-tcp" stack that references an external file. -->
      <stack-file name="prod-tcp" path="prod-jgroups-tcp.xml"/>
    </jgroups>
    <cache-container default-cache="replicatedCache">
      <!-- Use the "prod-tcp" stack for cluster transport. -->
      <transport stack="prod-tcp" />
      <replicated-cache name="replicatedCache"/>
    </cache-container>
    <!-- Cache configuration goes here. -->
  </infinispan>

You can also use the addProperty() method in the TransportConfigurationBuilder class to specify a custom JGroups stack file as follows:

GlobalConfiguration globalConfig = new GlobalConfigurationBuilder().transport()
        .defaultTransport()
        .clusterName("prod-cluster")
        //Uses a custom JGroups stack for cluster transport.
        .addProperty("configurationFile", "my-jgroups-udp.xml")
        .build();

In this example, my-jgroups-udp.xml references a UDP stack with custom properties such as the following:

<config xmlns="urn:org:jgroups"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="urn:org:jgroups http://www.jgroups.org/schema/jgroups-5.5.xsd">
    <UDP bind_addr="${jgroups.bind_addr:127.0.0.1}"
         mcast_addr="${jgroups.udp.mcast_addr:239.0.2.0}"
         mcast_port="${jgroups.udp.mcast_port:46655}"
         tos="8"
         ucast_recv_buf_size="20000000"
         ucast_send_buf_size="640000"
         mcast_recv_buf_size="25000000"
         mcast_send_buf_size="640000"
         bundler.max_size="64k"
         ip_ttl="${jgroups.udp.ip_ttl:2}"
         diag.enabled="false"
         thread_naming_pattern="pl"
         thread_pool.enabled="true"
         thread_pool.min_threads="2"
         thread_pool.max_threads="30"
         thread_pool.keep_alive_time="5s" />
    <!-- Other JGroups stack configuration goes here. -->
</config>

5.8. Using custom JChannels

Construct custom JGroups JChannels as in the following example:

JChannel jchannel = new JChannel();
// Configure the jchannel as needed.

ConfigurationBuilderHolder holder = new ConfigurationBuilderHolder();
holder.getGlobalConfigurationBuilder()
      .transport().transport(new JGroupsTransport(jchannel));
// Further configuration as needed.

DefaultCacheManager cacheManager = new DefaultCacheManager(holder);

| Infinispan cannot use custom JChannels that are already connected. |

5.9. Encrypting cluster transport

Secure cluster transport so that nodes communicate with encrypted messages. You can also configure Infinispan clusters to perform certificate authentication so that only nodes with valid identities can join.

5.9.1. JGroups encryption protocols

To secure cluster traffic, you can configure Infinispan nodes to encrypt JGroups message payloads with secret keys.

Infinispan nodes can obtain secret keys from either:

- The coordinator node (asymmetric encryption).

- A shared keystore (symmetric encryption).

You configure asymmetric encryption by adding the ASYM_ENCRYPT protocol to a JGroups stack in your Infinispan configuration.

This allows Infinispan clusters to generate and distribute secret keys.

|

When using asymmetric encryption, you should also provide keystores so that nodes can perform certificate authentication and securely exchange secret keys. This protects your cluster from man-in-the-middle (MitM) attacks. |

Asymmetric encryption secures cluster traffic as follows:

- The first node in the Infinispan cluster, the coordinator node, generates a secret key.

- A joining node performs certificate authentication with the coordinator to mutually verify identity.

- The joining node requests the secret key from the coordinator node. That request includes the public key for the joining node.

- The coordinator node encrypts the secret key with the public key and returns it to the joining node.

- The joining node decrypts and installs the secret key.

- The node joins the cluster, encrypting and decrypting messages with the secret key.
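The key distribution steps above follow a common key-wrapping pattern, which the following Java sketch reproduces with the JDK cryptography APIs. It is a conceptual illustration only: it does not use JGroups, it omits the certificate authentication step, and the algorithm choices (a 128-bit AES secret key and RSA with OAEP padding for wrapping) are assumptions made for this example rather than a description of what ASYM_ENCRYPT does internally. The KeyExchangeSketch class is hypothetical.

```java
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.PrivateKey;
import java.security.PublicKey;
import java.util.Arrays;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;

// Conceptual sketch of the key distribution steps; not JGroups code.
final class KeyExchangeSketch {

    // Assumed wrapping transformation for this example.
    private static final String WRAP = "RSA/ECB/OAEPWithSHA-256AndMGF1Padding";

    /** Coordinator: generates the shared secret key. */
    static SecretKey newSecretKey() {
        try {
            KeyGenerator gen = KeyGenerator.getInstance("AES");
            gen.init(128);
            return gen.generateKey();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Joining node: generates the key pair whose public key it sends with the request. */
    static KeyPair newKeyPair() {
        try {
            KeyPairGenerator gen = KeyPairGenerator.getInstance("RSA");
            gen.initialize(2048);
            return gen.generateKeyPair();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Coordinator: encrypts the secret key with the joining node's public key. */
    static byte[] wrap(SecretKey secret, PublicKey joinerPublicKey) {
        try {
            Cipher cipher = Cipher.getInstance(WRAP);
            cipher.init(Cipher.WRAP_MODE, joinerPublicKey);
            return cipher.wrap(secret);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Joining node: decrypts the secret key with its private key before installing it. */
    static SecretKey unwrap(byte[] wrapped, PrivateKey joinerPrivateKey) {
        try {
            Cipher cipher = Cipher.getInstance(WRAP);
            cipher.init(Cipher.UNWRAP_MODE, joinerPrivateKey);
            return (SecretKey) cipher.unwrap(wrapped, "AES", Cipher.SECRET_KEY);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        SecretKey clusterKey = newSecretKey();
        KeyPair joiner = newKeyPair();
        byte[] onTheWire = wrap(clusterKey, joiner.getPublic());
        SecretKey installed = unwrap(onTheWire, joiner.getPrivate());
        System.out.println("Keys match: " + Arrays.equals(clusterKey.getEncoded(), installed.getEncoded()));
    }
}
```

Only the wrapped bytes travel over the network in this model, so an eavesdropper without the joining node's private key cannot recover the secret key. Without certificate authentication, however, nothing proves who owns the public key, which is why the guide recommends providing keystores as well.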

You configure symmetric encryption by adding the SYM_ENCRYPT protocol to a JGroups stack in your Infinispan configuration.

This allows Infinispan clusters to obtain secret keys from keystores that you provide.

- Nodes install the secret key from a keystore on the Infinispan classpath at startup.

- Nodes join clusters, encrypting and decrypting messages with the secret key.

ASYM_ENCRYPT with certificate authentication provides an additional layer of encryption in comparison with SYM_ENCRYPT.

You provide keystores that encrypt the requests to coordinator nodes for the secret key.

Infinispan automatically generates that secret key and handles cluster traffic, while letting you specify when to generate secret keys.

For example, you can configure clusters to generate new secret keys when nodes leave.

This ensures that nodes cannot bypass certificate authentication and join with old keys.

SYM_ENCRYPT, on the other hand, is faster than ASYM_ENCRYPT because nodes do not need to exchange keys with the cluster coordinator.

A potential drawback to SYM_ENCRYPT is that there is no configuration to automatically generate new secret keys when cluster membership changes.

Users are responsible for generating and distributing the secret keys that nodes use to encrypt cluster traffic.

5.9.2. Securing cluster transport with asymmetric encryption

Configure Infinispan clusters to generate and distribute secret keys that encrypt JGroups messages.

- Create a keystore with certificate chains that enables Infinispan to verify node identity.

- Place the keystore on the classpath for each node in the cluster.

  For Infinispan Server, you put the keystore in the $ISPN_HOME directory.

- Add the SSL_KEY_EXCHANGE and ASYM_ENCRYPT protocols to a JGroups stack in your Infinispan configuration, as in the following example:

  <infinispan>
    <jgroups>
      <!-- Creates a secure JGroups stack named "encrypt-tcp" that extends the default TCP stack. -->
      <stack name="encrypt-tcp" extends="tcp">
        <!-- Adds a keystore that nodes use to perform certificate authentication. -->
        <!-- Uses the stack.combine and stack.position attributes to insert SSL_KEY_EXCHANGE into the default TCP stack after VERIFY_SUSPECT2. -->
        <SSL_KEY_EXCHANGE keystore_name="mykeystore.jks"
                          keystore_password="changeit"
                          stack.combine="INSERT_AFTER"
                          stack.position="VERIFY_SUSPECT2"/>
        <!-- Configures ASYM_ENCRYPT -->
        <!-- Uses the stack.combine and stack.position attributes to insert ASYM_ENCRYPT into the default TCP stack before pbcast.NAKACK2. -->
        <!-- The use_external_key_exchange = "true" attribute configures nodes to use the `SSL_KEY_EXCHANGE` protocol for certificate authentication. -->
        <ASYM_ENCRYPT asym_keylength="2048"
                      asym_algorithm="RSA"
                      change_key_on_coord_leave = "false"
                      change_key_on_leave = "false"
                      use_external_key_exchange = "true"
                      stack.combine="INSERT_BEFORE"
                      stack.position="pbcast.NAKACK2"/>
      </stack>
    </jgroups>
    <cache-container name="default" statistics="true">
      <!-- Configures the cluster to use the JGroups stack. -->
      <transport cluster="${infinispan.cluster.name}"
                 stack="encrypt-tcp"
                 node-name="${infinispan.node.name:}"/>
    </cache-container>
  </infinispan>

When you start your Infinispan cluster, the following log message indicates that the cluster is using the secure JGroups stack:

[org.infinispan.CLUSTER] ISPN000078: Starting JGroups channel cluster with stack <encrypted_stack_name>

Infinispan nodes can join the cluster only if they use ASYM_ENCRYPT and can obtain the secret key from the coordinator node.

Otherwise the following message is written to Infinispan logs:

[org.jgroups.protocols.ASYM_ENCRYPT] <hostname>: received message without encrypt header from <hostname>; dropping it

5.9.3. Securing cluster transport with symmetric encryption

Configure Infinispan clusters to encrypt JGroups messages with secret keys from keystores that you provide.

- Create a keystore that contains a secret key.

- Place the keystore on the classpath for each node in the cluster.

  For Infinispan Server, you put the keystore in the $ISPN_HOME directory.

- Add the SYM_ENCRYPT protocol to a JGroups stack in your Infinispan configuration.

<infinispan>
  <jgroups>
    <!-- Creates a secure JGroups stack named "encrypt-tcp" that extends the default TCP stack. -->
    <stack name="encrypt-tcp" extends="tcp">
      <!-- Adds a keystore from which nodes obtain secret keys. -->
      <!-- Uses the stack.combine and stack.position attributes to insert SYM_ENCRYPT into the default TCP stack after VERIFY_SUSPECT2. -->
      <SYM_ENCRYPT keystore_name="myKeystore.p12"
                   keystore_type="PKCS12"
                   store_password="changeit"
                   key_password="changeit"
                   alias="myKey"
                   stack.combine="INSERT_AFTER"
                   stack.position="VERIFY_SUSPECT2"/>
    </stack>
  </jgroups>
  <cache-container name="default" statistics="true">
    <!-- Configures the cluster to use the JGroups stack. -->
    <transport cluster="${infinispan.cluster.name}"
               stack="encrypt-tcp"
               node-name="${infinispan.node.name:}"/>
  </cache-container>
</infinispan>

When you start your Infinispan cluster, the following log message indicates that the cluster is using the secure JGroups stack:

[org.infinispan.CLUSTER] ISPN000078: Starting JGroups channel cluster with stack <encrypted_stack_name>

Infinispan nodes can join the cluster only if they use SYM_ENCRYPT and can obtain the secret key from the shared keystore.

Otherwise the following message is written to Infinispan logs:

[org.jgroups.protocols.SYM_ENCRYPT] <hostname>: received message without encrypt header from <hostname>; dropping it
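For the first step of the procedure above, creating a keystore that contains a secret key, one possible approach is to use the JDK KeyStore API. The following sketch generates an AES key and stores it in a PKCS12 keystore under the alias and password used in the example configuration. The SecretKeystoreSketch class is hypothetical, and the 128-bit key size is an assumption for this illustration; check the requirements of your JGroups version before using such a keystore in production.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.security.GeneralSecurityException;
import java.security.KeyStore;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;

// Hypothetical helper for illustration only.
final class SecretKeystoreSketch {

    /** Builds a PKCS12 keystore holding one freshly generated AES key and returns its bytes. */
    static byte[] createKeystore(String alias, char[] password) {
        try {
            KeyGenerator gen = KeyGenerator.getInstance("AES");
            gen.init(128); // assumed key size for this example
            SecretKey key = gen.generateKey();

            KeyStore keystore = KeyStore.getInstance("PKCS12");
            keystore.load(null, null); // start from an empty keystore
            keystore.setEntry(alias, new KeyStore.SecretKeyEntry(key),
                    new KeyStore.PasswordProtection(password));

            ByteArrayOutputStream out = new ByteArrayOutputStream();
            keystore.store(out, password);
            return out.toByteArray();
        } catch (GeneralSecurityException | IOException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Reads the secret key back, for example to verify the keystore before distributing it. */
    static SecretKey readKey(byte[] keystoreBytes, String alias, char[] password) {
        try {
            KeyStore keystore = KeyStore.getInstance("PKCS12");
            keystore.load(new ByteArrayInputStream(keystoreBytes), password);
            return (SecretKey) keystore.getKey(alias, password);
        } catch (GeneralSecurityException | IOException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) throws IOException {
        // File name, alias, and password mirror the example configuration; change them for real use.
        char[] password = "changeit".toCharArray();
        Files.write(Path.of("myKeystore.p12"), createKeystore("myKey", password));
    }
}
```

Every node must receive the same keystore file, because with symmetric encryption you are responsible for generating and distributing the secret key.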

5.10. TCP and UDP ports for cluster traffic

Infinispan uses the following ports for cluster transport messages:

| Default Port | Protocol | Description |
|---|---|---|
| | TCP/UDP | JGroups cluster bind port |
| | UDP | JGroups multicast |
| | TCP | Failure detection is provided by |

Cross-site replication

Infinispan uses the following ports for the JGroups RELAY2 protocol:

- 7900: For Infinispan clusters running on Kubernetes.

- 7801: For other deployments.

5.11. Virtual Threads Support

Infinispan supports virtual threads, which can significantly improve application responsiveness and scalability under high concurrency. By default, they are enabled if you are running on JDK 21 or higher.

| On systems with JDK versions prior to 24 and low CPU counts (2 or fewer), Infinispan might experience thread pinning issues when using virtual threads. Thread pinning is a situation where virtual threads are unexpectedly bound to a limited number of OS threads, potentially leading to performance degradation or system freezes. To work around this problem, virtual threads can be disabled as described in the procedure below, or the virtual thread scheduler parallelism may be increased with the Java option |

6. Clustered Locks

Clustered locks are data structures that are distributed and shared across nodes in an Infinispan cluster. Clustered locks allow you to run code that is synchronized between nodes.

6.1. Lock API

Infinispan provides a ClusteredLock API that lets you concurrently execute code on a cluster when using Infinispan in embedded mode.

The API consists of the following:

- ClusteredLock exposes methods to implement clustered locks.

- ClusteredLockManager exposes methods to define, configure, retrieve, and remove clustered locks.

- EmbeddedClusteredLockManagerFactory initializes ClusteredLockManager implementations.

Infinispan supports NODE ownership so that all nodes in a cluster can use a lock.

Infinispan clustered locks are non-reentrant so any node in the cluster can acquire a lock but only the node that creates the lock can release it.

If two consecutive lock calls are sent for the same owner, the first call acquires the lock if it is available and the second call is blocked.
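The non-reentrant behavior described above can be illustrated with a small JDK-only analogy. This sketch does not use the Infinispan ClusteredLock API: `NonReentrantLockDemo` is a hypothetical class built on `java.util.concurrent.Semaphore`, and it only models the rule that a second acquisition attempt cannot succeed until the lock is released. Unlike a clustered lock, a semaphore does not track which owner is allowed to release it.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;

// JDK-only analogy; NonReentrantLockDemo is a hypothetical class,
// not part of the Infinispan ClusteredLock API.
final class NonReentrantLockDemo {

    // One permit: once it is taken, nobody (including the current holder)
    // can acquire it again until it is released.
    private final Semaphore permit = new Semaphore(1);

    // Waits up to the timeout for the permit; returns false if the wait times out.
    boolean tryLock(long time, TimeUnit unit) {
        try {
            return permit.tryAcquire(time, unit);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    // Returns the permit so that the next tryLock call can succeed.
    void unlock() {
        permit.release();
    }

    public static void main(String[] args) {
        NonReentrantLockDemo lock = new NonReentrantLockDemo();
        boolean first = lock.tryLock(100, TimeUnit.MILLISECONDS);  // lock is free
        boolean second = lock.tryLock(100, TimeUnit.MILLISECONDS); // same caller, still held
        lock.unlock();
        boolean third = lock.tryLock(100, TimeUnit.MILLISECONDS);  // free again
        System.out.println(first + " " + second + " " + third);
    }
}
```

In this analogy the second attempt waits for the timeout and then fails, which corresponds to the second of two consecutive lock calls being blocked while the first one holds the lock.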

6.2. Using Clustered Locks

Learn how to use clustered locks with Infinispan embedded in your application.

- Add the infinispan-clustered-lock dependency to your pom.xml:

<dependency>
  <groupId>org.infinispan</groupId>
  <artifactId>infinispan-clustered-lock</artifactId>
</dependency>

- Initialize the ClusteredLockManager interface from a Cache Manager. This interface is the entry point for defining, retrieving, and removing clustered locks.

- Give a unique name for each clustered lock.

- Acquire locks with the lock.tryLock(1, TimeUnit.SECONDS) method.

// Set up a clustered Cache Manager.

GlobalConfigurationBuilder global = GlobalConfigurationBuilder.defaultClusteredBuilder();

// Configure the cache mode, in this case it is distributed and synchronous.

ConfigurationBuilder builder = new ConfigurationBuilder();

builder.clustering().cacheMode(CacheMode.DIST_SYNC);

// Initialize a new default Cache Manager.

DefaultCacheManager cm = new DefaultCacheManager(global.build(), builder.build());

// Initialize a Clustered Lock Manager.

ClusteredLockManager clm1 = EmbeddedClusteredLockManagerFactory.from(cm);

// Define a clustered lock named 'lock'.

clm1.defineLock("lock");

// Get a lock from each node in the cluster.

ClusteredLock lock = clm1.get("lock");

AtomicInteger counter = new AtomicInteger(0);

// Acquire the lock as follows.

// Each 'lock.tryLock(1, TimeUnit.SECONDS)' method attempts to acquire the lock.

// If the lock is not available, the method waits for the timeout period to elapse. When the lock is acquired, other calls to acquire the lock are blocked until the lock is released.

CompletableFuture<Boolean> call1 = lock.tryLock(1, TimeUnit.SECONDS).whenComplete((r, ex) -> {

if (r) {

System.out.println("lock is acquired by the call 1");

lock.unlock().whenComplete((nil, ex2) -> {

System.out.println("lock is released by the call 1");

counter.incrementAndGet();

});

}

});

CompletableFuture<Boolean> call2 = lock.tryLock(1, TimeUnit.SECONDS).whenComplete((r, ex) -> {

if (r) {

System.out.println("lock is acquired by the call 2");

lock.unlock().whenComplete((nil, ex2) -> {

System.out.println("lock is released by the call 2");

counter.incrementAndGet();

});

}

});

CompletableFuture<Boolean> call3 = lock.tryLock(1, TimeUnit.SECONDS).whenComplete((r, ex) -> {

if (r) {

System.out.println("lock is acquired by the call 3");

lock.unlock().whenComplete((nil, ex2) -> {

System.out.println("lock is released by the call 3");

counter.incrementAndGet();

});

}

});

CompletableFuture.allOf(call1, call2, call3).whenComplete((r, ex) -> {

// Print the value of the counter.

System.out.println("Value of the counter is " + counter.get());

// Stop the Cache Manager.

cm.stop();

});

6.3. Configuring Internal Caches for Locks

Clustered Lock Managers include an internal cache that stores lock state. You can configure the internal cache either declaratively or programmatically.

- Define the number of nodes in the cluster that store the state of clustered locks. The default value is -1, which replicates the value to all nodes.

- Specify one of the following values for the cache reliability, which controls how clustered locks behave when clusters split into partitions or multiple nodes leave:

  - AVAILABLE: Nodes in any partition can concurrently operate on locks.

  - CONSISTENT: Only nodes that belong to the majority partition can operate on locks. This is the default value.

Programmatic configuration

import org.infinispan.lock.configuration.ClusteredLockManagerConfiguration;
import org.infinispan.lock.configuration.ClusteredLockManagerConfigurationBuilder;
import org.infinispan.lock.configuration.Reliability;
...
GlobalConfigurationBuilder global = GlobalConfigurationBuilder.defaultClusteredBuilder();
final ClusteredLockManagerConfiguration config = global.addModule(ClusteredLockManagerConfigurationBuilder.class)
      .numOwner(2)
      .reliability(Reliability.AVAILABLE)
      .create();
DefaultCacheManager cm = new DefaultCacheManager(global.build());
ClusteredLockManager clm1 = EmbeddedClusteredLockManagerFactory.from(cm);
clm1.defineLock("lock");

Declarative configuration

<infinispan
      xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
      xsi:schemaLocation="urn:infinispan:config:16.2 https://infinispan.org/schemas/infinispan-config-16.2.xsd"
      xmlns="urn:infinispan:config:16.2">
   <cache-container default-cache="default">
      <transport/>
      <local-cache name="default">
         <locking concurrency-level="100" acquire-timeout="1s"/>
      </local-cache>
      <clustered-locks xmlns="urn:infinispan:config:clustered-locks:16.2"
                       num-owners="3"
                       reliability="AVAILABLE">
         <clustered-lock name="lock1" />
         <clustered-lock name="lock2" />
      </clustered-locks>
   </cache-container>
   <!-- Cache configuration goes here. -->
</infinispan>

7. Executing code in the grid

The main benefit of a cache is the ability to look up a value by its key very quickly, even across machines. In fact, this alone is probably the reason many users adopt Infinispan. However, Infinispan can provide many more benefits that aren’t immediately apparent. Because Infinispan usually runs in a cluster of machines, it also includes features that help you use the entire cluster to perform your workload.

7.1. Cluster Executor

Since you have a group of machines, it makes sense to leverage their combined computing power by executing code on all of them. The Cache Manager comes with a utility that allows you to execute arbitrary code in the cluster. Note that this feature does not require a cache. You can retrieve this Cluster Executor by calling executor() on the EmbeddedCacheManager. The executor is retrievable in both clustered and non-clustered configurations.

| The ClusterExecutor is specifically designed for executing code where the code is not reliant upon the data in a cache and is used instead as a way to help users to execute code easily in the cluster. |

This manager was built specifically around Java’s streaming API, so all methods take a functional interface as an argument. Because these arguments are sent to other nodes, they need to be serializable. To ensure that lambdas are immediately Serializable, the argument types implement both Serializable and the real argument type (that is, Runnable or Function). The Java compiler picks the most specific type when determining which overloaded method to invoke, so your lambdas are always serializable. It is also possible to use ProtoStream marshalling to reduce message size further.
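The overload trick described above can be reproduced with the JDK alone. The following is a minimal sketch, not Infinispan code: `SerializableLambdaDemo`, its nested `SerializableRunnable` interface, and the `submit` and `roundTrip` methods are hypothetical names chosen for illustration. It shows that a lambda passed to an overloaded method binds to the more specific, serializable parameter type and can then survive a round trip through Java serialization.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;

// Hypothetical names for illustration; these are not Infinispan classes.
final class SerializableLambdaDemo {

    // Combines the "real" argument type (Runnable) with Serializable.
    interface SerializableRunnable extends Runnable, Serializable {}

    // Overload that accepts any Runnable.
    static String submit(Runnable task) {
        return "plain";
    }

    // More specific overload: the compiler prefers it for lambdas,
    // so the lambda is compiled as a serializable lambda.
    static String submit(SerializableRunnable task) {
        return "serializable";
    }

    // Round-trips a task through Java serialization, as if it were sent to another node.
    static Runnable roundTrip(SerializableRunnable task) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(task);
            }
            try (ObjectInputStream in =
                     new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                return (Runnable) in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException("Serialization round trip failed", e);
        }
    }

    public static void main(String[] args) {
        // Both overloads are applicable; the SerializableRunnable one is more specific.
        System.out.println(submit(() -> {}));

        // The deserialized copy is still runnable.
        Runnable copy = roundTrip(() -> System.out.println("ran after deserialization"));
        copy.run();
    }
}
```

Because `SerializableRunnable` is a subtype of `Runnable`, overload resolution selects the second `submit` method for a lambda argument, so callers get serializable lambdas without writing explicit casts.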

By default, the manager submits a given command to all nodes in the cluster, including the node from which it was submitted. You can control which nodes execute the task by using the filterTargets methods, as explained in the following section.

7.1.1. Filtering execution nodes

You can limit the nodes on which the command runs. For example, you might want to run a computation only on machines in the same rack, or perform an operation once in the local site and again on a different site. A cluster executor can limit the nodes it sends requests to at the scope of the same or a different machine, rack, or site.

EmbeddedCacheManager manager = ...;

manager.executor().filterTargets(ClusterExecutionPolicy.SAME_RACK).submit(...)

To use this topology-based filtering you must enable topology aware consistent hashing through Server Hinting.
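To make the scoping idea concrete, the following JDK-only sketch models how a topology scope might narrow a list of candidate nodes. Everything in it is hypothetical: `TopologyFilterDemo`, its `Policy` enum, and its `Node` class are illustrative stand-ins, not Infinispan types, and the sketch assumes that a narrower scope implies the wider one (same rack implies same site).

```java
import java.util.List;
import java.util.Objects;
import java.util.stream.Collectors;

// Hypothetical model for illustration; these are NOT Infinispan classes.
final class TopologyFilterDemo {

    // Hypothetical scopes that mirror the levels described above.
    enum Policy { ALL, SAME_MACHINE, SAME_RACK, SAME_SITE }

    // Hypothetical node description that carries topology hints.
    static final class Node {
        final String name;
        final String site;
        final String rack;
        final String machine;

        Node(String name, String site, String rack, String machine) {
            this.name = name;
            this.site = site;
            this.rack = rack;
            this.machine = machine;
        }
    }

    // Assumption: a narrower scope implies the wider one.
    static boolean matches(Policy policy, Node local, Node other) {
        boolean sameSite = Objects.equals(local.site, other.site);
        boolean sameRack = sameSite && Objects.equals(local.rack, other.rack);
        boolean sameMachine = sameRack && Objects.equals(local.machine, other.machine);
        switch (policy) {
            case SAME_SITE: return sameSite;
            case SAME_RACK: return sameRack;
            case SAME_MACHINE: return sameMachine;
            default: return true; // ALL: no topology restriction
        }
    }

    // Returns the names of the nodes that fall inside the requested scope.
    static List<String> targets(Policy policy, Node local, List<Node> cluster) {
        return cluster.stream()
                .filter(node -> matches(policy, local, node))
                .map(node -> node.name)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        Node a = new Node("a", "site1", "rack1", "m1");
        Node b = new Node("b", "site1", "rack1", "m2");
        Node c = new Node("c", "site1", "rack2", "m3");
        Node d = new Node("d", "site2", "rack1", "m4");
        List<Node> cluster = List.of(a, b, c, d);

        System.out.println(targets(Policy.SAME_RACK, a, cluster));
        System.out.println(targets(Policy.SAME_SITE, a, cluster));
    }
}
```

In this model, node `d` shares a rack name with `a` but sits in a different site, so it is excluded from the same-rack scope; that is the kind of distinction topology hints are meant to capture.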

You can also filter using a Predicate based on the Address of the node, which lets you choose target nodes by any means. You can optionally combine the predicate with the topology-based filtering in the previous code snippet for even finer control over where your code is executed within the cluster.

EmbeddedCacheManager manager = ...;

// Just filter

manager.executor().filterTargets(a -> a.equals(..)).submit(...)

// Filter only those in the desired topology

manager.executor().filterTargets(ClusterExecutionPolicy.SAME_SITE, a -> a.equals(..)).submit(...)

7.1.2. Timeout