Monday, 12 March 2012

JDK 8 backported ConcurrentHashMaps in Infinispan

Doug Lea and the folks on the concurrency-interest group have been hard at work on an update of JSR 166 (concurrency utilities) for Java 8 - called JSR 166e. These include some pretty impressive changes in a building-block we’ve all come to rely pretty heavily on, the ConcurrentHashMap.

One of the big drawbacks of the ConcurrentHashMap, ever since it was introduced in Java 5, has been its memory footprint. It is kinda bulky, especially when compared to a regular HashMap: about 1.6 KB in memory versus about 100 bytes! Yes, these figures are for empty maps.

In Java 8, one of the improvements in the ConcurrentHashMap has been memory footprint - now closer to a regular HashMap. In addition to that, the new Java 8 CHM performs better under concurrent load when compared to its original form. See this discussion and comments in the proposed ConcurrentHashMapV8 sources for more details.

So, Infinispan makes pretty heavy use of ConcurrentHashMaps internally. One change in the recently released Infinispan 5.1.2.Final is that these internal CHMs are now built using a factory, and we’ve included a version of the Java 8 CHM in Infinispan. By default, Infinispan uses the CHM provided by the JDK. But if you wish to force Infinispan to use the backported Java 8 CHM, all you need to do is include the following JVM parameter when you start Infinispan:

-Dinfinispan.unsafe.allow_jdk8_chm=true
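To make the factory idea concrete, here is a minimal sketch of how a flag like this could switch between two map implementations. Only the property name comes from this post; the class ChmFactorySketch, its methods and the supplier-based design are hypothetical and are not Infinispan's actual factory.

```java
import java.util.concurrent.ConcurrentMap;
import java.util.function.Supplier;

// Hypothetical sketch, not Infinispan's real factory class.
final class ChmFactorySketch {
    // Property name as given in the post; everything else is illustrative.
    static final String PROPERTY = "infinispan.unsafe.allow_jdk8_chm";

    private ChmFactorySketch() {
    }

    /** True only when the JVM was started with -Dinfinispan.unsafe.allow_jdk8_chm=true. */
    static boolean backportRequested() {
        return Boolean.getBoolean(PROPERTY);
    }

    /**
     * Builds a map from one of two suppliers: the JDK's own CHM by default,
     * or the backported implementation when the flag is set.
     */
    static <K, V> ConcurrentMap<K, V> newMap(Supplier<ConcurrentMap<K, V>> jdkMap,
                                             Supplier<ConcurrentMap<K, V>> backportedMap) {
        return backportRequested() ? backportedMap.get() : jdkMap.get();
    }
}
```

Because every internal map goes through a single creation point, switching implementations does not require touching the callers.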

We’d love to hear what you have to say about this, in terms of memory footprint, garbage collection and overall performance. Please use the Infinispan user forums to provide your feedback.

Thanks Manik

Tags: event performance community garbage collection concurrency

Tuesday, 30 March 2010

Infinispan eviction, batching updates and LIRS

The DataContainer abstraction represents the heart of Infinispan. It is the container structure where the actual cache data resides. Every put, remove, get and other cache operation eventually ends up in the data container. Therefore, it is of utmost importance that the data container is implemented in a way that does not impede overall system throughput. Also recall that the data container’s memory footprint cannot grow indefinitely, because we would eventually run out of memory; we have to periodically evict certain entries from the data container according to a chosen eviction algorithm.

The LRU eviction algorithm, although simple and easy to understand, underperforms in cases of weak access locality (one-time-access entries are not replaced in a timely manner, entries that will be accessed soonest are unfortunately replaced, and so on). Recently, a new eviction algorithm, LIRS, has gathered a lot of attention because it addresses the weak access locality shortcomings of LRU yet retains LRU’s simplicity.

However, no matter which eviction algorithm is used, if eviction is not implemented in a scalable, low-lock-contention manner, it can seriously degrade overall system performance. In order to do any meaningful selection of entries for eviction, we have to lock the data container until appropriate eviction entries are selected. Having such a lock-protected data container in turn causes high lock contention, offsetting any eviction precision gained by sophisticated eviction algorithms. In order to get superior throughput while retaining high eviction precision, we need both a low-lock-contention data container and a high-precision eviction algorithm implementation, which is a seemingly impossible feat.

Instead of making a trade-off between a high-precision eviction algorithm and low lock contention, there is a third approach: we keep the lock-protected data container but amortize the locking cost by batching updates. The basic idea is to wrap any eviction algorithm in a framework that keeps track of cache accesses per thread (i.e. in a ThreadLocal) in a simple queue. For each cache hit associated with a thread, the access is recorded in the thread’s queue. If the thread’s queue is full, or the number of accesses recorded in the queue reaches a certain predetermined threshold, we acquire a lock and then execute the operations defined by the eviction algorithm, once for all the accesses in the queue. A thread is thus allowed to access many cache items without requesting a lock to run the eviction algorithm and without paying the lock acquisition cost. We also fully exploit non-blocking lock APIs such as tryLock. As you may recall, tryLock attempts to get the lock and, if the lock is currently held by another thread, fails without blocking the calling thread. Although tryLock is cheap, it is not invoked on every cache access but only when the predetermined threshold is reached. When a thread’s queue is completely full, a lock must be explicitly requested. Therefore, using the batching updates approach, we significantly lower the cost of lock contention, streamline access to locked structures and retain the precision of an eviction algorithm such as LIRS. The key insight is that batching the updates to the eviction algorithm doesn’t materially affect the accuracy of the algorithm.
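The batching scheme described above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the code from the original batching proposal or from Infinispan: the class name BatchedAccessRecorder, the threshold and capacity parameters and the policy callback are all made up for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.ReentrantLock;
import java.util.function.Consumer;

// Hypothetical sketch of per-thread batching of cache-hit bookkeeping.
final class BatchedAccessRecorder<K> {
    private final int threshold;              // start trying to flush at this queue size
    private final int capacity;               // at this size the thread must flush
    private final Consumer<List<K>> policy;   // eviction bookkeeping, always run under the lock
    private final ReentrantLock policyLock = new ReentrantLock();
    private final ThreadLocal<List<K>> pending = ThreadLocal.withInitial(ArrayList::new);

    BatchedAccessRecorder(int threshold, int capacity, Consumer<List<K>> policy) {
        this.threshold = threshold;
        this.capacity = capacity;
        this.policy = policy;
    }

    /** Records one cache hit for the calling thread. */
    void recordHit(K key) {
        List<K> queue = pending.get();
        queue.add(key);
        if (queue.size() < threshold) {
            return;                                // cheap path: no lock is touched
        }
        boolean locked = policyLock.tryLock();     // non-blocking attempt
        if (!locked && queue.size() >= capacity) {
            policyLock.lock();                     // queue is full: request the lock explicitly
            locked = true;
        }
        if (locked) {
            try {
                policy.accept(queue);              // replay the whole batch in one go
                queue.clear();
            } finally {
                policyLock.unlock();
            }
        }
    }
}
```

With a threshold of 3, for example, the first two hits on a thread are only appended to its queue; the third hit triggers a tryLock and, if that succeeds, the policy sees all three accesses at once.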

How are these ideas implemented in Infinispan? We introduced the BoundedConcurrentHashMap class, based on Doug Lea’s ConcurrentHashMap. BoundedConcurrentHashMap hashes entries, based on their keys, into lock-protected segments. Instead of recording accessed entries per thread, we record them in a lock-free queue at the segment level. The main reason not to use ThreadLocal is that we could potentially have hundreds of threads hitting the data container, some of them very short-lived and thus possibly never reaching the batching thresholds. When the predetermined thresholds are reached, the eviction algorithm is executed at the segment level. Would running the eviction algorithm at the segment level, rather than across the entire data container, impact overall eviction precision? In our performance tests we have not found any evidence of that.
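As a rough illustration of segment-level batching, the sketch below records hits in a lock-free queue and replays them under the segment lock. It is not the BoundedConcurrentHashMap source: the class BoundedSegmentSketch and its fields are hypothetical, a ConcurrentHashMap stands in for the segment's hash table, and plain LRU is used in place of LIRS to keep the example short.

```java
import java.util.LinkedHashSet;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical sketch of one bounded segment with batched LRU bookkeeping.
final class BoundedSegmentSketch<K, V> {
    private final int maxEntries;
    private final int batchThreshold;
    private final ReentrantLock lock = new ReentrantLock();
    private final ConcurrentHashMap<K, V> data = new ConcurrentHashMap<>();
    private final ConcurrentLinkedQueue<K> accessQueue = new ConcurrentLinkedQueue<>();
    private final AtomicInteger queued = new AtomicInteger();   // approximate queue size
    private final LinkedHashSet<K> recency = new LinkedHashSet<>(); // guarded by lock

    BoundedSegmentSketch(int maxEntries, int batchThreshold) {
        this.maxEntries = maxEntries;
        this.batchThreshold = batchThreshold;
    }

    V get(K key) {
        V value = data.get(key);
        if (value != null) {
            accessQueue.add(key);                  // lock-free recording of the hit
            if (queued.incrementAndGet() >= batchThreshold && lock.tryLock()) {
                try {
                    drain();
                } finally {
                    lock.unlock();
                }
            }
        }
        return value;
    }

    void put(K key, V value) {
        lock.lock();
        try {
            drain();                               // apply pending hits before choosing victims
            data.put(key, value);
            touch(key);
            while (recency.size() > maxEntries) {
                K eldest = recency.iterator().next();
                recency.remove(eldest);
                data.remove(eldest);
            }
        } finally {
            lock.unlock();
        }
    }

    // Caller must hold the lock.
    private void drain() {
        K key;
        while ((key = accessQueue.poll()) != null) {
            queued.decrementAndGet();
            if (recency.contains(key)) {           // ignore keys evicted in the meantime
                touch(key);
            }
        }
    }

    // Caller must hold the lock: moves the key to the most-recently-used end.
    private void touch(K key) {
        recency.remove(key);
        recency.add(key);
    }
}
```

The design choice shown here is that readers never block: a failed tryLock simply leaves the recorded hits in the queue for whichever thread next holds the segment lock.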

Infinispan’s eviction algorithm is specified using the strategy attribute of the eviction XML element. In addition to the existing eviction strategies, starting with release 4.1.ALPHA2 you can now select the LIRS eviction algorithm. LRU remains the default. Also note that, starting with the 4.1.ALPHA2 release, there are two distinct approaches to actually evicting entries from the cache: piggyback, and the default approach using a dedicated EvictionManager thread. The piggyback eviction thread policy, as its name implies, performs eviction by piggybacking on user threads that are hitting the data container. The dedicated EvictionManager thread is unchanged from the previous release and remains the default option. To support these two eviction thread policies, a new attribute, threadPolicy, has been added to the eviction element of the Infinispan configuration schema.

Does the eviction redesign based on batching updates live up to expectations? Ding et al., authors of the original batching proposal, found that their framework increased throughput nearly twofold in comparison with unmodified eviction in PostgreSQL 8.2.3. We do not have any numbers to share yet; however, initial testing of BoundedConcurrentHashMap was indeed promising. One of our partner companies replaced their crucial caching component with BoundedConcurrentHashMap and realized a 54% performance improvement on the Berlin SPARQL benchmark for their flagship product. Stay tuned for more updates.

Cheers,

Vladimir

Tags: eviction concurrency data structures

Monday, 27 July 2009

Increase transactional throughput with deadlock detection

Deadlock detection is a new feature in Infinispan. It is about increasing the number of transactions that can be concurrently processed. Let’s start with the problem first (the deadlock) then discuss some design details and performance.

So, the by-the-book deadlock example is the following:

- Transaction one (T1) performs the following operation sequence: (write key_1, write key_2)

- Transaction two (T2) performs the following operation sequence: (write key_2, write key_1)

Now, if T1 and T2 happen at the same time and both have executed their first operation, then they will wait for each other, virtually forever, to release the locks they own on the keys. In the real world, the waiting period is bounded by a lock acquisition timeout (LAT), which defaults to 10 seconds. This allows the system to overcome such scenarios and respond to the user one way (success) or the other (failure): after a period of LAT, one (or both) of the transactions will roll back, allowing the other to continue working.

Deadlocks are bad for both the system’s throughput and the user experience. System throughput is affected because during the deadlock period (which might extend up to LAT) no other thread will be able to update either key_1 or key_2. Even worse, access to any other keys that were modified by T1 or T2 will be similarly restricted. User experience is affected by the fact that the call(s) will freeze for the entire deadlock period, and there’s also a chance that both T1 and T2 will roll back by timing out.

As a side note, in the previous example, if the code running the transactions can (and does) enforce some sort of ordering on the keys accessed within the transaction, then the deadlock is avoided. E.g. if the application code orders the operations based on the lexicographic ordering of keys, both T1 and T2 execute the following sequence: (write key_1, write key_2), and so no deadlock results. This is a best practice and should be followed whenever possible.

Enough with the theory! The way Infinispan performs deadlock detection is based on an algorithm designed by Jason Greene and Manik Surtani, which is detailed here. The basic idea is to split the LAT into smaller cycles, as follows:

lock(int lockAcquisitionTimeout) {
   // keep trying in short slices until the overall timeout expires
   while (currentTime < startTime + lockAcquisitionTimeout) {
      if (acquire(smallTimeout)) break;
      testForDeadlock(globalTransaction, key);
   }
}

What testForDeadlock(globalTransaction, key) does is check whether there is another transaction that satisfies both conditions:

- it holds a lock on key, and

- it intends to lock a key that is currently held by this transaction.

If such a transaction is found, then this is a deadlock, and one of the running transactions will be interrupted. The decision as to which transaction is interrupted is based on a coin toss, a random number that is associated with each transaction. This ensures that only one transaction rolls back, and the decision is deterministic: nodes and transactions do not need to communicate with each other to determine the outcome.
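The loop and the coin toss can be sketched in plain Java as below. This is only an illustration under assumptions, not Infinispan's lock manager: the class DeadlockAwareLockSketch, the callback that reports a detected deadlock, and the rule that the lower coin toss yields are all hypothetical.

```java
import java.util.concurrent.TimeUnit;
import java.util.concurrent.locks.ReentrantLock;
import java.util.function.BooleanSupplier;

// Hypothetical sketch of lock acquisition split into short slices.
final class DeadlockAwareLockSketch {
    /** Thrown when the calling transaction is the one chosen to roll back. */
    static final class DeadlockDetectedException extends RuntimeException {
        DeadlockDetectedException(String message) {
            super(message);
        }
    }

    private DeadlockAwareLockSketch() {
    }

    /**
     * Both transactions apply the same rule to the same pair of numbers, so they
     * agree on the victim without talking to each other (assuming distinct values).
     * That the lower number yields is an arbitrary choice made for this sketch.
     */
    static boolean mustYield(long myCoinToss, long otherCoinToss) {
        return myCoinToss < otherCoinToss;
    }

    /**
     * Tries to take the lock within timeoutMillis, in slices of sliceMillis.
     * After each failed slice, deadlockedAndMustYield is consulted; if it
     * returns true, this caller gives up immediately instead of waiting on.
     */
    static boolean lock(ReentrantLock lock, long timeoutMillis, long sliceMillis,
                        BooleanSupplier deadlockedAndMustYield) {
        long deadline = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(timeoutMillis);
        try {
            while (true) {
                long remaining = deadline - System.nanoTime();
                if (remaining <= 0) {
                    return false;                  // plain timeout, as without detection
                }
                long slice = Math.min(remaining, TimeUnit.MILLISECONDS.toNanos(sliceMillis));
                if (lock.tryLock(slice, TimeUnit.NANOSECONDS)) {
                    return true;
                }
                if (deadlockedAndMustYield.getAsBoolean()) {
                    throw new DeadlockDetectedException("deadlock detected, rolling back");
                }
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();    // preserve the interrupt for the caller
            return false;
        }
    }
}
```

The point of the slicing is that a deadlocked transaction fails after roughly one slice rather than after the full lock acquisition timeout.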

Deadlock detection in Infinispan comes in two flavors: detecting deadlocks in transactions that span several caches, and detecting deadlocks in transactions running on a single (local) cache.

Let’s see some performance figures as well. A class for benchmarking performance of deadlock detection functionality was created and can be seen here. Test description (from javadoc):

We use a fixed size pool of keys (KEY_POOL_SIZE) on which each transaction operates. A number of threads (THREAD_COUNT) repeatedly starts transactions and tries to acquire locks on a random subset of this pool, by executing put operations on each key. If all locks were successfully acquired then the tx tries to commit: only if it succeeds this tx is counted as successful. The number of elements in this subset is the transaction size (TX_SIZE). The greater transaction size is, the higher chance for deadlock situation to occur. On each thread these transactions are being repeatedly executed (each time on a different, random key set) for a given time interval (BENCHMARK_DURATION). At the end, the number of successful transactions from each thread is cumulated, and this defines throughput (successful tx) per time unit (by default one minute).

Disclaimer: The following figures are for a scenario especially designed to force very high contention. This is not typical, and you shouldn’t expect to see this level of increase in performance for applications with lower contention (which is most likely the case). Please feel free to tune the above benchmark class to fit the contention level of your application; sharing your experience would be very useful!

The following diagram shows the performance degradation resulting from running the deadlock detection code by itself in a scenario where no contention/deadlocks are present: http://2.bp.blogspot.com/_ISQfVF8ALAQ/Sm2h_re8qKI/AAAAAAAABqA/bsNgEyCkcYw/s1600-h/DLD_replicated.JPG

Some clues on when to enable deadlock detection: a high number of transactions rolling back due to org.infinispan.util.concurrent.TimeoutException is an indicator that this functionality might help. A TimeoutException might have other causes as well, but deadlocks will always result in this exception being thrown. Generally, when you have high contention on a set of keys, deadlock detection may help. But the best way is not to guess the performance improvement but to benchmark and monitor it: you can access statistics (e.g. the number of deadlocks detected) through JMX, as they are exposed via the DeadlockDetectingLockManager MBean.

Tags: transactions benchmarks deadlock detection concurrency

Tuesday, 12 May 2009

Implementing a performant, thread-safe ordered data container

To achieve efficient ordering of entries in the DataContainer interface for configurations that support eviction, there was a need for a linked HashMap implementation that was thread-safe and performant. Below, I specifically discuss the implementations of the FIFODataContainer and LRUDataContainer in Infinispan 4.0.x. Wherever this document references FIFODataContainer, this also applies to LRUDataContainer - which extends FIFODataContainer. The only difference is that LRUDataContainer updates links whenever an entry is visited as well as added.

After analysing and considering a few different approaches, the one I settled on is a subset of the algorithms described by H. Sundell and P. Tsigas in their 2008 paper titled Lock-Free Deques and Doubly Linked Lists, combined with the approach used by Sun’s JDK6 for reference marking in ConcurrentSkipListMap's implementation.

Reference marking? What’s that?

Compare-and-swap (CAS) is a common technique today for atomically updating a variable or a reference without the use of a mutual exclusion mechanism like a lock. But this only works when you modify a single memory location at a time, be it a reference or a primitive. Sometimes you need to atomically update two separate bits of information in a single go, such as a reference, as well as some information about that reference. Hence reference marking. In C, this is sometimes done by making use of the assumption that an entire word in memory is not needed to store a pointer to another memory location, and some bits of this word can be used to store additional flags via bitmasking. This allows for atomic updates of both the reference and this extra information using a single CAS operation.
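For readers who have not used CAS directly, here is a minimal sketch of the usual read, compute, compareAndSet retry loop on a single reference, using a simple lock-free stack. The class CasStackSketch is purely illustrative and is not part of the data container.

```java
import java.util.concurrent.atomic.AtomicReference;

// Illustrative lock-free stack: every update is one CAS on a single reference.
final class CasStackSketch<E> {
    private static final class Node<E> {
        final E value;
        final Node<E> next;

        Node(E value, Node<E> next) {
            this.value = value;
            this.next = next;
        }
    }

    private final AtomicReference<Node<E>> head = new AtomicReference<>();

    void push(E value) {
        Node<E> current;
        Node<E> updated;
        do {
            current = head.get();                  // read the current state
            updated = new Node<>(value, current);  // compute the new state
        } while (!head.compareAndSet(current, updated)); // retry if another thread won
    }

    /** Returns the most recently pushed value, or null if the stack is empty. */
    E pop() {
        Node<E> current;
        do {
            current = head.get();
            if (current == null) {
                return null;
            }
        } while (!head.compareAndSet(current, current.next));
        return current.value;
    }
}
```

Note that each operation changes exactly one memory location, which is the limitation that reference marking works around.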

This is possible in Java too using AtomicMarkableReference, but is usually considered overly complex, slow and space-inefficient. Instead, what we do is borrow a technique from ConcurrentSkipListMap and use an intermediate, delegating entry. While this adds a little more complexity in traversal (you need to be aware of the presence of these marker entries when traversing the linked list), this performs better than an AtomicMarkableReference.

In this specific implementation, the 'extra information' stored in a reference is the fact that the entry holding the reference is in the process of being deleted. It is a common problem with lock-free linked lists that, when insert and delete operations run concurrently, the newly inserted entry gets deleted as well, since it attaches itself to the entry being concurrently deleted. When the entry to be removed marks its references, however, this makes other threads aware of the fact and causes their CAS operations on the reference to fail and retry.
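A stripped-down sketch of the marker-entry idea, on a singly linked list, might look like the following. It is loosely modelled on the technique described above and is not the FIFODataContainer code; the class MarkerNodeSketch and its method names are hypothetical.

```java
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical sketch of deletion via an intermediate marker entry.
final class MarkerNodeSketch {
    static final class Node {
        final String value;                        // null for marker entries
        final boolean marker;
        final AtomicReference<Node> next;

        Node(String value, Node next) {
            this(value, next, false);
        }

        private Node(String value, Node next, boolean marker) {
            this.value = value;
            this.marker = marker;
            this.next = new AtomicReference<>(next);
        }

        static Node markerFor(Node successor) {
            return new Node(null, successor, true);
        }
    }

    private MarkerNodeSketch() {
    }

    /** Links a new entry right after pred; fails if pred is being deleted or a race was lost. */
    static boolean insertAfter(Node pred, String value) {
        Node succ = pred.next.get();
        if (succ != null && succ.marker) {
            return false;                          // pred is on its way out
        }
        return pred.next.compareAndSet(succ, new Node(value, succ));
    }

    /** Deletion step 1: splice a marker after victim so stale CASes on victim.next fail. */
    static boolean mark(Node victim) {
        Node succ = victim.next.get();
        if (succ != null && succ.marker) {
            return false;                          // someone else already marked it
        }
        return victim.next.compareAndSet(succ, Node.markerFor(succ));
    }

    /** Deletion step 2: swing pred.next past both victim and its marker. */
    static boolean unlink(Node pred, Node victim) {
        Node m = victim.next.get();
        if (m == null || !m.marker) {
            return false;                          // victim has not been marked yet
        }
        return pred.next.compareAndSet(victim, m.next.get());
    }
}
```

Once the marker is in place, a thread that read the victim's old successor and tries to CAS a new entry after it will fail, because the victim's next reference no longer holds the value that thread expects.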

Performance

Aside from maintaining order of entries and being thread-safe, performance was one of the other goals. The target is to achieve constant-time performance - O(1) - for all operations on DataContainer.

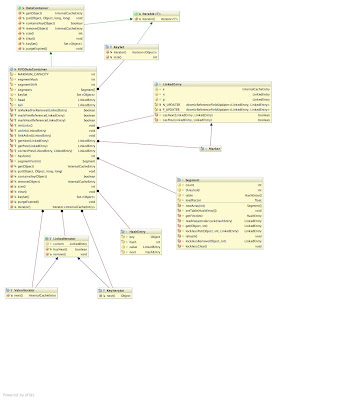

The class diagram (full-size version: http://3.bp.blogspot.com/_ca0W9t-Ryos/SgGwA2iw_vI/AAAAAAAAAKA/TpVMWo2Rq9U/s1600-h/FIFODataContainer.jpeg) depicts the FIFODataContainer class. At its heart the FIFODataContainer mimics a JDK ConcurrentHashMap (CHM), making use of hashtable-like lockable segments. Unlike the segments in CHM, however, these segments are simpler as they support a much smaller set of operations.

Retrieving data from the container

The use of segments allows for constant-time, thread-safe get() and containsKey() operations. Iterators obtained from the DataContainer (which implements Iterable, and is hence usable in for-each loops) and from keySet() are immutable, thread-safe and efficient; they traverse the linked list using the getNext() and getPrev() helpers. See below for details. Each step of the traversal is efficient and constant-time.

Updating the container

When removing an entry, remove() locks the segment in question, removes the entry, and unlinks the entry. Both operations are thread-safe and constant-time. Locking the segment and removing the entry is pretty straightforward. Unlinking involves marking references, followed by an attempt at CAS’ing the next and previous references to bypass the removed entry. Order here is important: updates to the next reference need to happen first. Read on for more details as to why.

When performing a put(), the entry is created, segment locked and entry inserted into the segment. The entry is then inserted at the tail of the linked list. Again, both operations are thread-safe and constant-time. Linking at the tail involves careful CAS’ing of references on the new entry, the tail dummy entry and the former last entry in the list.

Maintaining a lock-free, concurrent doubly linked list

It is important to note that the entries in this implementation are doubly linked. This is critical since, unlike the JDK’s ConcurrentSkipListMap, we use a hashtable to look up entries to be removed, to achieve constant-time performance in lookup. Locating the parent entry to update a reference needs to be constant-time as well, hence the need for a previous reference. Doubly linked lists make things much trickier though, as there are two references to update atomically (yes, that sounds wrong!).

Crucially, what we do not care about - and do not support - is reverse-order traversal. This means that we only really care about maintaining accuracy in the forward direction of the linked list, and treat the previous reference as an approximation to an entry somewhere behind the current entry. Previous references can then be corrected - using the correctPrev() helper method described below - to locate the precise entry behind the current entry. By placing greater importance on the forward direction of the list, this allows us to reliably CAS the forward reference even if the previous reference CAS fails. It is hence critical that whenever any references are updated, the next reference is CAS’d first, and only on this success the previous reference CAS is attempted. The same order applies with marking references. Also, it is important that any process that touches an entry that observes that the next pointer is marked but the previous pointer is not, attempts to mark the previous pointer before attempting any further steps.

The specific functions we need to expose, to support DataContainer operations, are:

void linkAtEnd(LinkedEntry entry);

void unlink(LinkedEntry entry);

LinkedEntry correctPrev(LinkedEntry suggestedPrev, LinkedEntry current);

LinkedEntry getNext(LinkedEntry current);

LinkedEntry getPrev(LinkedEntry current);

These are exposed as protected final methods, usable by FIFODataContainer and its subclasses. The implementations themselves use a combination of CAS’s on a LinkedEntry’s next and previous pointers, marking references, and helper correction of previous pointers when using getNext() and getPrev() to traverse the list. Note that it is important that only the last two methods are used when traversing, rather than directly accessing a LinkedEntry’s references, since markers need to be stepped over and links corrected.
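To illustrate the "next reference first, previous reference as an approximation" rule, here is a heavily simplified, insertion-only sketch. It is not the algorithm from the paper and not the FIFODataContainer implementation: deletion and reference marking are left out entirely, and the class ForwardFirstListSketch with its Entry type is hypothetical. Only the method names linkAtEnd and correctPrev echo the list above.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicReference;

// Hypothetical, insertion-only sketch of forward-first linking at the tail.
final class ForwardFirstListSketch<T> {
    static final class Entry<T> {
        final T value;
        final AtomicReference<Entry<T>> next = new AtomicReference<>();
        final AtomicReference<Entry<T>> prev = new AtomicReference<>();

        Entry(T value) {
            this.value = value;
        }
    }

    private final Entry<T> head = new Entry<>(null);   // dummy entry
    private final Entry<T> tail = new Entry<>(null);   // dummy entry

    ForwardFirstListSketch() {
        head.next.set(tail);
        tail.prev.set(head);
    }

    /** Walks forward from a possibly stale hint to the true predecessor of current. */
    Entry<T> correctPrev(Entry<T> suggestedPrev, Entry<T> current) {
        Entry<T> p = suggestedPrev;
        for (Entry<T> n = p.next.get(); n != current; n = p.next.get()) {
            p = n;
        }
        return p;
    }

    void linkAtEnd(T value) {
        Entry<T> entry = new Entry<>(value);
        entry.next.set(tail);
        while (true) {
            Entry<T> hint = tail.prev.get();           // may lag behind the real last entry
            Entry<T> last = correctPrev(hint, tail);
            entry.prev.set(last);
            if (last.next.compareAndSet(tail, entry)) { // forward link decides success
                // Best effort only: if this fails, tail.prev stays a stale hint
                // that a later correctPrev() call repairs.
                tail.prev.compareAndSet(hint, entry);
                return;
            }
        }
    }

    /** Forward traversal, which is the only direction kept exact. */
    List<T> snapshot() {
        List<T> out = new ArrayList<>();
        for (Entry<T> e = head.next.get(); e != tail; e = e.next.get()) {
            out.add(e.value);
        }
        return out;
    }
}
```

In this sketch an insert counts as done as soon as the forward CAS succeeds; the previous pointer on the tail dummy is only ever a starting point for the forward walk in correctPrev().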

Please refer to Lock-Free Deques and Doubly Linked Lists for details of the algorithm steps.

Tags: algorithms eviction concurrency data structures