Friday, 02 November 2018

Infinispan triple connector release!

Infinispan Spark, Infinispan Hadoop and Infinispan Kafka each have a fresh new release!

The native Apache Spark connector now supports Infinispan 9.4.x and Spark 2.3.2, and it exposes Infinispan’s new transcoding capabilities, enabling the InfinispanRDD and InfinispanDStream to operate with multiple data formats. For more details see the documentation.

Infinispan Hadoop 0.3

The connector that allows accessing Infinispan through the standard Input/OutputFormat interfaces now offers compatibility with Infinispan 9.4.x and has been certified to run with the Hadoop 3.1.1 runtime. For more details about this connector, see the user manual. Also make sure to check the Docker-based demos: Infinispan + YARN and Infinispan + Apache Flink.

Last but not least, the Infinispan Kafka connector was upgraded to work with the latest Kafka (2.0.x) and Infinispan releases (9.4.x). Many thanks to Andrea Cosentino for contributing this integration.

Tags: release kafka spark hadoop

Tuesday, 24 July 2018

Infinispan Spark connector 0.8 released

The Infinispan Spark connector version 0.8 has been released and is available in Maven Central and Spark Packages.

This is a maintenance-only release that brings compatibility with Spark 2.3 and Infinispan 9.3.

For more information about the connector, please consult the documentation and also try the Docker-based sample.

For feedback and general help, please use the Infinispan chat.

Tags: release spark server

Thursday, 29 March 2018

Infinispan Spark connector 0.7 released!

A new version of the connector that integrates Infinispan and Apache Spark has just been released!

This release brings compatibility with Infinispan 9.2.x and Spark 2.3.0.

This release also includes a new feature that allows creating and deleting caches on demand, passing custom configurations when required. For more details, please consult the documentation.

To quickly try the connector, make sure to check the Twitter demo, and for any issues or suggestions, please report them on our JIRA.

Cheers!

Tags: release spark

Tuesday, 28 November 2017

Back from Madrid JUG and Codemotion Madrid!!

We’ve just come back from our trip to Spain, and first of all, we’d like to thank everyone who attended our talks and workshops at Madrid Java User Group and Codemotion Madrid, as well as the organisers and sponsors who made it possible!

We had a very hectic schedule, which started with a Red Hat double bill for Madrid JUG. Thomas Segismont started the evening with a Vert.x talk, and Galder followed up with a talk on how to do data analytics using Infinispan-based data grids.

In the data analytics talk, Galder focused on how to use distributed Java Streams to do analytics, and also showed how to use the Infinispan Spark connector when Java Streams are not enough. The distributed Java Streams demo he ran can be found here. The most relevant files of that demo are:

- Live coding instructions: follow them to repeat the steps he took in the talk.
- Server task solution class using distributed Java Streams.

Galder also demonstrated how to use the Infinispan Spark connector by showing the Twitter example. The slides from this talk (in Spanish) can be found here:

The next day, Friday, Galder gave a talk at Codemotion Madrid on working with streaming data using Infinispan, Vert.x and OpenShift. For the first time, he ran it all on top of Google Cloud, so he could finally free up his laptop from running the demos and take advantage of the power of a cloud provider!

The demo can be found here where you can also find instructions on how to run it on top of Google Cloud. If you want to follow the same steps he followed during the talk, live coding instructions are here. The slides from this talk (in Spanish) can be found here:

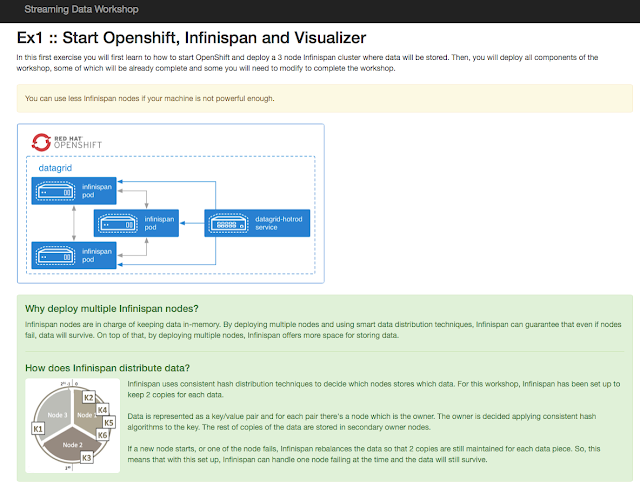

Finally, on Saturday we delivered the Streaming Data workshop at Codemotion Madrid. Once again, basing the workshop on VirtualBox caused us some issues, but people managed to get through it. We have some plans for next year to avoid the need for VirtualBox, so stay tuned!

http://3.bp.blogspot.com/-vhDHZjc2CX0/Wh0t4gzxt3I/AAAAAAAAFDY/J_1l7ze26-gYTcp3XSu_S7AeZs2zFbM4QCK4BGAYYCw/s1600/DPfG2yYX0AAH682.jpeg

http://3.bp.blogspot.com/-hKcqGpbsNno/Wh0t4QnaYaI/AAAAAAAAFDI/k8OvIG8-VpMvZPhHRKVjyADMBdASgnBEQCK4BGAYYCw/s1600/DPfG2yWXUAEOdoY.jpg

We have added more detailed instructions on how to run the workshop in your office or at home, so if you’re interested in going through it, make sure you check these steps and let us know how they work for you:

This trip to Madrid wraps up a very intense year of promoting Infinispan! Next month we’ll do a recap of the talks, videos, etc. so that you can catch up on any you missed :)

Cheers, Katia & Galder

Tags: conference openshift spark google

Tuesday, 21 November 2017

Infinispan Spark connector 0.6 is out!

This is a small release to align with Infinispan 9.2.x and Spark 2.2, and it also has an improvement to the Java API related to filterByQuery.

For a full list of changes, please refer to the release notes. Make sure to also check the improved documentation and the Twitter demo.

Enjoy!

Tags: spark release

Monday, 03 April 2017

Infinispan Spark connector 0.5 released!

The Infinispan Spark connector offers seamless integration between Apache Spark and Infinispan Servers. Apart from supporting Infinispan 9.0.0.Final and Spark 2.1.0, this release brings many usability improvements, and support for another major Spark API.

Configuration changes

The connector no longer uses a java.util.Properties object to hold its configuration. That is now the job of org.infinispan.spark.config.ConnectorConfiguration, which is type-safe and friendly to both Java and Scala:

Filtering by query String

The previous version introduced the possibility of filtering an InfinispanRDD by providing a Query instance. That required going through the QueryDSL, which in turn required a properly configured remote cache.

It’s now possible to simply use an Ickle query string:

Improved support for Protocol Buffers

Support for reading from a cache with protobuf encoding was present in the previous connector version, but now it’s also possible to write using protobuf encoding and to have protobuf schema registration handled automatically.

To see this in practice, consider an arbitrary non-Infinispan based RDD<Integer, Hotel> where Hotel is given by:

In order to write this RDD to Infinispan it’s just a matter of doing:

Internally, the connector will trigger the auto-generation of the .proto file and message marshallers for the configured entities, and will register the schemas on the server before writing.

Splitter is now pluggable

The Splitter is the interface responsible for creating one or more partitions from an Infinispan cache, with each partition associated with one or more segments. The Infinispan Spark connector can now be created with a custom implementation of Splitter, allowing different data partitioning strategies during job processing.
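As a rough illustration of what a partitioning strategy decides, the plain-Java sketch below groups a cache's segment IDs into partitions round-robin. It is not the connector's Splitter interface, whose exact signature is not shown in this post. The class name SegmentSplitter, the round-robin policy and the segment count used in main are all assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical partitioning strategy: assigns segment IDs 0..numSegments-1 to
 * partitions in round-robin order. NOT the connector's Splitter API; it only
 * illustrates the kind of decision a Splitter implementation makes.
 */
class SegmentSplitter {

    static List<List<Integer>> split(int numSegments, int numPartitions) {
        if (numSegments <= 0 || numPartitions <= 0) {
            throw new IllegalArgumentException("segments and partitions must be positive");
        }
        // Never create empty partitions: at most one partition per segment.
        int partitions = Math.min(numSegments, numPartitions);
        List<List<Integer>> result = new ArrayList<>(partitions);
        for (int p = 0; p < partitions; p++) {
            result.add(new ArrayList<>());
        }
        // Segment s goes to partition (s mod partitions).
        for (int s = 0; s < numSegments; s++) {
            result.get(s % partitions).add(s);
        }
        return result;
    }

    public static void main(String[] args) {
        // 256 segments is an assumed value, spread over 4 partitions.
        List<List<Integer>> parts = split(256, 4);
        for (int i = 0; i < parts.size(); i++) {
            System.out.println("partition " + i + ": " + parts.get(i).size() + " segments");
        }
    }
}
```

A strategy tuned for data locality would more likely group segments by the server that owns them. Round-robin is simply the easiest policy to show.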

Goodbye Scala 2.10

Scala 2.10 support was removed, and Scala 2.11 is currently the only supported version. Scala 2.12 support will follow once Spark supports it (see https://issues.apache.org/jira/browse/SPARK-14220).

Streams with initial state

It is possible to configure the InfinispanInputDStream with an extra boolean parameter to receive the current cache state as events.

Dataset support

The Infinispan Spark connector now ships with support for Spark’s Dataset API, with support for pushing down predicates, similar to rdd.filterByQuery. The entry point of this API is the Spark session:

To create an Infinispan-based DataFrame, use the "infinispan" data source along with the usual connector configuration:

From here it’s possible to use the untyped API, for example:

or execute SQL queries by setting a view:

In both cases above, the predicates and the required columns will be converted to an Infinispan Ickle filter, thus filtering data at the source rather than at Spark processing phase.
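To make the idea of pushdown concrete, here is a hypothetical sketch of how required columns and simple comparison predicates could be turned into an Ickle query string. The IckleTranslator and Predicate names, the entity name example.Hotel and the exact query text are all assumptions. The connector's real translation may produce different text.

```java
import java.util.List;
import java.util.stream.Collectors;

/**
 * Hypothetical illustration of predicate pushdown: builds an Ickle query string
 * from the required columns and simple "column op value" predicates.
 */
class IckleTranslator {

    /** A simple comparison predicate such as stars > 3 (hypothetical type). */
    static final class Predicate {
        final String column;
        final String op;
        final Object value;

        Predicate(String column, String op, Object value) {
            this.column = column;
            this.op = op;
            this.value = value;
        }
    }

    static String toIckle(String entity, List<String> columns, List<Predicate> predicates) {
        StringBuilder q = new StringBuilder();
        // Only project the columns Spark actually needs.
        if (!columns.isEmpty()) {
            q.append("SELECT ").append(String.join(", ", columns)).append(' ');
        }
        q.append("FROM ").append(entity);
        // Combine all predicates with AND so filtering happens at the source.
        if (!predicates.isEmpty()) {
            String where = predicates.stream()
                    .map(p -> p.column + " " + p.op + " " + literal(p.value))
                    .collect(Collectors.joining(" AND "));
            q.append(" WHERE ").append(where);
        }
        return q.toString();
    }

    // Strings are single-quoted; other values are emitted as-is.
    // No escaping is done, so this sketch is not safe for untrusted input.
    private static String literal(Object v) {
        return (v instanceof String) ? "'" + v + "'" : String.valueOf(v);
    }
}
```

For instance, requesting the columns name and stars with the predicates stars > 3 and city = 'London' produces SELECT name, stars FROM example.Hotel WHERE stars > 3 AND city = 'London'.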

For the full list of changes, see the release notes. For more information about the connector, the official documentation is the place to go. Also check the Twitter data processing sample, and use the project JIRA to report bugs or request new features.

Tags: spark server

Monday, 07 March 2016

Infinispan related presentations on DevConf.cz 2016

DevConf.cz (Developer Conference) is a free annual conference for all Linux and JBoss Community Developers, Admins and Linux users organized by Red Hat Czech Republic in cooperation with the Fedora and JBoss communities. See more details on DevConf’s homepage.

Since it’s a JBoss-related conference, Infinispan couldn’t be missing! We had two Infinispan-related presentations. The first, presented by Jiří Holuša, focused on new features in Infinispan 8 and also includes a very gentle introduction to Infinispan in general.

The second one, named "From Big Data towards Fast Data", was given by Vojtěch Juránek and covered the latest trends in the Big Data world. The presentation starts with an introduction to Big Data, discusses its problems and introduces the term Fast Data. It then presents several approaches to handling the Fast Data phenomenon, ending with the new Infinispan Spark connector as one possible solution.

Please see the links below.

- Presentation "Infinispan 8 - keeping up with the latest trends"
  Video: https://www.youtube.com/watch?v=r0__NEgldzI
  Slides: bit.ly/1oxAuCM
- Presentation "From Big Data towards Fast Data"
  Video: https://www.youtube.com/watch?v=TVXeXM2g7So
  Slides: bit.ly/1PrQ0ZK

Cheers, Jiří

Tags: conference presentations spark infinispan 8

Monday, 01 February 2016

'Infinispan 8 Tour' video available

The recording of the talk presented last Wednesday in London is available! Thanks to everyone who joined and to the London JBUG organizers for the awesome new venue!

Cheers, Gustavo

Tags: functional presentations spark hadoop streams video

Wednesday, 09 December 2015

Infinispan Spark connector 0.2 released!

The connector allows the Infinispan Server to become a data source for Apache Spark, for both batch jobs and stream processing, including reading and writing.

In this release, the highlight is the addition of two new operators to the RDD that support filtering using native capabilities of Infinispan. The first one is filterByQuery:

The second operator was introduced to replace the previous configuration based filter factory name, and was extended to support arbitrary parameters:

The connector has also been updated to be compatible with Spark 1.5.2 and Infinispan 8.1.0.Final.

For more details, including the full list of changes and download info, please visit the Connectors Download section. The project’s GitHub repository contains up-to-date info on how to get started with the connector. Also make sure to try the included Docker-based demo. To report any issue or to request new features, use the new dedicated issue tracker. We’d love to get your feedback!

Tags: spark server

Monday, 17 August 2015

Infinispan Spark connector 0.1 released!

Dear users,

The Infinispan connector for Apache Spark has just been made available as a Spark Package!

What is it?

The Infinispan Spark connector provides tight integration with Apache Spark. It allows Spark jobs to be run against data stored in the Infinispan Server by exposing any cache as an RDD, and it can write data from any key/value RDD to a cache. It’s also possible to create a DStream backed by cache events and to save any key/value DStream to a cache.

The minimum version required is Infinispan 8.0.0.Beta3.

Giving it a spin with Docker

A handy Docker image that contains an Infinispan cluster co-located with an Apache Spark standalone cluster is the fastest way to try the connector. Start by launching the container that hosts the Spark Master:

And then run as many worker nodes as you want:

Using the shell

The Apache Spark shell is a convenient way to quickly run jobs interactively. Since Spark (and thus the shell) is already installed in the Docker containers, let’s attach to the master:

Once inside, a Spark shell can be launched by:

That’s all that’s needed. The shell grabs the Infinispan connector and its dependencies from spark-packages.org and puts them on the classpath.

Generating data and writing to Infinispan

Let’s obtain a list of words from the Linux dictionary, and generate 1k random 4-word phrases. Paste the commands in the shell:

From the phrases, we’ll create a key-value RDD of (Long, String) pairs:
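The shell snippets for these two steps are not reproduced here. As a plain-Java stand-in, assume the dictionary words have already been loaded into a List<String>. On many Linux systems the word list lives at /usr/share/dict/words, but that path is an assumption. The sketch below builds 1,000 random 4-word phrases and keys each one by a Long index, mirroring the (Long, String) pairs. PhraseGenerator and its methods are hypothetical names, and the fixed seed only makes runs reproducible.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Random;

class PhraseGenerator {

    /** Builds `count` phrases of `wordsPerPhrase` words drawn uniformly at random from `dictionary`. */
    static List<String> randomPhrases(List<String> dictionary, int count, int wordsPerPhrase, long seed) {
        Random random = new Random(seed);
        List<String> phrases = new ArrayList<>(count);
        for (int i = 0; i < count; i++) {
            List<String> words = new ArrayList<>(wordsPerPhrase);
            for (int w = 0; w < wordsPerPhrase; w++) {
                words.add(dictionary.get(random.nextInt(dictionary.size())));
            }
            phrases.add(String.join(" ", words));
        }
        return phrases;
    }

    /** Keys each phrase by its position, giving (Long, String) pairs like the key-value RDD. */
    static Map<Long, String> keyByIndex(List<String> phrases) {
        Map<Long, String> pairs = new LinkedHashMap<>();
        for (int i = 0; i < phrases.size(); i++) {
            pairs.put((long) i, phrases.get(i));
        }
        return pairs;
    }

    public static void main(String[] args) {
        // A tiny stand-in dictionary; the real one would be read from a word list file.
        List<String> dictionary = List.of("apple", "banana", "cherry", "date", "elderberry");
        Map<Long, String> pairs = keyByIndex(randomPhrases(dictionary, 1000, 4, 42L));
        System.out.println(pairs.size() + " pairs, first: " + pairs.get(0L));
    }
}
```

In Spark itself, pairing elements with a Long index is commonly done with RDD.zipWithIndex. That method yields (element, index) pairs, which can then be swapped into (index, element).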

To save to Infinispan:

Obtaining facts about data

To explore the data in the cache, the first step is to create an Infinispan RDD:

As an example job, let’s calculate a histogram showing the distribution of word lengths in the phrases. This is simply a sequence of transformations expressed by:
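The original Scala pipeline is not reproduced here. The hypothetical plain-Java sketch below performs the same computation over an in-memory collection of phrases instead of the Infinispan RDD. It splits each phrase into words, groups the words by length and counts each group. The printed lines mimic the format of the histogram output shown in this post, and the class name WordLengthHistogram is our own.

```java
import java.util.Arrays;
import java.util.Collection;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

class WordLengthHistogram {

    /** Counts how many words of each length appear across all phrases, sorted by length. */
    static Map<Integer, Long> histogram(Collection<String> phrases) {
        return phrases.stream()
                .flatMap(phrase -> Arrays.stream(phrase.split("\\s+"))) // phrases -> words
                .filter(word -> !word.isEmpty())                        // drop empty tokens from leading spaces
                .collect(Collectors.groupingBy(String::length, TreeMap::new, Collectors.counting()));
    }

    public static void main(String[] args) {
        histogram(List.of("to be or not", "that is the question"))
                .forEach((len, n) -> System.out.println(len + " chars words: " + n + " occurrences"));
    }
}
```

On an RDD, the equivalent chain would typically use flatMap to split the phrases into words, map each word to (length, 1) and reduceByKey to sum the counts.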

This pipeline yields:

2 chars words: 10 occurrences
3 chars words: 37 occurrences
4 chars words: 133 occurrences
5 chars words: 219 occurrences
6 chars words: 373 occurrences
7 chars words: 428 occurrences
8 chars words: 510 occurrences
9 chars words: 508 occurrences
10 chars words: 471 occurrences
11 chars words: 380 occurrences
12 chars words: 309 occurrences
13 chars words: 238 occurrences

…

Now let’s find similar words using the Levenshtein distance algorithm. For that, we need to define a function that calculates the edit distance between two strings. As usual, paste it in the shell:
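The original shell code is not reproduced here, so below is a plain-Java sketch of such a function. It uses the classic dynamic-programming formulation and keeps only two rows of the distance table. The class name Levenshtein is our own choice.

```java
class Levenshtein {

    /** Classic dynamic-programming edit distance: insertions, deletions and substitutions each cost 1. */
    static int distance(String a, String b) {
        int[] prev = new int[b.length() + 1];
        int[] curr = new int[b.length() + 1];
        for (int j = 0; j <= b.length(); j++) {
            prev[j] = j; // distance from "" to the first j chars of b
        }
        for (int i = 1; i <= a.length(); i++) {
            curr[0] = i; // distance from the first i chars of a to ""
            for (int j = 1; j <= b.length(); j++) {
                int cost = a.charAt(i - 1) == b.charAt(j - 1) ? 0 : 1;
                curr[j] = Math.min(Math.min(curr[j - 1] + 1,   // insertion
                                            prev[j] + 1),      // deletion
                                   prev[j - 1] + cost);        // substitution or match
            }
            // Swap rows so that prev always holds the last completed row.
            int[] tmp = prev;
            prev = curr;
            curr = tmp;
        }
        return prev[b.length()];
    }
}
```

For example, distance("kitten", "sitting") returns 3.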

With the Levenshtein distance implementation in place, we need another function that, given a word, finds similar words in the cache within the provided maximum edit distance:
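A hypothetical plain-Java version of that search is sketched below. It works on an in-memory collection that stands in for the words read from the cache. It takes the distance function as a parameter, so any edit-distance implementation (such as Levenshtein) can be plugged in. As a choice of this sketch, the query word itself is left out of the results.

```java
import java.util.Collection;
import java.util.List;
import java.util.function.ToIntBiFunction;
import java.util.stream.Collectors;

class SimilarWords {

    /**
     * Returns the distinct candidates whose distance to `word` is at most `maxDistance`,
     * sorted alphabetically. The query word itself is excluded (an assumption of this sketch).
     */
    static List<String> findSimilar(String word, int maxDistance, Collection<String> candidates,
                                    ToIntBiFunction<String, String> distance) {
        return candidates.stream()
                .filter(candidate -> !candidate.equals(word))
                .filter(candidate -> distance.applyAsInt(word, candidate) <= maxDistance)
                .distinct()
                .sorted()
                .collect(Collectors.toList());
    }
}
```

Against the cache, the candidate words would presumably come from the values of the Infinispan RDD, and the filtering would run on the Spark executors rather than locally.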

Sample usage:

Where to go from here

And that concludes this first post on Infinispan-Spark integration. Be sure to check the Twitter demo for non-shell usages of the connector, including the Java and Scala APIs.

And it goes without saying, your feedback is much appreciated! :)

Tags: release spark