Thursday, 04 June 2020

Secure server by default

The Infinispan server we introduced in 10.0 exposes a single port through which both Hot Rod and HTTP clients can connect.

While Infinispan has had very extensive security support since 7.0, the out-of-the-box default configuration did not enable authentication.

The default server configuration in Infinispan 11.0, by contrast, requires authentication. We have made several improvements to how authentication is configured, and to the tooling we provide, to make the experience as smooth as possible.

Automatic authentication mechanism selection

Previously, when enabling authentication, you had to explicitly define which mechanisms had to be enabled per-protocol, with all of the peculiarities specific to each one (i.e. SASL for Hot Rod, HTTP for REST). Here is an example configuration with Infinispan 10.1 that enables DIGEST authentication:

    <endpoints socket-binding="default" security-realm="default">
      <hotrod-connector name="hotrod">
        <authentication>
          <sasl mechanisms="DIGEST-MD5" server-name="infinispan"/>
        </authentication>
      </hotrod-connector>
      <rest-connector name="rest">
        <authentication mechanisms="DIGEST"/>
      </rest-connector>
    </endpoints>

In Infinispan 11.0, the mechanisms are automatically selected based on the capabilities of the security realm. Using the following configuration:

    <endpoints socket-binding="default" security-realm="default">
      <hotrod-connector name="hotrod" />
      <rest-connector name="rest"/>
    </endpoints>

together with a properties security realm, will enable DIGEST for HTTP and SCRAM-*, DIGEST-* and CRAM-MD5 for Hot Rod. BASIC/PLAIN will only be implicitly enabled when the security realm has a TLS/SSL identity.

The following tables summarize the mapping between realm type and implicitly enabled mechanisms.

| Security Realm | SASL Authentication Mechanism |
|---|---|
| Property Realms and LDAP Realms | SCRAM-*, DIGEST-*, CRAM-MD5 |
| Token Realms | OAUTHBEARER |
| Trust Realms | EXTERNAL |
| Kerberos Identities | GSSAPI, GS2-KRB5 |
| SSL/TLS Identities | PLAIN |

| Security Realm | HTTP Authentication Mechanism |
|---|---|
| Property Realms and LDAP Realms | DIGEST |
| Token Realms | BEARER_TOKEN |
| Trust Realms | CLIENT_CERT |
| Kerberos Identities | SPNEGO |
| SSL/TLS Identities | BASIC |
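The two tables can be read as a lookup from what the security realm offers to what the endpoint turns on. The Java sketch below encodes them that way. The class, enum and method names are hypothetical and exist only for illustration; this is not how Infinispan itself implements the selection.

```java
import java.util.EnumSet;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical helper that mirrors the two tables above; not Infinispan code.
final class ImplicitMechanisms {

    // One constant per table row (names are assumptions made for this sketch).
    enum RealmFeature { PROPERTIES_OR_LDAP, TOKEN, TRUST, KERBEROS_IDENTITY, TLS_IDENTITY }

    private static final Map<RealmFeature, List<String>> SASL = Map.of(
        RealmFeature.PROPERTIES_OR_LDAP, List.of("SCRAM-*", "DIGEST-*", "CRAM-MD5"),
        RealmFeature.TOKEN, List.of("OAUTHBEARER"),
        RealmFeature.TRUST, List.of("EXTERNAL"),
        RealmFeature.KERBEROS_IDENTITY, List.of("GSSAPI", "GS2-KRB5"),
        RealmFeature.TLS_IDENTITY, List.of("PLAIN"));

    private static final Map<RealmFeature, List<String>> HTTP = Map.of(
        RealmFeature.PROPERTIES_OR_LDAP, List.of("DIGEST"),
        RealmFeature.TOKEN, List.of("BEARER_TOKEN"),
        RealmFeature.TRUST, List.of("CLIENT_CERT"),
        RealmFeature.KERBEROS_IDENTITY, List.of("SPNEGO"),
        RealmFeature.TLS_IDENTITY, List.of("BASIC"));

    // Mechanisms implicitly enabled for Hot Rod (SASL) given the realm's features.
    static Set<String> sasl(Set<RealmFeature> features) {
        return collect(SASL, features);
    }

    // Mechanisms implicitly enabled for REST (HTTP) given the realm's features.
    static Set<String> http(Set<RealmFeature> features) {
        return collect(HTTP, features);
    }

    private static Set<String> collect(Map<RealmFeature, List<String>> table,
                                       Set<RealmFeature> features) {
        Set<String> result = new LinkedHashSet<>();
        // Walk the enum in declaration order so the result order is stable.
        for (RealmFeature feature : RealmFeature.values()) {
            if (features.contains(feature)) {
                result.addAll(table.get(feature));
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // A properties realm that also has a TLS identity.
        Set<RealmFeature> realm =
            EnumSet.of(RealmFeature.PROPERTIES_OR_LDAP, RealmFeature.TLS_IDENTITY);
        System.out.println("SASL: " + sasl(realm));
        System.out.println("HTTP: " + http(realm));
    }
}
```

In this sketch, PLAIN and BASIC appear only when the TLS identity feature is present, which matches the rule stated earlier for the properties realm.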

Automatic encryption

If the security realm has a TLS/SSL identity, the endpoint will automatically enable TLS for all protocols.

Encrypted properties security realm

The properties realm that is part of the default configuration has been greatly improved in Infinispan 11. The passwords are now stored in multiple encrypted formats in order to support the various DIGEST, SCRAM and PLAIN/BASIC mechanisms.

The user functionality that is now built into the CLI allows easy creation and manipulation of users, passwords and groups:

    [disconnected]> user create --password=secret --groups=admin admin
    [disconnected]> connect --username=admin --password=secret
    [ispn-29934@cluster//containers/default]> user ls
    [ "admin" ]
    [ispn-29934@cluster//containers/default]> user describe admin
    { username: "admin", realm: "default", groups = [admin] }
    [ispn-29934@cluster//containers/default]> user password admin
    Set a password for the user: ******
    Confirm the password for the user: ******
    [ispn-29934@cluster//containers/default]>

Authorization: simplified

Authorization is another security aspect of Infinispan. In previous versions, setting up authorization was complicated by the need to add all the needed roles to each cache:

    <infinispan>
      <cache-container name="default">
        <security>
          <authorization>
            <identity-role-mapper/>
            <role name="AdminRole" permissions="ALL"/>
            <role name="ReaderRole" permissions="READ"/>
            <role name="WriterRole" permissions="WRITE"/>
            <role name="SupervisorRole" permissions="READ WRITE EXEC BULK_READ"/>
          </authorization>
        </security>
        <distributed-cache name="secured">
          <security>
            <authorization roles="AdminRole ReaderRole WriterRole SupervisorRole"/>
          </security>
        </distributed-cache>
      </cache-container>
      ...
    </infinispan>

With Infinispan 11 you can avoid specifying all the roles at the cache level: just enable authorization and all roles will implicitly apply. As you can see, the cache definition is much more concise:

    <infinispan>
      <cache-container name="default">
        ...
        <distributed-cache name="secured">
          <security>
            <authorization/>
          </security>
        </distributed-cache>
      </cache-container>
      ...
    </infinispan>

Conclusions

Tags: server security

Sunday, 10 September 2017

Multi-tenancy - Infinispan as a Service (also on OpenShift)

In recent years, the Software as a Service concept has gained a lot of traction. I’m pretty sure you’ve used it many times before. Let’s take a look at a practical example and explain what’s going on behind the scenes.

Practical example - photo album application

Imagine a very simple photo album application hosted within the cloud. Upon the first usage you are asked to create an account. Once you sign up, a new tenant is created for you in the application with all necessary details and some dedicated storage just for you. Starting from this point you can start using the album - download and upload photos.

The software provider that created the photo album application can also celebrate. They have a new client! But with a new client the system needs to increase its capacity to ensure it can store all those lovely photos. There are also other concerns - how to prevent leaking photos and other data from one account into another? And finally, since all the content will be transferred through the Internet, how to secure transmission?

As you can see, multi-tenancy is not as easy as it might seem. The good news is that, if it’s properly configured and secured, it can be beneficial both for the client and for the software provider.

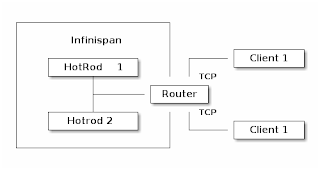

Multi-tenancy in Infinispan

Let’s think about our photo album application again for a moment. Whenever a new client signs up, we need to create a new account for them and dedicate some storage. In Infinispan terms, this means creating a new CacheContainer. Within a CacheContainer we can create multiple Caches for user details, metadata and photos.

You might be wondering why creating a new Cache is not sufficient. When a Hot Rod client connects to a cluster, it connects to a CacheContainer exposed via a Hot Rod Endpoint, and such a client has access to all of its Caches. In our example, your friends could possibly see your photos. That’s definitely not good! So we need to create a CacheContainer per tenant.

Before we introduced multi-tenancy, you could expose each CacheContainer on a separate port (using a separate Hot Rod Endpoint for each of them). In many scenarios this is impractical because of the proliferation of ports. For this reason we introduced the Router concept. It allows multiple clients to access their own CacheContainers through a single endpoint and also prevents them from accessing data which doesn’t belong to them.

The final piece of the puzzle is transmitting sensitive data through an unsecured channel such as the Internet. The use of TLS encryption solves this problem. The final outcome should look like the following:

The Router component in the diagram above is responsible for recognizing data from each client and redirecting it to the appropriate Hot Rod endpoint. As the name implies, the router inspects incoming traffic and reroutes it to the appropriate underlying CacheContainer. To do this it can use two different strategies depending on the protocol: TLS/SNI for the Hot Rod protocol, which matches each server certificate to a specific cache container, and path prefixes for REST. The SNI strategy detects the SNI Host Name (which is used as the tenant name) and also requires TLS certificates to match. By creating proper trust stores we can control which tenant can access which CacheContainers. The URL path prefix strategy is very easy to understand, but it is also less secure unless you enable authentication. For this reason it should not be used in production unless you know what you are doing (the SNI strategy for the REST endpoint will be implemented in the near future). Each client has its own unique REST path prefix that needs to be used for accessing the data (e.g. http://127.0.0.1:8080/rest/client1/fotos/2).
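To make the path prefix strategy concrete, the Java sketch below splits the example URL into its parts. It assumes the layout `/rest/{prefix}/{cache}/{key}`, which is inferred from that single example URL. The class and method names are hypothetical, and this is an illustration rather than the router's actual implementation.

```java
import java.net.URI;

// Illustration only: a hypothetical parser, not the Infinispan router.
final class RestTenantPath {
    final String tenant; // path prefix identifying the tenant, e.g. "client1"
    final String cache;  // cache name, e.g. "fotos"
    final String key;    // entry key, e.g. "2"

    private RestTenantPath(String tenant, String cache, String key) {
        this.tenant = tenant;
        this.cache = cache;
        this.key = key;
    }

    // Parses a URL whose path is assumed to look like /rest/{prefix}/{cache}/{key}.
    static RestTenantPath parse(String url) {
        String path = URI.create(url).getPath();
        // A leading slash yields an empty first element when splitting.
        String[] parts = path.split("/");
        if (parts.length != 5 || !parts[0].isEmpty() || !"rest".equals(parts[1])) {
            throw new IllegalArgumentException("Unexpected REST path: " + path);
        }
        return new RestTenantPath(parts[2], parts[3], parts[4]);
    }

    public static void main(String[] args) {
        RestTenantPath p = parse("http://127.0.0.1:8080/rest/client1/fotos/2");
        System.out.println(p.tenant + " / " + p.cache + " / " + p.key);
    }
}
```

Because the prefix is just part of the URL, any client can type another tenant's prefix. That is why the text above recommends enabling authentication with this strategy.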

Confused? Let’s clarify this with an example.

Foto application sample configuration

The first step is to generate proper key/trust stores for the server and client:

The next step is to configure the server. The snippet below shows only the most important parts:

Let’s analyze the most critical lines:

- 7, 15 - We need to add generated key stores to the server identities
- 25, 30 - It is highly recommended to use separate CacheContainers
- 38, 39 - A Hot Rod connector (but without socket binding) is required to provide proper mapping to CacheContainer. You can also use many useful settings on this level (like ignored caches or authentication).
- 42 - Router definition which binds into default Hot Rod and REST ports.
- 44 - 46 - The most important bit which states that only a client using SSLRealm1 (which uses trust store corresponding to client_1_server_keystore.jks) and TLS/SNI Host name client-1 can access Hot Rod endpoint named multi-tenant-hotrod-1 (which points to CacheContainer multi-tenancy-1).

Improving the application by using OpenShift

Hint: You might be interested in looking at our previous blog posts about hosting Infinispan on OpenShift. You may find them at the bottom of the page.

So far we’ve learned how to create and configure a new CacheContainer per tenant. But we also need to remember that system capacity needs to be increased with each new tenant. OpenShift is a perfect tool for scaling the system up and down. The configuration we created in the previous step almost matches our needs but needs some tuning.

As we mentioned earlier, we need to encrypt transport between the client and the server. The main disadvantage is that the OpenShift Router will not be able to inspect the encrypted traffic and make routing decisions based on it. A passthrough Route fits perfectly in this scenario but requires creating TLS/SNI Host Names as Fully Qualified Application Names. So if you start OpenShift locally (using oc cluster up) the tenant names will look like the following: client-1-fotoalbum.192.168.0.17.nip.io.

We also need to think about how to store the generated key stores. The easiest way is to use Secrets:

Finally, a full DeploymentConfiguration:

If you’re interested in playing with the demo by yourself, you might find a working example here. It mainly targets OpenShift but the concept and configuration are also applicable for local deployment.

Tags: security hotrod server multi-tenancy rest

Monday, 19 June 2017

Cache operations impersonation: do as I say (or maybe as she says)

The implementation of cache authorization in Infinispan has traditionally followed the JAAS model of wrapping calls in a PrivilegedAction invoked through Subject.doAs(). This led to the following cumbersome pattern:
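A minimal sketch of that pattern, using only the JDK: a `ConcurrentHashMap` stands in for the Infinispan cache, and `DoAsPattern` and `putAs` are hypothetical names chosen for this illustration. Recent JDK releases deprecate `Subject.doAs`, so this reflects the API as it was commonly used at the time of the post.

```java
import java.security.PrivilegedAction;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import javax.security.auth.Subject;

// Hypothetical illustration of the JAAS wrapping pattern; the Map is a stand-in for a cache.
final class DoAsPattern {

    // Every single cache call has to be wrapped in a PrivilegedAction and run via doAs.
    static String putAs(Subject subject, Map<String, String> cache, String key, String value) {
        // The cast picks the PrivilegedAction overload of doAs for the lambda.
        return Subject.doAs(subject, (PrivilegedAction<String>) () -> cache.put(key, value));
    }

    public static void main(String[] args) {
        Subject subject = new Subject(); // an empty Subject, enough for the sketch
        Map<String, String> cache = new ConcurrentHashMap<>();
        putAs(subject, cache, "key", "value");
        System.out.println(cache);
    }
}
```

Even in this reduced form, a one-line `put` turns into an action object plus a static call, which is the readability problem the post describes.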

We also provided an implementation which, instead of relying on enabling the SecurityManager, could use a lighter and faster ThreadLocal for storing the Subject:

While this solves the performance issue, it still leads to unreadable code. This is why, in Infinispan 9.1 we have introduced a new way to perform authorization on caches:

Obviously, for multiple invocations, you can hold on to the "impersonated" cache and reuse it:

We hope this will make your life simpler and your code more readable!

Tags: security API

Tuesday, 21 March 2017

Docker image security changes

In the latest 9.0.0.CR3 version, the Infinispan REST endpoint is secured by default, and in order to facilitate remote access, the Docker image has some security-related changes.

The image now creates a default user login upon start; this user can be changed via environment variables if desired:

You can check if the settings are in place by manipulating data via REST. Trying to do a curl without credentials should lead to a 401 response:

So make sure to always include the credentials from now on when interacting with the REST endpoint! If using curl, this is the syntax:
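For clients that do not go through curl, the same idea can be sketched in Java with the JDK's `java.net.http` API (Java 11 or later). This assumes the endpoint accepts BASIC authentication. The class and method names are hypothetical, and any URL, user name or password passed in is a placeholder to replace with the values of your own container.

```java
import java.net.URI;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper; assumes the REST endpoint is protected with BASIC authentication.
final class RestCredentials {

    // Builds the value of the Authorization header: "Basic " + base64(user:password).
    static String basicAuthHeader(String user, String password) {
        String pair = user + ":" + password;
        return "Basic " + Base64.getEncoder().encodeToString(pair.getBytes(StandardCharsets.UTF_8));
    }

    // Builds (but does not send) a GET request that carries the credentials.
    static HttpRequest authenticatedGet(String url, String user, String password) {
        return HttpRequest.newBuilder(URI.create(url))
            .header("Authorization", basicAuthHeader(user, password))
            .GET()
            .build();
    }
}
```

The request can then be sent with `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())`. Leaving the header out should produce the 401 response mentioned above.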

And that’s all for this post. To find out more about the Infinispan Docker image, check the documentation, give it a try and let us know if you have any issues or suggestions!

Tags: docker security server rest

Thursday, 23 February 2017

Node.js client 0.4.0 released with encryption and cross-site failover

We’ve just released Infinispan Node.js Client version 0.4.0 which comes with encrypted client connectivity via SSL/TLS (with optional TLS/SNI support), as well as cross-site client failover.

Thanks to the encryption integration, Node.js Hot Rod clients can talk to Hot Rod servers via an encrypted channel, allowing trusted and/or authenticated clients to connect. Check the documentation for information on how to enable encryption in the Node.js Hot Rod client.

Also, we’ve added the possibility for the client to connect to multiple clusters. Normally, the client is connected to a single cluster, but if all nodes fail to respond, the client can fail over to a different cluster, as long as one or more initial addresses have been provided for it. Clients can also manually switch clusters using the switchToCluster and switchToDefaultCluster APIs. Check the documentation for more info.

Finally, we’ve applied several bug fixes that further tighten the inner workings of the Node.js client.

If you’re a Node.js user and want to store data remotely in Infinispan Server instances, please give the client a go and tell us what you think of it via our forum, via our issue tracker or via IRC on the #infinispan channel on Freenode.

Tags: release security xsite javascript js-client node.js